Mistral AI introduces 'Mistral 3,' a family of high-performance open-source AI models developed in partnership with NVIDIA and optimized for a wide range of uses

French AI company Mistral AI has announced its next-generation family of AI models, 'Mistral 3.' The open-source models range from small to very large and are optimized for NVIDIA GPUs, letting users run cutting-edge AI both on local devices and in the cloud.

Introducing Mistral 3 | Mistral AI

Introducing the Mistral 3 family of models: Frontier intelligence at all sizes. Apache 2.0. Details in 🧵 pic.twitter.com/lsrDmhW78u

— Mistral AI (@MistralAI) December 2, 2025

NVIDIA Partners With Mistral AI to Accelerate New Family of Open Models | NVIDIA Blog

https://blogs.nvidia.com/blog/mistral-frontier-open-models/?linkId=100000395318982

Mistral 3 includes three small, cutting-edge dense models, '14B,' '8B,' and '3B,' as well as Mistral Large 3, Mistral AI's most powerful model yet. Mistral Large 3 is a Mixture of Experts (MoE) model with 41 billion active parameters, 675 billion total parameters, and a large context window of 256,000 tokens. Rather than activating the entire network for every token, an MoE model routes each token to only the most relevant parts of the model (its 'experts'), which lets it maintain high accuracy while keeping computation efficient.
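To make the idea of 'only activating part of the model' concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not Mistral Large 3's actual router: the number of experts, the `top_k` value, the gate weights, and the toy 'experts' (which simply scale their input) are all hypothetical values chosen for illustration. The last lines use the reported figures to show that 41 billion active out of 675 billion total parameters means only about 6% of the weights are used per token.

```python
import math


def softmax(values):
    # Subtract the max before exponentiating for numerical stability.
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(token, gate_weights, experts, top_k=2):
    """Run one token through only its top_k highest-scoring experts.

    token: list of floats (the token's hidden vector)
    gate_weights: one hypothetical router vector per expert
    experts: hypothetical callables mapping a vector to a vector
    """
    # Router score for each expert: dot product of the token with its gate vector.
    scores = [sum(t * w for t, w in zip(token, g)) for g in gate_weights]
    # Keep only the top_k experts; the remaining experts are never evaluated.
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Turn the chosen experts' scores into mixing weights that sum to 1.
    weights = softmax([scores[i] for i in chosen])
    output = [0.0] * len(token)
    for w, i in zip(weights, chosen):
        expert_out = experts[i](token)
        output = [o + w * e for o, e in zip(output, expert_out)]
    return output, chosen


# Toy setup (hypothetical): 4 experts, each scaling the input by a different factor.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
out, chosen = route_token([2.0, 1.0], gate_weights, experts, top_k=2)

# Share of parameters active per token, from the reported Mistral Large 3 figures.
active_share = 41e9 / 675e9
print(chosen, round(active_share * 100, 1))  # prints: [0, 3] 6.1
```

Renormalizing the softmax over only the selected experts is one common design choice; it keeps the mixing weights summing to 1 no matter how many experts are skipped.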

Mistral Large 3 is billed as a best-in-class permissively licensed open-weight model and was trained from scratch on 3,000 NVIDIA H200 GPUs. According to NVIDIA, combining the NVIDIA GB200 NVL72 system with Mistral AI's MoE architecture allows large-scale AI models to be deployed and scaled efficiently while taking advantage of massive parallel processing and hardware optimization. Mistral Large 3 has also been reported to achieve a 10x performance gain on the GB200 NVL72 compared with the previous-generation NVIDIA H200.

After instruction tuning, Mistral Large 3 performed on par with other leading open-weight models on general prompts and was reported to deliver best-in-class performance in image understanding and multilingual conversation (in languages other than English and Chinese).

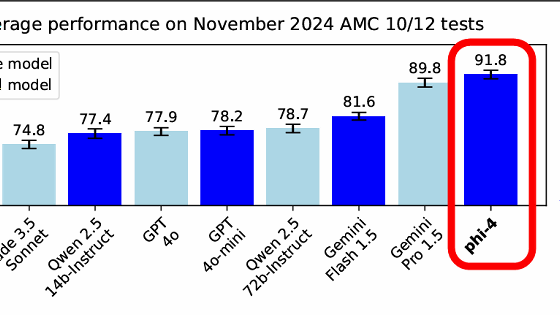

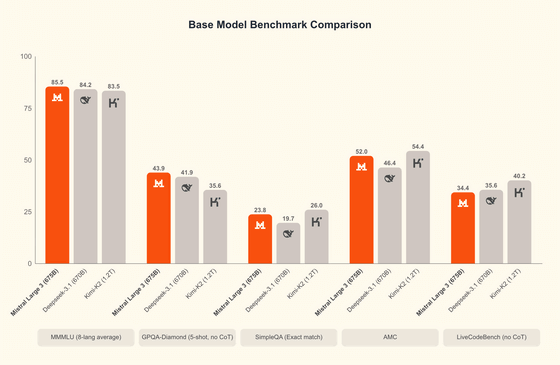

The graph below compares Mistral Large 3's benchmark results with those of DeepSeek 3.1 and Kimi-K2, two high-performance AI models from China. Across all five benchmarks, Mistral Large 3 performed as well as or better than the other models.

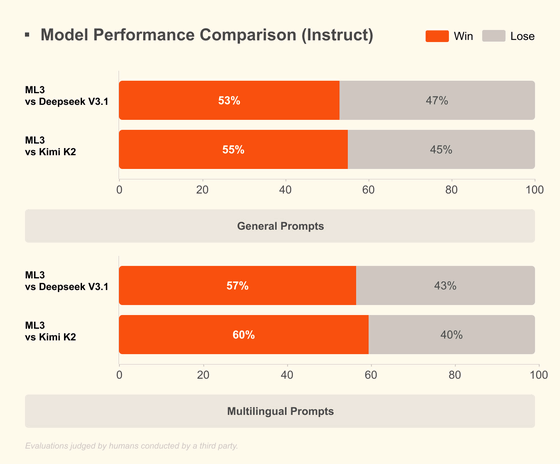

The following figure compares Mistral Large 3, DeepSeek 3.1, and Kimi-K2 on both general and multilingual prompts, using each model's instruction-tuned (Instruct) version, which is trained to respond appropriately to user prompts. In both cases, Mistral Large 3 outperforms the other models.

The Mistral 3 family also includes the Ministral 3 series for edge and local use cases, available in three sizes. The following benchmark shows GPQA Diamond accuracy, a measure of an AI model's reasoning ability, with model size on the horizontal axis and accuracy on the vertical axis. All three Mistral models are advertised as outperforming

The Mistral 3 models are open source and available for download from Hugging Face.

Mistral Large 3 - a mistralai Collection

https://huggingface.co/collections/mistralai/mistral-large-3

Ministral 3 - a mistralai Collection

https://huggingface.co/collections/mistralai/ministral-3


in AI, Posted by log1e_dh