Mistral AI announces 'Mistral Small 3.1', a multilingual, multimodal open-source AI model with 24 billion parameters that runs on 32GB of RAM and is said to outperform Gemma 3 and GPT-4o mini

AI company Mistral AI has announced a model called 'Mistral Small 3.1'. It features a 128K-token context length and 24 billion parameters, yet is lightweight enough to run on a single RTX 4090 or a Mac with 32GB of RAM.

Mistral Small 3.1 | Mistral AI

https://mistral.ai/news/mistral-small-3-1

Mistral Small 3.1 is a multimodal AI model that understands both text and images. It has a context length of up to 128K tokens, 24 billion parameters, an inference speed of 150 tokens per second, and support for dozens of languages, including English and Japanese. It is released under the permissive Apache 2.0 license, so it can be used relatively freely for both commercial and non-commercial purposes.

The base model is 'Mistral Small 3', which Mistral AI released in January 2025. By building on Mistral Small 3 while keeping it lightweight, low-latency, and cost-effective, Mistral AI says Mistral Small 3.1 achieves performance that surpasses comparable models such as Google's 'Gemma 3' and OpenAI's 'GPT-4o mini'.

'Mistral Small 3', a latency-focused AI model capable of high-speed inference, is released - GIGAZINE

Specific use cases include conversational assistance, image understanding, and function calling. Mistral AI said, 'Mistral Small 3.1 is a versatile model designed to handle a wide range of generative AI tasks. It runs on a single RTX 4090 or a Mac with 32GB of RAM, making it ideal for on-device use cases. It is useful in situations where fast responses are important, when running automated workflows, and in highly specialized domains such as legal consultation and medical diagnosis.'
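As a rough sanity check on the hardware claim, the sketch below estimates how much memory the weights of a 24-billion-parameter model occupy at a few common precisions. It is a back-of-the-envelope calculation under stated assumptions: memory is approximated as parameter count times bytes per parameter, and the KV cache, activations, and runtime overhead are ignored. The precision labels are illustrative; the article does not say which precision Mistral AI assumes for its RTX 4090 or 32GB Mac figures.

```python
# Rough, weight-only memory estimate for a 24-billion-parameter model.
# Assumption: memory ~= parameter count x bytes per parameter.
# KV cache, activations, and runtime overhead are NOT included.

PARAMS = 24e9  # parameter count stated for Mistral Small 3.1

# Illustrative precisions (assumed, not taken from the announcement).
BYTES_PER_PARAM = {
    "bf16": 2.0,   # 16-bit floating point
    "int8": 1.0,   # 8-bit quantization
    "4-bit": 0.5,  # 4-bit quantization
}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, nbytes in BYTES_PER_PARAM.items():
        print(f"{name:>6}: {weight_memory_gb(PARAMS, nbytes):.0f} GB")
```

Under these assumptions, 16-bit weights alone come to about 48 GB, more than the 24 GB of VRAM on an RTX 4090, while 8-bit and 4-bit weights come to about 24 GB and 12 GB. This suggests that running the model on such hardware likely relies on quantized weights, though the precision actually used is not stated in the announcement.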

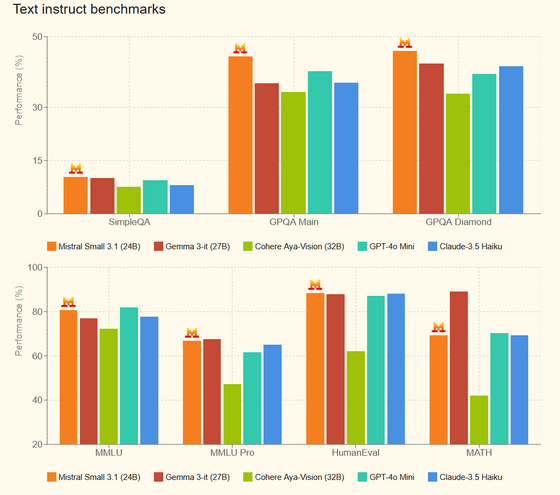

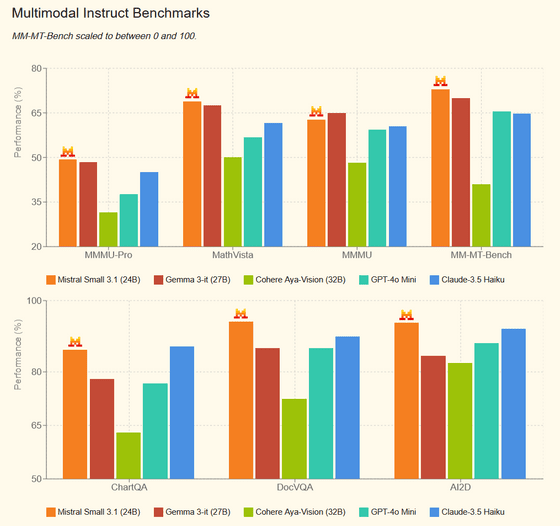

In text instruction-following benchmarks, it performs roughly on par with or better than Gemma 3-it (27B), Cohere Aya-Vision (32B), GPT-4o mini, and Claude-3.5 Haiku, with the exception of the MATH benchmark, which measures mathematical problem-solving ability.

The multimodal benchmark results look like this.

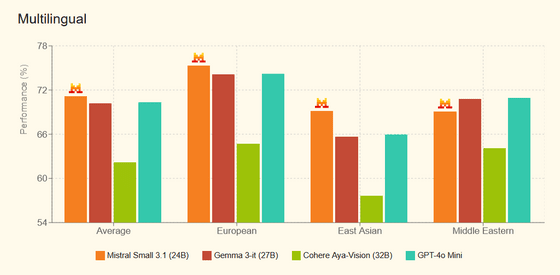

It also shows strong performance in multilingual understanding.

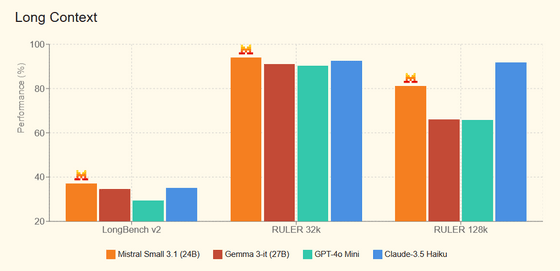

The long-context benchmark results look like this:

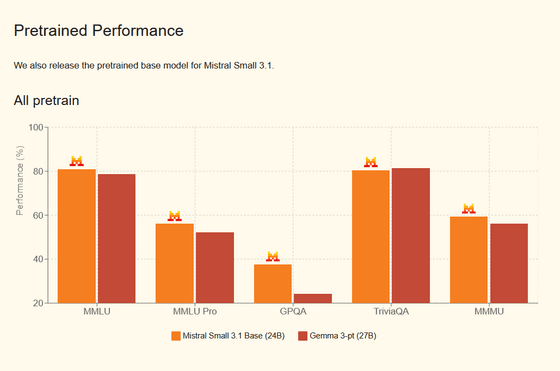

In a comparison of pretrained models, it outperforms Gemma 3-it (27B) in most tests.

Mistral Small 3.1 is available for download from the Hugging Face website, as well as on Mistral AI's developer playground 'La Plateforme' and Google Cloud Vertex AI.

We're excited to welcome @MistralAI's newest model, Mistral Small 3.1, to Vertex AI!

Mistral Small 3.1 is an #OpenSource, multimodal model designed for programming, mathematical reasoning, document understanding, visual understanding, and more → https://t.co/a4hNhrnKBH pic.twitter.com/u7htWyEWcT

— Google Cloud Tech (@GoogleCloudTech) March 17, 2025


in Software, Posted by log1p_kr