Sony releases 'Fair Human-Centric Image Benchmark (FHIBE)' dataset for evaluating the fairness of AI models

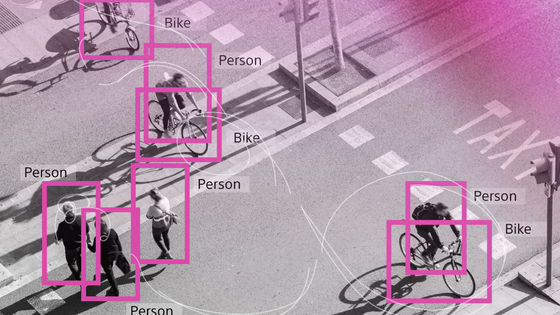

Computer vision is used in a wide range of fields, including self-driving cars and smartphones, but many datasets still suffer from bias, a lack of diversity, and collection practices that are not ethically responsible, such as using photos for AI training without the subjects' consent. The Fair Human-Centric Image Benchmark (FHIBE), released by Sony AI, is the first publicly available, diverse, and subject-consented dataset built specifically to assess fairness in human-centric computer vision tasks.

FHIBE

Sony AI releases 'FHIBE (Fair Human-Centric Image Benchmark),' an innovative dataset for fairness evaluation

https://ai.sony/ja/articles/Fairness-Evaluation-Dataset-From-Sony-AI/

Fair human-centric image dataset for ethical AI benchmarking | Nature

https://www.nature.com/articles/s41586-025-09716-2

A Fair Reflection: Sony's Quest to Make AI Models Work for Everyone - YouTube

https://www.youtube.com/watch?v=_V4SxY5Kqfk

Sony shares bias-busting benchmark for AI vision models • The Register

https://www.theregister.com/2025/11/05/sony_ai_vision_model_benchmark/

Bias assessment is essential for ethical AI, but most assessment datasets have problems such as a lack of subject consent and a lack of detailed annotations. FHIBE is a responsibly curated, consent-based dataset designed to advance fairness in computer vision.

Note that FHIBE may be used only for fairness and bias testing, not for training AI models, although it can be used to train bias-mitigation tools. Its main features are as follows:

・Consent

FHIBE obtains informed and revocable consent from all participants and its data collection is designed to comply with data protection laws.

・Compensation

Image subjects, annotators, and quality assurance personnel are fairly compensated at or above the local minimum wage, and participants can withdraw their data at any time without affecting compensation.

・Diversity

FHIBE maximizes diversity in demographics, appearance, pose, and environment. The dataset includes detailed data on age, pronouns, ancestry, skin color, eye color, hair type, and visible markers to support fine-grained bias analysis.

・Comprehensive annotations

FHIBE contains pixel-level labels, 33 keypoints, and 28 segmentation categories linked to anonymized annotator IDs.

・Practicality

FHIBE is designed for fairness assessment across pose estimation, face and body detection, segmentation, image synthesis, and vision-language models.

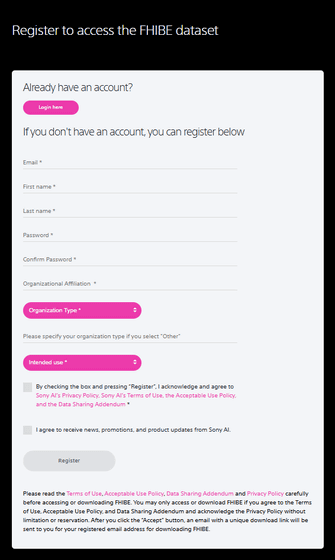

To use FHIBE, you must agree to the terms of use and create an account.

Register | The Fair Human-Centric Image Benchmark

https://fairnessbenchmark.ai.sony/register

Sony AI, Sony's AI research lab, said of FHIBE: 'Ethical data collection is an extremely difficult challenge, but FHIBE is the first dataset of this nature and will set a new benchmark for the community.'

FHIBE contains 10,318 images from 1,981 people across 81 locations. Each image features one or two subjects, with most participants providing around six images. All images were provided directly by the subjects themselves.

To protect privacy, any individuals who appear in an image without having given consent are fully anonymized, and all personally identifiable information (PII) is removed, minimizing the risk of re-identification.

The detailed annotation information and metadata contained in FHIBE are as follows:

・Self-reported subject attributes (reported by the subjects themselves rather than guessed by annotators)

・Face and person bounding boxes

・33 body and facial keypoints

・28 segmentation categories

・Anonymized annotator IDs (to protect the identities of the people who annotated the data)

FHIBE supports fairness assessments in the following areas:

・Human pose estimation

・Face and body detection

・Segmentation

・Personal authentication and verification

・Image editing and generation

・Vision-language models
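Because FHIBE's attributes are self-reported, results can be broken down by group. As a rough illustration only, the sketch below shows the basic idea of such a disaggregated evaluation: compute a model's accuracy separately for each group of one attribute, then report the gap between the best- and worst-served groups. The record layout, the field names `age_group` and `correct`, and the toy data are hypothetical assumptions, not FHIBE's actual schema, tooling, or results; real audits typically use task-specific metrics and several attributes.

```python
from collections import defaultdict


def per_group_accuracy(records, attribute):
    """Accuracy of a model's predictions, disaggregated by one attribute.

    Hypothetical input format: each record is a dict holding the group label
    under `attribute` and a boolean "correct" flag for the model's output.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for record in records:
        group = record[attribute]
        totals[group] += 1
        hits[group] += int(record["correct"])
    return {group: hits[group] / totals[group] for group in totals}


def max_accuracy_gap(accuracy_by_group):
    """Difference between the best- and worst-served groups (0.0 means parity)."""
    values = list(accuracy_by_group.values())
    return max(values) - min(values)


if __name__ == "__main__":
    # Toy, made-up evaluation results; not FHIBE data.
    records = [
        {"age_group": "18-29", "correct": True},
        {"age_group": "18-29", "correct": True},
        {"age_group": "60+", "correct": True},
        {"age_group": "60+", "correct": False},
    ]
    accuracy = per_group_accuracy(records, "age_group")
    print(accuracy)                    # {'18-29': 1.0, '60+': 0.5}
    print(max_accuracy_gap(accuracy))  # 0.5
```

A gap near zero suggests similar performance across the groups of that attribute, while a large gap flags a disparity worth investigating.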

Additionally, if you use FHIBE for academic research, you must cite the FHIBE paper.

'FHIBE demonstrates that prioritizing ethical AI can lead to fair and responsible practices. AI is rapidly evolving, and it's essential to examine the ethical implications of how data is collected and used. For too long, the industry has relied on datasets that lack diversity, reinforce bias, and are collected without proper consent,' said Alice Xiang, lead research scientist for AI ethics at Sony AI. 'This project comes at a critical time, demonstrating that responsible data collection is possible, incorporating best practices for informed consent, privacy, fair compensation, safety, diversity, and usefulness. FHIBE is an important step forward for the AI industry, but it's only the beginning. We are setting a new precedent for the future advancement of fair, transparent, and accountable AI.'

in AI, Posted by logu_ii