Wikimedia Foundation releases risk assessment report on AI, machine learning, and more

The Wikimedia Foundation, whose mission is to 'enable everyone, everywhere to freely and openly access and share trusted information,' and which believes that 'access to knowledge is a human right,' has released the contents of its 2024 Human Rights Impact Assessment Report on AI and Machine Learning (ML) to help understand how AI and ML may affect Wikipedia and other Wikimedia projects.

Making sure AI serves people and knowledge stays human: Wikimedia Foundation publishes a Human Rights Impact Assessment on the interaction of AI and machine learning with Wikimedia projects – Diff

Wikimedia Foundation Artificial Intelligence and Machine Learning Human Rights Impact Assessment - Meta-Wiki

https://meta.wikimedia.org/wiki/Wikimedia_Foundation_Artificial_Intelligence_and_Machine_Learning_Human_Rights_Impact_Assessment

AI and ML are not new to Wikimedia projects or the volunteers who support them, and they have developed numerous ML tools to help share, edit, and categorize knowledge across the projects. Many of these tools support repetitive tasks such as identifying vandalism and flagging references, and most were developed before the advent of generative AI.

The report assessed risks in three categories: 'AI and ML tools for work support,' 'Potential but minor human rights risks in generative AI and the Wikimedia environment,' and 'Content on Wikimedia projects that may be used for external ML development.'

First, the report positively evaluated the in-house AI/ML tools developed by the Wikimedia Foundation to support volunteer editors, stating that they have the potential to 'positively contribute to multiple human rights, including freedom of expression and the right to education.' However, it also pointed out that known limitations of AI/ML tools could lead to risks such as amplifying and perpetuating existing inequalities and prejudices, or falsely flagging content for deletion. If these risks were to surface on a large scale, they could have a negative impact on Wikimedia volunteers.

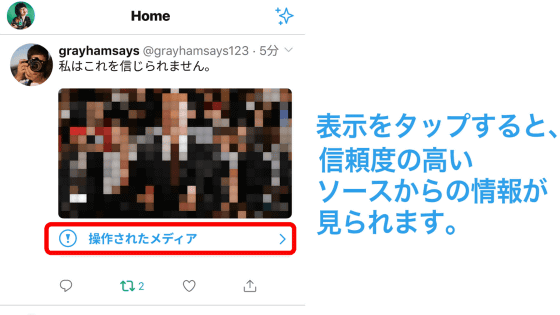

The report notes that the risks posed by external generative AI tools to Wikimedia projects include the increased scale, speed, and sophistication of harmful content. Generative AI tools can automatically generate misleading content simultaneously in multiple languages, making it difficult to detect and moderate. Furthermore, they can generate large amounts of defamatory content targeted at specific individuals or communities. If these risks are not properly mitigated, this could have a negative impact on the rights of Wikimedia volunteers and the general public, the report states.

The report also raises concerns that content from Wikimedia projects, if used to train large language models, could contribute to risks related to bias, representation, data quality and accuracy, privacy, and cultural sensitivity. While the researchers recommend monitoring for these potential risks, they also acknowledge that ongoing data quality improvement initiatives and fairness-focused programs are already in place to mitigate them.

Elon Musk, who has criticized Wikipedia for being 'too woke,' has revealed that he is building a Wikipedia-like encyclopedia service called 'Grokipedia' at his AI company xAI. However, details about Grokipedia, such as whether its content will be entirely AI-generated by Grok, have not been made clear, and there are concerns about bias in its content.

We are building Grokipedia @xAI.

Will be a massive improvement over Wikipedia.

Frankly, it is a necessary step towards the xAI goal of understanding the Universe. https://t.co/xvSeWkpALy

— Elon Musk (@elonmusk) September 30, 2025


in Note, Web Service, Posted by logc_nt