Chinese AI company DeepSeek releases DeepSeek-V3.2-Exp, an AI model that can handle large inputs at low cost

DeepSeek, a China-based AI company, has announced the release of DeepSeek-V3.2-Exp, an AI model optimized for large inputs. According to DeepSeek, DeepSeek-V3.2-Exp processes long inputs with significantly better computational efficiency than its predecessor, DeepSeek-V3.1-Terminus, while achieving nearly the same benchmark scores.

🚀 Introducing DeepSeek-V3.2-Exp — our latest experimental model!

✨ Built on V3.1-Terminus, it debuts DeepSeek Sparse Attention(DSA) for faster, more efficient training & inference on long context.

👉 Now live on App, Web, and API.

💰 API prices cut by 50%+!

1/n

— DeepSeek (@deepseek_ai) September 29, 2025

DeepSeek-V3.2-Exp is built on 'DeepSeek-V3.1-Terminus,' an updated version of 'DeepSeek-V3.1,' and is positioned as an experimental model. It introduces 'DeepSeek Sparse Attention' (DSA), a sparse attention mechanism designed to make training and inference on long contexts more efficient.
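To illustrate the general idea behind sparse attention, here is a minimal pure-Python sketch of top-k sparse attention for a single query. A scoring pass ranks all candidate tokens, and ordinary softmax attention is then computed over only the k highest-scoring ones. This is a generic illustration, not DeepSeek's DSA implementation. The function names, the use of the same scaled dot product for both selection and attention, and the choice of k are all assumptions made for illustration.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def topk_sparse_attention(query: Vector, keys: List[Vector],
                          values: List[Vector], k: int) -> List[float]:
    """Attend from one query to at most k keys (hypothetical helper).

    1. Score every key (here with the same scaled dot product; a real
       system could use a separate, cheaper scorer for this step).
    2. Keep only the indices of the k highest scores.
    3. Run ordinary softmax attention over that subset only.
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [dot(query, key) * scale for key in keys]
    # Indices of the k largest scores (all keys if there are fewer than k).
    selected = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    # Numerically stable softmax over the selected scores only.
    m = max(scores[i] for i in selected)
    weights = [math.exp(scores[i] - m) for i in selected]
    total = sum(weights)
    out = [0.0] * len(values[0])
    for w, i in zip(weights, selected):
        for d in range(len(out)):
            out[d] += (w / total) * values[i][d]
    return out
```

When k is at least the number of keys, the function reduces to ordinary dense attention; the savings come from keeping k fixed while the context grows.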

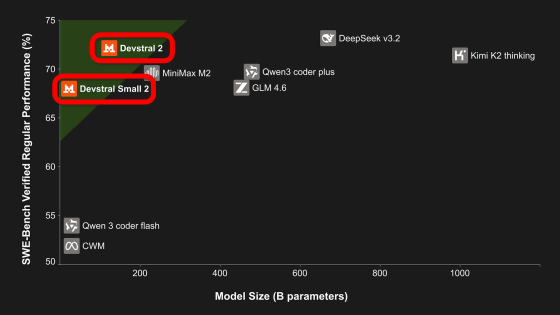

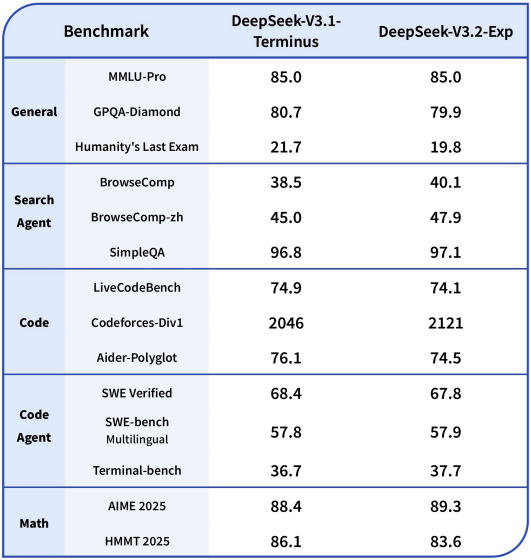

To verify the effectiveness of DeepSeek Sparse Attention, DeepSeek-V3.2-Exp was trained with the same configuration as DeepSeek-V3.1-Terminus. The benchmark comparison table for the two models shows that their scores are almost identical.

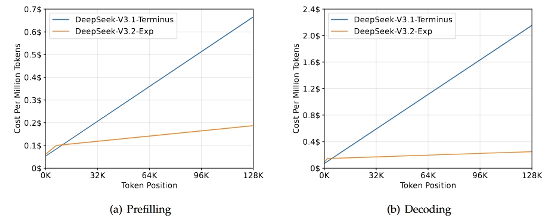

On the other hand, the two models differ significantly in computational efficiency and cost when handling long inputs. DeepSeek's cost graph compares DeepSeek-V3.2-Exp (orange) with DeepSeek-V3.1-Terminus (blue): the horizontal axis shows the number of tokens being processed, and the vertical axis shows the cost per million tokens. As the token count grows, the cost of DeepSeek-V3.2-Exp rises far more slowly than that of DeepSeek-V3.1-Terminus.
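A back-of-the-envelope count helps explain why the curves diverge. In causal dense attention, the token at position t attends to all t positions up to itself, so the total number of query-key pairs grows quadratically with length. If each token instead attends to at most k selected tokens, that count grows roughly linearly once the length exceeds k. The sketch below counts only query-key pairs in the core attention step; it ignores the cost of selecting the k tokens and all non-attention compute, and the value k=2048 in the example is an illustrative assumption rather than a figure from this article.

```python
def dense_pairs(length: int) -> int:
    """Query-key pairs in causal dense attention: token t sees t tokens."""
    return length * (length + 1) // 2


def sparse_pairs(length: int, k: int) -> int:
    """Query-key pairs when each token attends to at most k tokens."""
    if length <= k:
        # No token ever has more than k candidates, so nothing is pruned.
        return dense_pairs(length)
    # Positions 1..k see t tokens each; positions k+1..length see exactly k.
    return dense_pairs(k) + (length - k) * k


for n in (8_192, 32_768, 131_072):
    d, s = dense_pairs(n), sparse_pairs(n, k=2048)
    print(f"{n:>7} tokens: dense/sparse pair ratio = {d / s:.1f}")
```

The ratio widens as the context grows, which matches the qualitative shape of the published graph, though real serving cost also depends on factors this count leaves out.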

The DeepSeek-V3.2-Exp model weights are available at the following link and are released under the permissive MIT License.

deepseek-ai/DeepSeek-V3.2-Exp · Hugging Face

https://huggingface.co/deepseek-ai/DeepSeek-V3.2-Exp

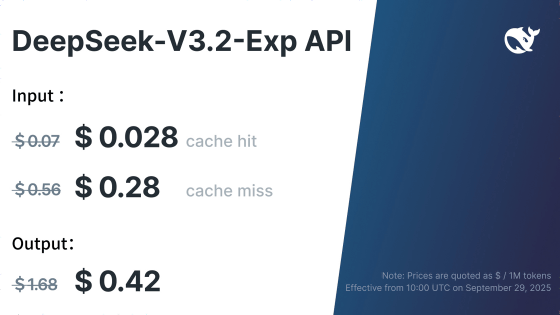

DeepSeek-V3.2-Exp is also available via the API. Usage fees are $0.28 (approximately 41.6 yen) per million tokens for uncached input, $0.028 (approximately 4.16 yen) per million tokens for cached input, and $0.42 (approximately 62.4 yen) per million tokens for output.
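The quoted prices make it easy to estimate what a request costs. The sketch below assumes billing is a simple per-token linear charge using the three rates above; the dictionary keys and the function name are hypothetical, and actual prices may change after the announcement.

```python
# Prices quoted in this article, in US dollars per million tokens.
PRICE_PER_MILLION = {
    "input_cache_miss": 0.28,
    "input_cache_hit": 0.028,
    "output": 0.42,
}


def estimate_cost_usd(cache_miss_tokens: int, cache_hit_tokens: int,
                      output_tokens: int) -> float:
    """Estimate one request's cost, assuming simple linear per-token billing."""
    return (cache_miss_tokens * PRICE_PER_MILLION["input_cache_miss"]
            + cache_hit_tokens * PRICE_PER_MILLION["input_cache_hit"]
            + output_tokens * PRICE_PER_MILLION["output"]) / 1_000_000


# Example: a 100K-token prompt, 80K of which is served from the cache,
# plus a 2K-token answer.
print(f"${estimate_cost_usd(20_000, 80_000, 2_000):.4f}")  # → $0.0087
```

Because cached input costs one tenth of uncached input, workloads that resend the same long prefix benefit most from the cache rate.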

💻 API Update

🎉 Lower costs, same access!

💰 DeepSeek API prices drop 50%+, effective immediately.

🔹 For comparison testing, V3.1-Terminus remains available via a temporary API until Oct 15th, 2025, 15:59 (UTC Time). Details: https://t.co/3RNKA89gHR

🔹 Feedback welcome:… pic.twitter.com/qEdzcQG5bu

— DeepSeek (@deepseek_ai) September 29, 2025
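DeepSeek's API follows the OpenAI-style chat-completions format. The sketch below builds, but does not send, such a request using only the Python standard library. The endpoint URL and the model name `deepseek-chat` reflect DeepSeek's public API documentation as an assumption; which model version sits behind a given name can change, and the temporary V3.1-Terminus endpoint mentioned above is behind the shortened link and is not reproduced here. The helper name is hypothetical.

```python
import json
import urllib.request

# Assumption: DeepSeek's OpenAI-compatible chat endpoint; verify against
# the current API documentation before use.
API_URL = "https://api.deepseek.com/chat/completions"


def build_chat_request(api_key: str, prompt: str,
                       model: str = "deepseek-chat") -> urllib.request.Request:
    """Build (but do not send) a chat-completion HTTP request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("YOUR_API_KEY", "Summarize this document.")
# Sending it would look like this (requires a valid key and network access):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it easy to inspect or log the payload before any tokens are billed.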
