DeepSeek's theoretical cost-benefit ratio is revealed to be 545% per day

Chinese AI company DeepSeek has revealed that the profit margin on its in-house developed AI models 'DeepSeek-V3' and 'DeepSeek-R1' is theoretically up to 545% per day. However, this is only 'theoretical', and the actual profit is expected to be lower.

???? Day 6 of

#OpenSourceWeek : One More Thing – DeepSeek-V3/R1 Inference System Overview

Optimized throughput and latency via:

???? Cross-node EP-powered batch scaling

???? Computation-communication overlap

⚖️ Load balancing

Statistics of DeepSeek's Online Service:

⚡ 73.7k/14.8k… — DeepSeek (@deepseek_ai) March 1, 2025

open-infra-index/202502OpenSourceWeek/day_6_one_more_thing_deepseekV3R1_inference_system_overview.md at main · deepseek-ai/open-infra-index · GitHub

https://github.com/deepseek-ai/open-infra-index/blob/main/202502OpenSourceWeek/day_6_one_more_thing_deepseekV3R1_inference_system_overview.md

China's DeepSeek claims theoretical cost-profit ratio of 545% per day | Reuters

https://www.reuters.com/technology/chinas-deepseek-claims-theoretical-cost-profit-ratio-545-per-day-2025-03-01/

DeepSeek-V3/R1 inference services are provided by NVIDIA's AI GPU 'H800', which complies with China export regulations, for the reason that 'it maintains consistent accuracy with training'. Technically, the matrix multiplication and distribution communication process uses the same FP8 format as training, and the core MLA (Multiple Layer Attention) calculation and joint communication use the BF16 format to ensure optimal performance of the service.

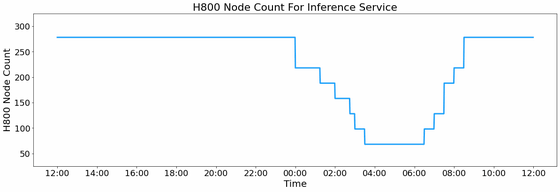

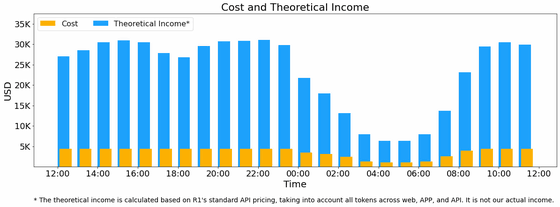

DeepSeek also says that in order to handle high loads during the day and low loads at night, it deploys inference services on all nodes during peak daytime hours and reduces the number of inference nodes during low-load nighttime hours to allocate resources to research and training.

The maximum node occupancy rate of V3 and R1 inference services in the 24 hours from 12:00 on February 27, 2025 to 12:00 on February 28, 2025 reached 278, with an average of 226.75 nodes. Below is a graph showing the number of H800 nodes per hour, with the node occupancy rate decreasing from 0:00 to 8:00. Each node contains eight H800 GPUs, and assuming a lease cost of $2 (approximately 300 yen) per H800 GPU per hour, the total daily cost will be $87,072 (approximately 13 million yen).

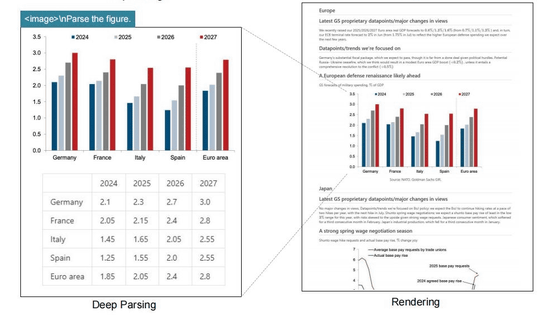

According to DeepSeek, during the same 24-hour statistical period, V3 and R1 processed a total of 608 billion input tokens, of which 56.3%, or 342 billion tokens, hit the KV cache on disk. They also output a total of 168 billion output tokens, with an average output rate of 20-22 tokens per second and an average KV cache length of 4989 tokens per output token. The average throughput per H800 node was approximately 73,700 tokens per second when prefilling and approximately 14,800 tokens per second when decoding. Of course, this data includes all user requests from the web, apps, and APIs.

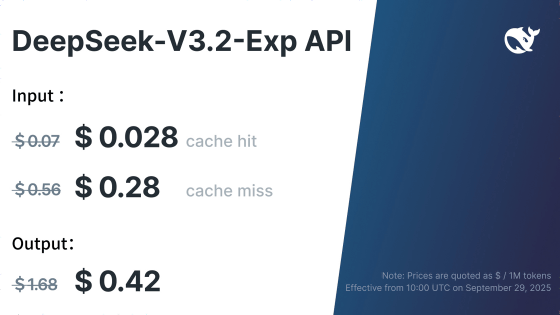

The DeepSeek-R1 fee is $0.14 per million for input tokens on cache hits, $0.55 per million for input tokens on cache misses, and $2.19 per million for output tokens. If all tokens were charged at the DeepSeek-R1 price, DeepSeek reports that total daily revenue would be $562,027, with a cost-benefit ratio of 545%.

Below is a graph showing the cost (orange) and theoretical profit (blue) by hour.

However, DeepSeek said that its actual revenue will be significantly lower than this because the price of DeepSeek-V3 is significantly lower than R1, only part of the service is monetized (the web and app are free), and discounts are applied during off-peak hours.

Reuters points out that 'DeepSeek's announcement could deal a further blow to AI companies outside of China, which have been hit hard by the surge in popularity of chatbots powered by DeepSeek's R1 and V3 around the world.'

Related Posts:

in AI, Software, Web Service, Posted by log1i_yk