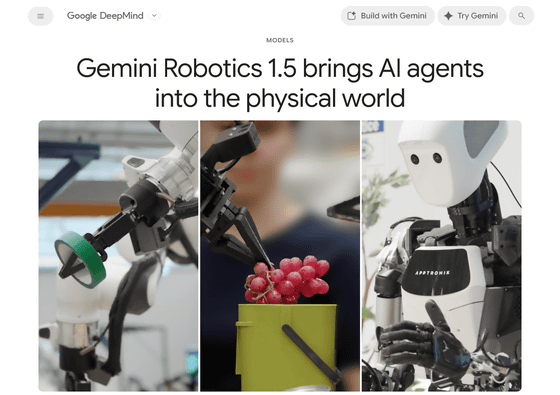

Google announces Gemini Robotics 1.5, an AI model for robots that can think and decide its actions

Gemini Robotics 1.5 brings AI agents into the physical world - Google DeepMind

https://deepmind.google/discover/blog/gemini-robotics-15-brings-ai-agents-into-the-physical-world/

Building the Next Generation of Physical Agents with Gemini Robotics-ER 1.5 - Google Developers Blog

https://developers.googleblog.com/en/building-the-next-generation-of-physical-agents-with-gemini-robotics-er-15/

Gemini Robotics is an AI model based on Gemini 2.0 that adds a 'motion output function' to enable robot control, allowing the AI to perform various actions through verbal commands. Thanks to the world knowledge of the Gemini 2.0 model, it is adept at responding to commands never seen in training, new objects, diverse commands, and new environments.

Google releases Gemini Robotics, an AI model for robotics that lets robots perform tasks simply by giving verbal instructions - GIGAZINE

In June 2025, Google released Gemini Robotics On-Device, which runs without an internet connection. Although it is a small model that operates locally, it can understand natural language instructions and perform precise manipulation such as opening a bag zipper. Because it does not go over the network, it suits tasks that require low latency.

Google announces 'Gemini Robotics On-Device,' a high-performance robot AI model that operates locally without an internet connection - GIGAZINE

On September 25, 2025, Google unveiled a new model, Gemini Robotics 1.5, described as an 'embodied reasoning model' that excels at planning and logical decision-making within physical environments.

We're making robots more capable than ever in the physical world. 🤖

Gemini Robotics 1.5 is a levelled up agentic system that can reason better, plan ahead, use digital tools such as @Google Search, interact with humans and much more. Here's how it works 🧵 pic.twitter.com/JM753eHBzk

— Google DeepMind (@GoogleDeepMind) September 25, 2025

In the demo movie of Gemini Robotics 1.5 released by Google DeepMind, a robot arm equipped with Gemini Robotics 1.5 performs the task of 'sorting three types of fruit onto plates of the same color.'

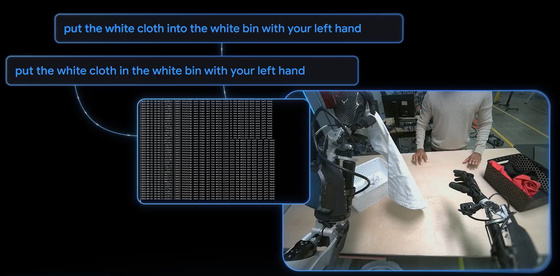

Next, given the task of 'sorting laundry by color,' Gemini Robotics 1.5 placed white clothes in the white basket and colored clothes in the black basket, and completed the task even when the baskets were moved or swapped. It understands the scene visually, then reasons about and executes the actions needed to complete the task, for example: 'To complete the task, white clothes should be placed in the white basket.'

When instructed to 'sort the garbage according to your current location,' the robot checked its location, obtained information on sorting, and sorted the garbage in front of it into multiple trash cans accordingly.

Gemini Robotics 1.5 operates as an agent framework for robots to complete complex, multi-step tasks.

Gemini Robotics-ER 1.5, announced at the same time, takes instructions in natural language, natively calls digital tools, and creates a detailed, multi-step plan for completing the mission. It acts as the 'thinking' model for the physical world: it sends an instruction for each step of the task, which Gemini Robotics 1.5 then executes, creating a 'robot that thinks while it moves.'

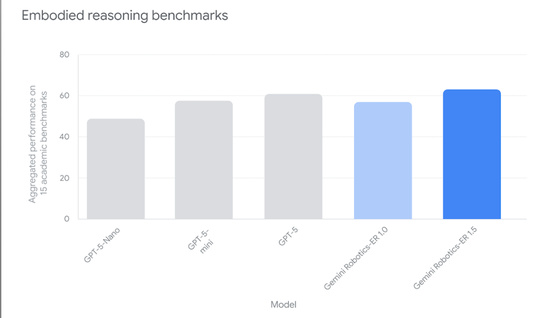
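The division of labor described above, in which one model plans and calls tools while another executes each step, can be sketched roughly as follows. This is only an illustrative toy, not Google's API: `Planner`, `Executor`, `fake_search`, and `run_mission` are hypothetical names, and the sorting rules are canned data made up for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# All names below are hypothetical stand-ins; none of this is Google's API.


def fake_search(query: str) -> str:
    # Canned data for illustration only, not real sorting rules.
    return "bottle:recycling,banana peel:compost,wrapper:trash"


@dataclass
class Planner:
    """Plays the role the article assigns to Gemini Robotics-ER 1.5."""
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def plan(self, mission: str, location: str) -> List[str]:
        # Call a digital tool to look up the rules for the current location.
        rules = self.tools["search"](f"{mission} rules in {location}")
        # Emit one natural-language instruction per step.
        pairs = (pair.split(":") for pair in rules.split(","))
        return [f"put the {item} in the {bin_name} bin" for item, bin_name in pairs]


@dataclass
class Executor:
    """Plays the role the article assigns to Gemini Robotics 1.5."""
    log: List[str] = field(default_factory=list)

    def execute(self, instruction: str) -> bool:
        # A real system would produce motor commands; this only records the step.
        self.log.append(instruction)
        return True


def run_mission(mission: str, location: str, planner: Planner, executor: Executor) -> int:
    """Plan the mission, then execute steps in order; return how many succeeded."""
    done = 0
    for step in planner.plan(mission, location):
        if not executor.execute(step):
            break  # stop at the first failed step
        done += 1
    return done
```

Calling `run_mission("garbage sorting", "example city", Planner(tools={"search": fake_search}), Executor())` walks through the three canned steps in order. Keeping the planner and executor behind separate interfaces mirrors the article's point that the reasoning model and the action model are distinct components.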

Gemini Robotics-ER 1.5 is the first thinking model optimized for embodied reasoning, achieving state-of-the-art performance on both academic and internal benchmarks.

Robots come in a variety of shapes and sizes, with varying sensing capabilities and degrees of freedom, so with the previous version, Gemini Robotics 1.0, behaviors learned on one robot were difficult to transfer to another and often required retraining. Gemini Robotics 1.5, by contrast, can learn across different robot forms, eliminating the need to specialize the AI model for each robot's shape.

While Gemini Robotics 1.0 mapped visual and linguistic input directly to output actions, Gemini Robotics 1.5 adds a mechanism for generating 'thought tokens' in natural language before acting, which improves the success rate on long, complex tasks. Google describes Gemini Robotics 1.5 as an 'agent that thinks before it acts.'
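As a rough illustration of 'thinking before acting,' the toy function below writes out its reasoning in natural language and only then chooses an action, using the laundry demo as the scenario. The function name, the `Step` type, and the white/black basket rule are assumptions made for this sketch; the actual model generates its thoughts with a neural network, not hand-written rules.

```python
from typing import List, NamedTuple


class Step(NamedTuple):
    thoughts: List[str]  # natural-language reasoning produced before acting
    action: str          # the command that would be sent to the robot


def think_then_act(item_color: str, baskets: List[str]) -> Step:
    """Hypothetical rule: whites go to the white basket, the rest to the black one."""
    target = "white" if item_color == "white" else "black"
    thoughts = [
        f"The item is {item_color}.",
        f"To complete the task, {item_color} clothes should be placed in the {target} basket.",
    ]
    # Look up the basket position at action time, so swapped baskets are still handled.
    slot = baskets.index(target)
    return Step(thoughts, f"place item in basket at slot {slot}")
```

Because the basket position is resolved only after the reasoning step, the same call still picks the right slot when the baskets are given in a different order, which loosely mirrors the demo in which the baskets are moved or swapped.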

Google CEO Sundar Pichai said about Gemini Robotics 1.5, 'It enables robots to reason better, plan ahead, use digital tools like search, and transfer learning from one type of robot to another. It's the next big step towards truly useful general-purpose robots.'

New Gemini Robotics 1.5 models will enable robots to better reason, plan ahead, use digital tools like Search, and transfer learning from one kind of robot to another. Our next big step towards general-purpose robots that are truly helpful — you can see how the robot reasons as… pic.twitter.com/kw3HtbF6Dd

— Sundar Pichai (@sundarpichai) September 25, 2025

To use Gemini Robotics 1.5 and Gemini Robotics-ER 1.5, you must register for the tester program.

Gemini Robotics - Google DeepMind

https://deepmind.google/models/gemini-robotics/gemini-robotics/

Gemini Robotics-ER - Google DeepMind

https://deepmind.google/models/gemini-robotics/gemini-robotics-er/
