Google releases Gemini Robotics, an AI model that lets robots perform tasks from verbal instructions alone

Google has announced Gemini Robotics, an AI model built on Gemini 2.0 that adds the ability to output physical actions, enabling it to control robots. The company also announced Gemini Robotics-ER, a model with advanced spatial understanding capabilities.

Gemini Robotics brings AI into the physical world - Google DeepMind

https://deepmind.google/discover/blog/gemini-robotics-brings-ai-into-the-physical-world/

Gemini Robotics: Bringing AI into the Physical World (PDF file)

https://storage.googleapis.com/deepmind-media/gemini-robotics/gemini_robotics_report.pdf

Previous models in the Gemini family accepted multimodal inputs such as text, images, audio, and video, but their output was limited to the digital domain. Gemini Robotics adds a new output modality, physical action, which allows the model to control a robot directly.

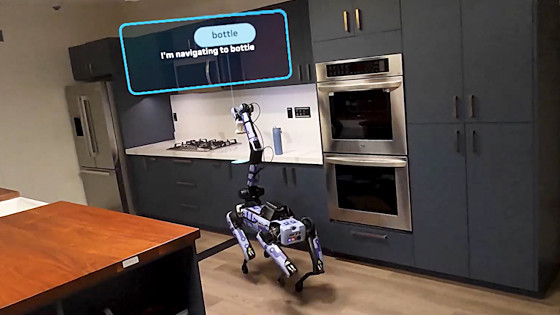

Google categorizes Gemini Robotics as a 'vision-language-action (VLA) model' and says it will be 'able to perform a wide range of real-world tasks.'

You can see how Gemini Robotics actually performs tasks in the following video.

Gemini Robotics: Bringing AI to the physical world - YouTube

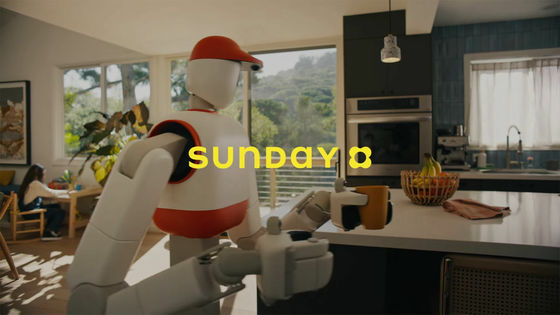

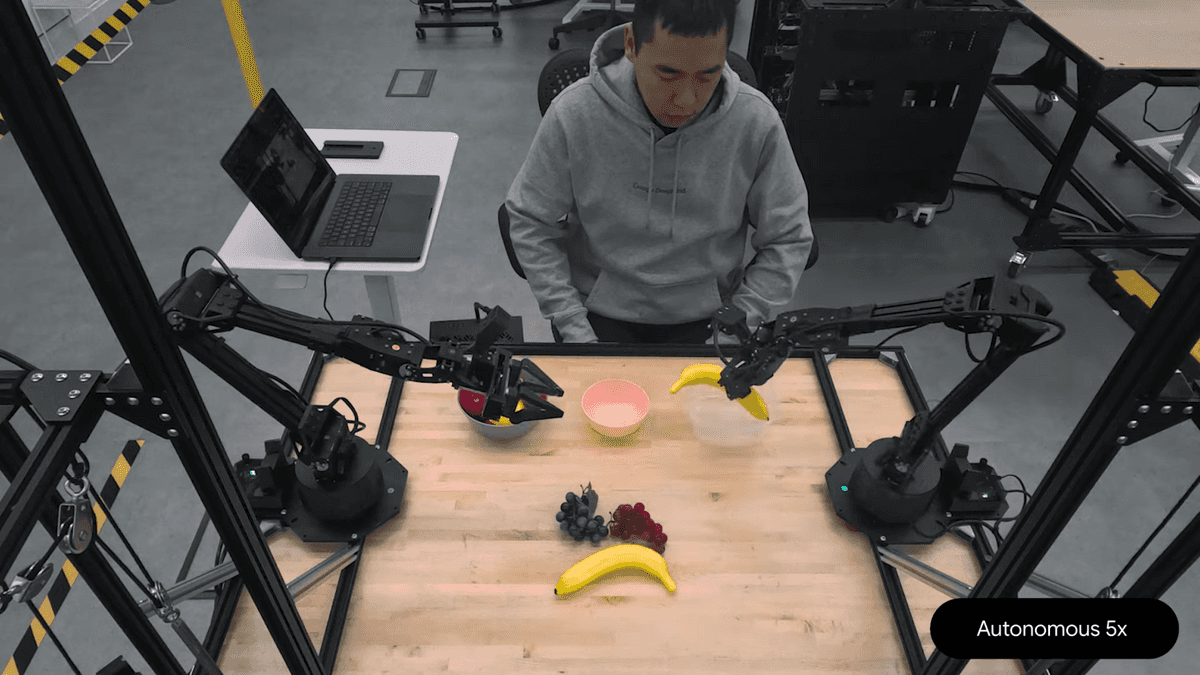

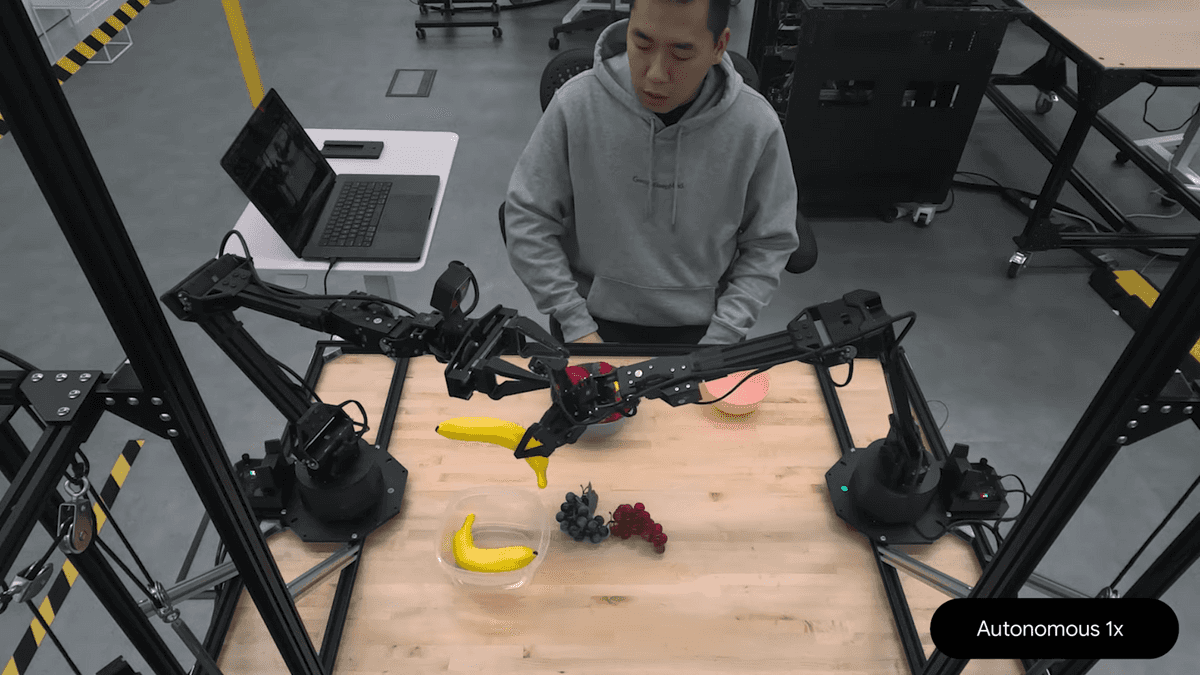

When the operator verbally instructs the robot to 'put the bananas in the transparent container,' the robot follows the instructions.

Even when the operator tries to trip it up by moving the container partway through, the robot responds immediately.

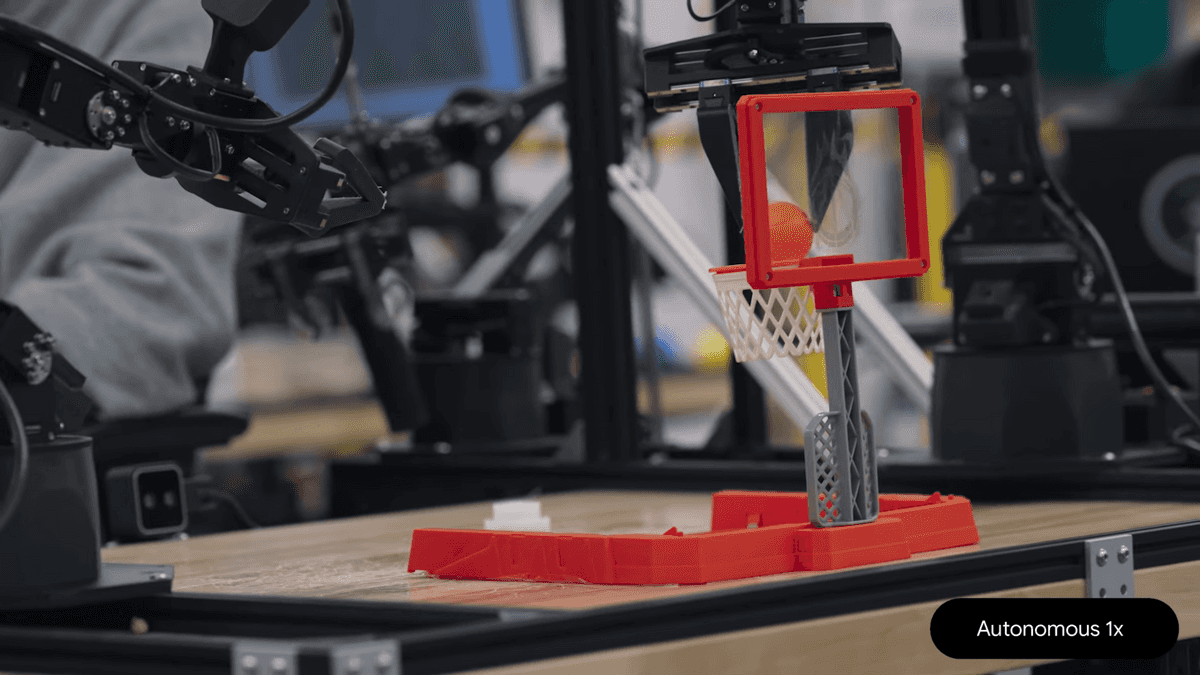

Thanks to the world knowledge of the original Gemini 2.0 model, it can follow instructions that never appeared in training, such as 'pick up the basketball and dunk it.'

Gemini Robotics is said to be good at adapting to new objects, diverse instructions, and new environments, and Google said it 'performs on average more than twice as well on comprehensive generalization benchmarks compared to other state-of-the-art vision-language-action models.'

In addition, as the banana task showed when the robot completed the job even after the container was moved, the robot continuously monitors its surroundings, detects changes in the environment or in its instructions, and adjusts its behavior accordingly. This ability to adapt matters in the real world, where unexpected events such as an object slipping out of the gripper or someone moving something are commonplace.
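The idea of re-observing the scene at every step can be illustrated with a toy closed-loop controller. This is only a minimal sketch of the general feedback-loop concept, not Google's implementation: the names `Point`, `step_toward`, `run_closed_loop`, and `moving_container` are hypothetical, and the "robot" is just a point moving on a plane toward a container that is relocated partway through.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Point:
    x: float
    y: float


def step_toward(current: Point, target: Point, max_step: float) -> Point:
    """Move at most max_step from current toward target."""
    dx, dy = target.x - current.x, target.y - current.y
    dist = (dx * dx + dy * dy) ** 0.5
    if dist <= max_step:
        # Close enough: land exactly on the target.
        return Point(target.x, target.y)
    scale = max_step / dist
    return Point(current.x + dx * scale, current.y + dy * scale)


def run_closed_loop(
    observe_target: Callable[[int], Point],
    start: Point,
    max_step: float = 1.0,
    max_iters: int = 100,
) -> List[Point]:
    """Re-observe the target on every iteration instead of planning once."""
    position = start
    trajectory = [position]
    for t in range(max_iters):
        target = observe_target(t)  # fresh observation each step
        if position == target:
            break
        position = step_toward(position, target, max_step)
        trajectory.append(position)
    return trajectory


def moving_container(t: int) -> Point:
    """Hypothetical scene: the container is moved after the third step."""
    return Point(5.0, 0.0) if t < 3 else Point(5.0, 4.0)


path = run_closed_loop(moving_container, Point(0.0, 0.0))
final = path[-1]
print(final)
```

Because the target is observed again on each iteration, the simulated gripper changes course as soon as the container moves and still ends at the new location. A controller that planned once from the first observation would instead head for the old position.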

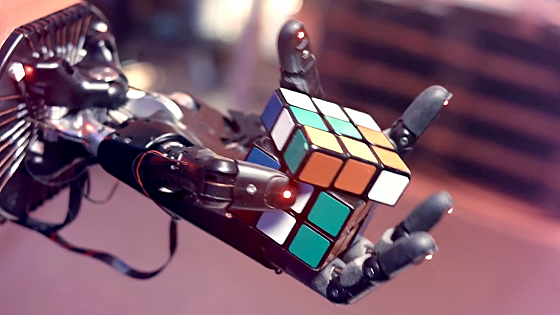

The model can also perform actions that require fine dexterity. The following video shows it folding origami.

Gemini Robotics: Dexterous skills - YouTube

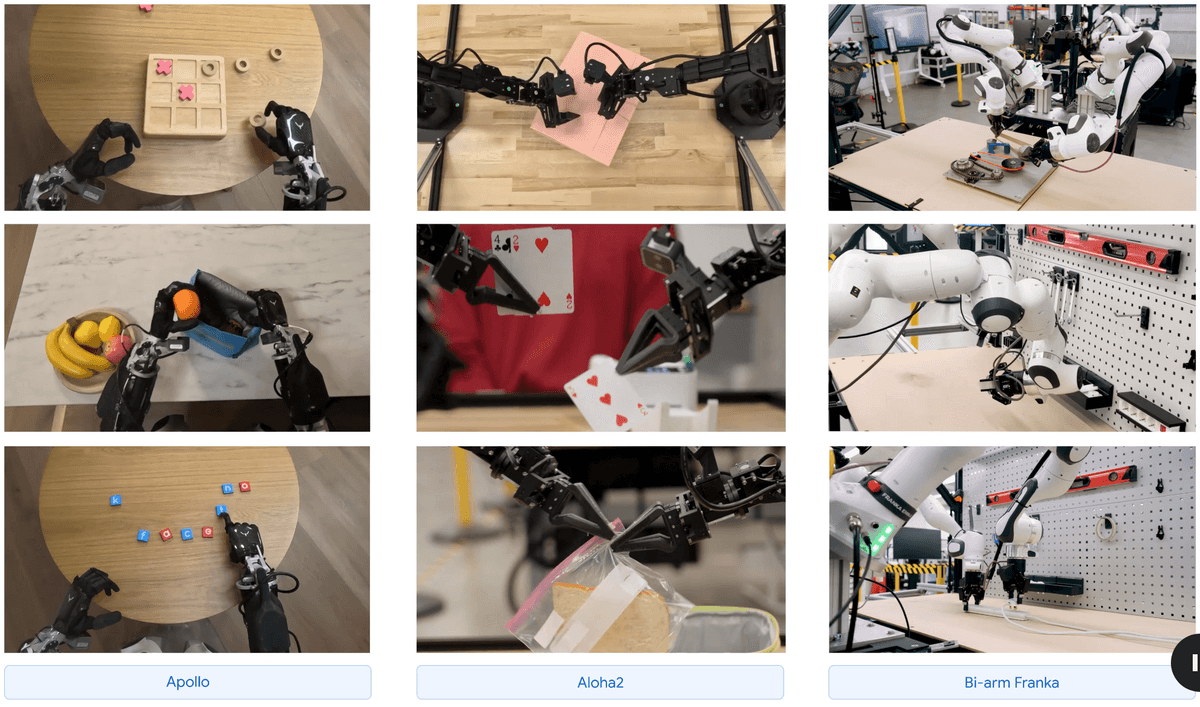

Most of the training was done on the ALOHA 2 platform, but the model could also complete tasks on other robots such as Apollo and a bi-arm Franka setup.

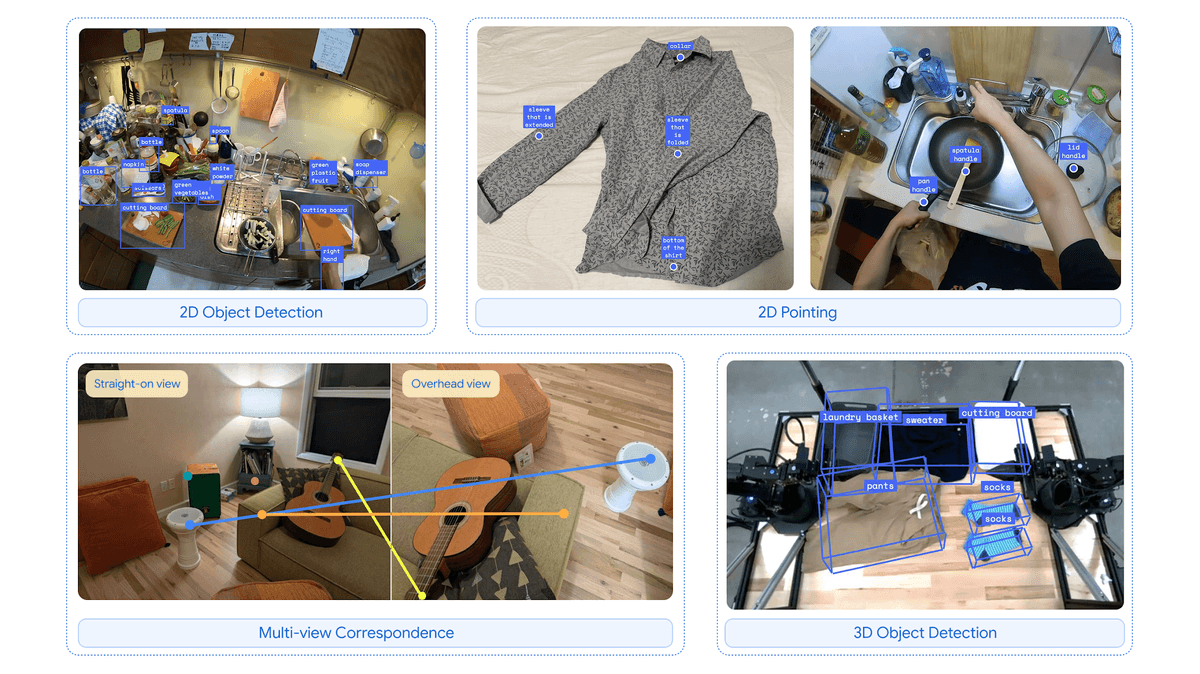

Alongside Gemini Robotics, Google released 'Gemini Robotics-ER', an advanced vision-language model. Compared with Gemini 2.0, Gemini Robotics-ER significantly improves capabilities such as pointing and 3D detection, and it can work out how to grasp a mug properly just by looking at it.

Google also says it is paying attention to safety in robot development, and that it will continue to develop the AI in collaboration with trusted testers such as Apptronik, Agile Robots, Agility Robotics, Boston Dynamics, and Enchanted Tools.

・Continued

Google announces 'Gemini Robotics On-Device,' a high-performance robot AI model that operates locally without an internet connection - GIGAZINE
