DeepSeek announces that it trained the reasoning model 'R1' for just 44 million yen, using 512 NVIDIA H800 chips for 80 hours

In January 2025, Chinese AI startup DeepSeek released its powerful reasoning model, DeepSeek R1.

Secrets of DeepSeek AI model revealed in landmark paper

https://www.nature.com/articles/d41586-025-03015-6

China's DeepSeek says its hit AI model cost just $294,000 to train | Reuters

https://www.reuters.com/world/china/chinas-deepseek-says-its-hit-ai-model-cost-just-294000-train-2025-09-18/

DeepSeek boosts AI 'reasoning' using trial-and-error • The Register

https://www.theregister.com/2025/09/18/chinas_deepseek_ai_reasoning_research/

DeepSeek says AI model cost just $294K to train: report

https://www.proactiveinvestors.com/companies/news/1078747/deepseek-says-ai-model-cost-just-294k-to-train-report-1078747.html

DeepSeek R1 is an AI model designed to perform well on 'reasoning' tasks such as mathematics and coding. It attracted significant attention for being developed at a lower cost than competing AI models from American technology companies. DeepSeek R1 is an open-weight model, meaning anyone can download it. It is one of the most popular AI models on the AI community platform Hugging Face, having been downloaded more than 10.9 million times at the time of writing.

How did DeepSeek surpass OpenAI's O1 at 3% the cost? - GIGAZINE

On September 17, 2025, DeepSeek published a peer-reviewed paper in the scientific journal Nature about its reasoning model, DeepSeek R1. The paper describes how DeepSeek enhanced a conventional large language model (LLM) to handle reasoning tasks. The supplementary material also revealed for the first time the training cost of DeepSeek R1: just $294,000 (approximately 44 million yen).

DeepSeek spent about $6 million (approximately 890 million yen) developing the LLM that underpins DeepSeek R1, which is still much cheaper than the AI development costs of its competitors. DeepSeek also disclosed the AI chips used to train DeepSeek R1: 'DeepSeek R1 was trained for a total of 80 hours on a cluster of 512 NVIDIA H800 chips.'
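As a rough back-of-the-envelope check using only the figures quoted above, the cluster size and duration can be converted into chip-hours, and the reported R1 training cost can be compared with the reported cost of the underlying LLM. The variable names below are illustrative, and the calculation assumes nothing beyond the numbers in this article.

```python
# Figures as reported in the article; variable names are illustrative.
NUM_CHIPS = 512                  # NVIDIA H800 chips in the cluster
TRAINING_HOURS = 80              # total training hours quoted for DeepSeek R1
R1_TRAINING_COST_USD = 294_000   # reported R1 training cost
BASE_LLM_COST_USD = 6_000_000    # reported cost of the underlying LLM

# Total compute expressed as chip-hours.
chip_hours = NUM_CHIPS * TRAINING_HOURS

# R1 training cost as a fraction of the base LLM cost.
cost_ratio = R1_TRAINING_COST_USD / BASE_LLM_COST_USD

print(f"Chip-hours: {chip_hours:,}")
print(f"R1 training cost relative to base LLM cost: {cost_ratio:.1%}")
```

On these figures, the run amounts to 40,960 chip-hours, and the reported R1 training cost is roughly 5% of the reported cost of the underlying LLM.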

With the publication of this paper, DeepSeek R1 became the first notable LLM to undergo the peer-review process. Lewis Tunstall, a machine learning engineer at Hugging Face who served as a peer reviewer for the paper, said, 'This is a very welcome precedent. Without the practice of sharing large parts of the process publicly, it's very difficult to assess the risks of these systems.'

In response to Tunstall's peer review comments, the DeepSeek development team reduced the anthropomorphic language in the model description and added technical details such as the types of training data used and the model's safety. 'The rigorous peer review process certainly verifies the validity and usefulness of AI models,' said Huan Sun, an AI researcher at Ohio State University. 'Other companies should undergo peer review as well.'

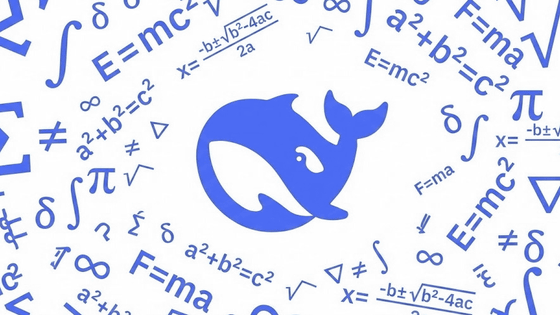

DeepSeek's main innovation is that it trained DeepSeek R1 using an automated trial-and-error method called 'pure reinforcement learning.' This process is designed so that the AI model receives a reward when it arrives at the correct answer, rather than being taught from reasoning examples chosen by humans. According to DeepSeek, this allowed the AI model to learn reasoning strategies such as 'verifying its own work rather than simply following the methods taught by humans.'
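The idea of rewarding only the final outcome can be illustrated with a minimal sketch. The function `outcome_reward` and its matching rule (trim whitespace, ignore case) are hypothetical and chosen for illustration; they are not DeepSeek's actual reward implementation.

```python
def outcome_reward(model_answer: str, reference_answer: str) -> float:
    """Return 1.0 if the final answer matches the reference, else 0.0.

    Hypothetical rule for illustration: answers are compared after
    trimming whitespace and lowercasing. No human-written reasoning
    steps are involved; only the outcome is scored.
    """
    def normalize(text: str) -> str:
        return text.strip().lower()

    return 1.0 if normalize(model_answer) == normalize(reference_answer) else 0.0


# Three attempts at a question whose reference answer is "42".
attempts = ["42", " 42 ", "41"]
rewards = [outcome_reward(attempt, "42") for attempt in attempts]
print(rewards)
```

Because the reward depends only on whether the answer is correct, the model is free to discover whatever intermediate strategy leads there.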

To improve training efficiency, DeepSeek uses a technique called 'Group Relative Policy Optimization (GRPO),' in which the model scores each of its own attempts using an estimate based on a group of attempts at the same problem, rather than relying on a separate algorithm to evaluate them.
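GRPO is commonly described as sampling several attempts at the same prompt and comparing each attempt's reward with the group's average, instead of with a score from a separately trained model. The sketch below illustrates only that normalization step, under the assumption that rewards are standardized by the group's mean and standard deviation. The function name, the use of the population standard deviation, and the `eps` term are illustrative choices, and the policy update itself is omitted.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Score each attempt relative to the other attempts in its group.

    Assumption for illustration: advantage = (reward - group mean) /
    (group standard deviation + eps). The eps term avoids division by
    zero when every attempt in the group receives the same reward.
    """
    group_mean = mean(rewards)
    group_std = pstdev(rewards)
    return [(reward - group_mean) / (group_std + eps) for reward in rewards]


# Rewards for four sampled attempts at one prompt (1.0 = correct answer).
group_rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(group_rewards)
print(advantages)
```

Attempts that beat the group average receive a positive score and the rest a negative one, so no separate evaluator is needed to tell the model which attempts to reinforce.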

Sun said of DeepSeek R1, 'It has had a significant impact among AI researchers. In 2025, almost all research applying reinforcement learning to LLMs may have been influenced in some way by DeepSeek R1.'
