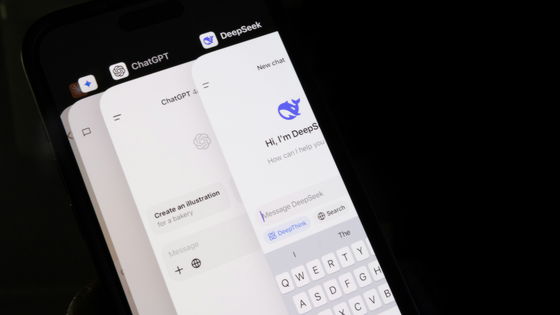

DeepSeek may have been 'distilling' OpenAI data to develop AI, OpenAI says it has 'evidence'


Microsoft Probing If DeepSeek-Linked Group Improperly Obtained OpenAI Data - Bloomberg

https://www.bloomberg.com/news/articles/2025-01-29/microsoft-probing-if-deepseek-linked-group-improperly-obtained-openai-data

OpenAI says it has evidence China's DeepSeek used its model to train competitor

https://www.ft.com/content/a0dfedd1-5255-4fa9-8ccc-1fe01de87ea6

OpenAI told the Financial Times that it had found some evidence that DeepSeek used 'distillation,' a technique in which developers use the output of a larger, more capable AI model to train a smaller one that achieves similar results on specific tasks at a much lower cost.
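As a hedged illustration of the general idea, here is the classic soft-target formulation of distillation from the research literature. It is not DeepSeek's or OpenAI's method, which the reports do not describe. The smaller 'student' model is trained to match the larger 'teacher' model's temperature-softened output distribution. This sketch assumes access to the teacher's raw scores (logits). A developer calling a commercial API usually sees only generated text, and in that case distillation amounts to fine-tuning the student on the teacher's responses. The function names, temperature, learning rate and toy numbers are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities, softened by a temperature > 1."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2


def train_student(teacher_logits, student_logits, temperature=2.0, lr=0.5, steps=200):
    """Toy gradient descent that pulls the student's outputs toward the teacher's.

    Here the 'student' is just a vector of logits (a hypothetical stand-in for a
    model's output on one input). The gradient of T^2 * KL(p_t || p_s) with
    respect to the student logits is T * (p_s - p_t).
    """
    z = list(student_logits)
    p_t = softmax(teacher_logits, temperature)  # teacher output is fixed
    for _ in range(steps):
        p_s = softmax(z, temperature)
        z = [zi - lr * temperature * (ps - pt) for zi, ps, pt in zip(z, p_s, p_t)]
    return z


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]  # hypothetical teacher logits for one input
    student = [0.0, 0.0, 0.0]  # untrained student starts out uniform
    print("loss before:", distillation_loss(student, teacher))
    trained = train_student(teacher, student)
    print("loss after: ", distillation_loss(trained, teacher))
```

The temperature spreads probability mass onto the teacher's lower-ranked answers, so the student learns how the teacher ranks alternatives and not only its top answer. That extra signal is what lets a smaller model approach a larger one's behavior cheaply.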

While distillation is a common technique in the industry, OpenAI's terms of service state that users may not 'copy' any of its services or 'use output to develop models that compete with OpenAI.' If DeepSeek had distilled the output of OpenAI's models to build its own AI models, it would be violating those terms of service.

'The issue is when people take it off the platform to make their own models for their own purposes,' said a person close to OpenAI. OpenAI declined to comment further or to provide details of its evidence regarding DeepSeek's alleged distillation.

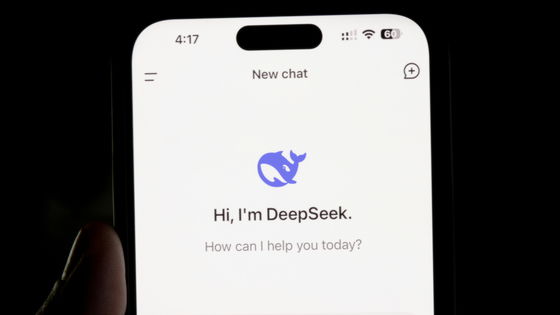

DeepSeek's reasoning model 'DeepSeek-R1' has surprised not only Silicon Valley investors and technology companies but also the broader market. R1's training cost is reportedly about 3% of that of OpenAI's reasoning model 'o1,' yet it achieves performance comparable to leading American AI models.

Following R1's stunning performance, shares of NVIDIA, which had soared on demand for AI chips, fell as much as 17% at one point.

China's AI 'DeepSeek' shock causes panic selling of tech stocks, wiping 91 trillion yen off NVIDIA's market capitalization, more than double the previous record loss - GIGAZINE

OpenAI and its partner Microsoft investigated accounts believed to belong to DeepSeek that used OpenAI's API in 2024, and blocked their access on suspicion of distillation in violation of the terms of use. Microsoft declined to comment, and OpenAI did not respond to a request for comment on the matter. DeepSeek, which is on its Lunar New Year holiday, also did not respond to a request for comment.

Responding to the allegations that DeepSeek distilled OpenAI's models, David Sacks, who was appointed to lead AI and cryptocurrency policy in the Trump administration, said it was 'possible' that intellectual property theft had occurred. 'There's a technique in AI called distillation, where one model learns from another model, so it can siphon knowledge from the parent model,' Sacks told Fox News.

DeepSeek says it cost only $5.6 million to train 'DeepSeek-V3,' which has 671 billion parameters, using 2,048 NVIDIA H800 chips. According to some experts, V3 produces responses that suggest it was trained on outputs from OpenAI's GPT-4.

Industry insiders say it is common for AI labs in China and the US to use outputs from companies such as OpenAI, which have invested in hiring people to teach their AI models to produce more human-like responses. That work is expensive and labor-intensive, and one insider said smaller companies often piggyback on it.

'It's pretty common for startups and academics to use the output of human-aligned commercial large language models (LLMs) like ChatGPT to train other models,' said Ritwik Gupta, an AI doctoral candidate at the University of California, Berkeley. 'I wouldn't be surprised if DeepSeek were doing something similar, and if so, it would be difficult to stop this practice precisely.'

OpenAI also issued a statement saying, 'We know that China-based companies, and others, are constantly trying to distill the models of leading U.S. AI companies. It is critically important that we work closely with the U.S. government to protect the most capable models from efforts by adversaries and competitors to take U.S. technology.'
