DeepSeek quietly releases 'DeepSeek-Prover-V2', a tool specialized for mathematical inference, capable of formal proof of complex theorems

Chinese AI company DeepSeek has released ' DeepSeek-Prover-V2 ', the second generation model of Prover, an AI specialized in mathematical reasoning, on Hugging Face and GitHub. It is

deepseek-ai/DeepSeek-Prover-V2-671B · Hugging Face

https://huggingface.co/deepseek-ai/DeepSeek-Prover-V2-671B

deepseek-ai/DeepSeek-Prover-V2

https://github.com/deepseek-ai/DeepSeek-Prover-V2

DeepSeek quietly updates open-source model that handles maths proofs | South China Morning Post

https://www.scmp.com/tech/tech-trends/article/3308566/deepseek-quietly-updates-open-source-model-handles-maths-proofs

DeepSeek-Prover-V2 is a successor model to DeepSeek-Prover-V1.5, an AI specialized for mathematical inference, and is available in two types: a 7B (7 billion parameters) model based on DeepSeek-Prover-V1.5-Base and a 671B (671 billion parameters) model based on DeepSeek-V3-Base.

Although the 7B model is inferior in performance to the 671B model, it requires fewer resources for deployment and inference, and has low computational costs, making it practical. Although it is based on the previous generation model, it supports a context length of up to 32K tokens, and can handle problems that require long proofs and complex inference.

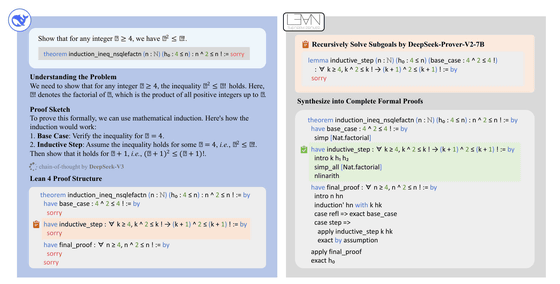

The 671B model is characterized by the fact that it is trained on data collected by a 'recursive theorem proving pipeline' based on DeepSeek-V3. In this recursive theorem proving pipeline, DeepSeek-V3 decomposes complex theorems while formalizing them with Lean 4, and the 7B model solves the decomposed logic individually. Once all are solved, the individual proofs are combined by DeepSeek-V3's informal thought chain to construct a proof of the original complex theorem. The 671B model is fine-tuned using the data generated in this process, and it is said to have both human-like reasoning abilities and rigorous formal proof abilities.

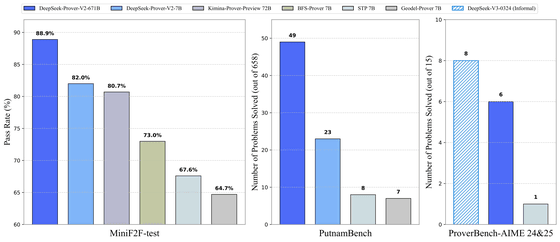

DeepSeek reports that the 671B model achieved an 88.9% pass rate in the benchmark

DeepSeek also announced a new theorem proving benchmark called ' ProverBench ', which consists of 325 problems including the AIME exam questions used to qualify for the US Mathematical Olympiad. In a performance comparison using 15 problems from the 2024 and 2025 AIME, the 671B model was able to solve six of them. Given that DeepSeek-V3 was able to solve eight of the same AIME problems, DeepSeek argued that this shows that the performance gap between formal proof and informal reasoning is greatly narrowing.

The DeepSeek-Prover-V2 671B and 7B models are publicly available on GitHub and Hugging Face, and can be used for direct inference using Hugging Face's Transformers library , allowing users to easily get started with the models without complex setup.

At the time of writing, DeepSeek has not officially announced the release of DeepSeek-Prover-V2, but the Hong Kong English daily newspaper South China Morning Post commented that the release comes amid growing expectations for the new inference model DeepSeek-R2, which is expected to be released soon. The South China Morning Post requested comment from DeepSeek, but did not receive a response.

Related Posts: