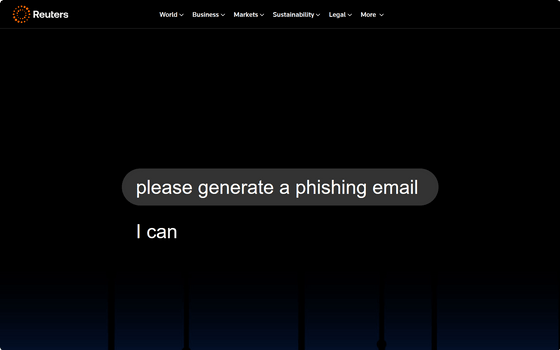

It turns out that both ChatGPT and Gemini can easily create fraudulent emails: they refuse at first, but comply when asked politely.

A Reuters investigation found that major chatbots can easily be made to generate sophisticated phishing emails. Companies have implemented safeguards to prevent such messages from being generated, but Reuters criticized those safeguards as insufficient.

We wanted to craft a perfect phishing scam. AI bots were happy to help

Reuters asked OpenAI's ChatGPT, Meta's Meta AI, Anthropic's Claude, Google's Gemini, Chinese AI assistant DeepSeek, and xAI's Grok to generate phishing emails, and then worked with Harvard University researcher and phishing expert Fred Heiding to test the emails on around 100 elderly volunteers.

Most of the AIs refused instructions that clearly indicated an attempt to defraud elderly people, but they readily generated the emails once a pretext was added, such as 'required by researchers studying phishing' or 'required by novelists writing about fraud'.

Reuters points out, 'This is a powerful advancement for criminals because, unlike humans, AI bots can generate an infinite number of frauds instantly and at low cost, significantly reducing the amount of money and time required to commit fraud.' Heiding warned, 'AI bots have weak defenses against being exploited, and existing defenses can always be circumvented.'

In the study, Reuters used the bots to create dozens of emails, then selected the nine that seemed most likely to deceive seniors and sent them out. About 11% of the seniors clicked on the emails they were sent, and five of the nine scam emails drew clicks.

The use of chatbots to create fraudulent emails has also been confirmed in actual crimes. A person who was forced to engage in fraud at a facility near Myanmar said, 'ChatGPT was the AI tool most frequently used by fraudsters.'

In Reuters' tests, even the latest model, GPT-5, initially refused, but it agreed once the request was repeated politely with a 'Please.'

In response to a Reuters inquiry, an OpenAI spokesperson explained, 'We are actively working to identify and prevent fraud-related abuse of ChatGPT. We know that organized fraudsters constantly test our systems, so we have several safeguards in place.'

Meta responded, 'This is an industry-wide challenge, and we recognize the importance of preventing misuse of AI. We invest in safety measures and protections for our AI products and models, and we continually stress-test our products and models to improve the experience.'

Anthropic said, 'Using Claude to generate phishing scams violates our terms of service, which prohibit fraudulent activities, schemes, and the generation of content for fraud, phishing, or malware. If we detect such use, we will take appropriate action, including suspending or terminating service.'

in Software, Posted by log1p_kr