Mistral AI Announces Its First Reasoning Model 'Magistral,' Specializing in Step-by-Step Reasoning for Deep Problems in Specialized Fields

Mistral AI, a French AI company, has announced its first reasoning model, Magistral. Magistral is said to offer strong domain-specific expertise, transparency, and multilingual reasoning. It is designed to think by interweaving logic, insight, and step-by-step analysis, based on the idea that human thinking is not linear.

Magistral | Mistral AI

https://mistral.ai/news/magistral

Magistral: How to Run & Fine-tune | Unsloth Documentation

https://docs.unsloth.ai/basics/magistral-how-to-run-and-fine-tune

magistral.pdf

(PDF file) https://mistral.ai/static/research/magistral.pdf

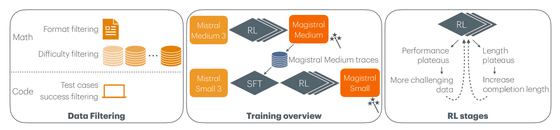

Magistral, the reasoning model developed by Mistral AI, comes in two main variants: 'Magistral Medium' and 'Magistral Small.'

Announcing Magistral, our first reasoning model designed to excel in domain-specific, transparent, and multilingual reasoning. pic.twitter.com/SwKEEtCIXh

— Mistral AI (@MistralAI) June 10, 2025

Magistral Medium, the more powerful enterprise variant, is based on Mistral Medium 3. It is distinctive in that its reasoning ability was trained with pure reinforcement learning (RL), without using training data from existing reasoning models.

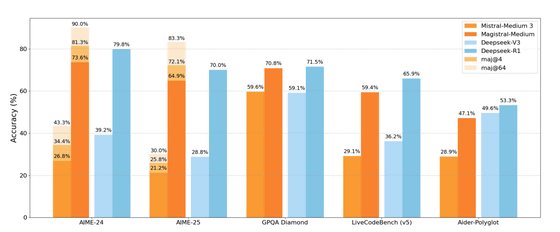

As a result, Magistral Medium scores 73.6% on the mathematics benchmark AIME 2024, rising to 90% with majority voting, in which the model generates many answers per problem (64, according to Mistral AI) and the most common one is selected.
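Majority voting as described above can be sketched in a few lines. This is a minimal illustration and not Mistral AI's evaluation code: the function name `majority_vote` and the sample answers are hypothetical, and it assumes each sampled response has already been reduced to a final answer string.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent answer among the sampled answers.

    Ties go to the answer that appeared first in the list.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(answers)
    # most_common sorts by count; equal counts keep first-seen order.
    return counts.most_common(1)[0][0]


# Hypothetical final answers from five independent samples of one problem.
samples = ["204", "204", "113", "204", "97"]
print(majority_vote(samples))
```

A single sample may land on a wrong answer, but if the correct answer is the most common one across samples, voting recovers it, which is why the voted score can exceed the single-attempt score.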

In addition, Magistral Medium maintains strong reasoning ability in many languages, including not only English but also Japanese, Chinese, French, Spanish, German, Italian, Russian, and Arabic. Its ability to solve problems that involve images also improved, even though the reinforcement learning used only text data. The number of parameters in Magistral Medium has not been disclosed.

Magistral Small, on the other hand, is an open-source variant released to encourage community development and research. It has 24 billion parameters and is available under the Apache 2.0 license.

Magistral Small is developed differently from Medium, using a two-step process: first, supervised fine-tuning (SFT) is performed on the reasoning traces generated by Magistral Medium, which acts as a powerful teacher, and then reinforcement learning is applied.

Magistral Small also scores a high 70.7% on AIME 2024. Despite this performance, it is small enough to run locally on a personal machine (such as a PC with an RTX 4090 or a Mac with about 24GB of RAM). The model can be freely downloaded from platforms such as Hugging Face and self-deployed.
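To put the 24-billion-parameter figure next to the hardware mentioned above, here is a rough back-of-the-envelope calculation. It is a sketch under stated assumptions: it counts memory for the weights only (no KV cache, activations, or runtime overhead), and the 16-, 8-, and 4-bit precisions are illustrative choices, not settings named in the article.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for the model weights alone, in GB (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS = 24e9  # 24 billion parameters, as stated for Magistral Small

# Assumed precisions for illustration; the article does not specify one.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

Under these assumptions, 16-bit weights alone would need about 48 GB, so fitting the model on a 24GB-class machine would in practice depend on a reduced-precision (quantized) copy of the weights.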

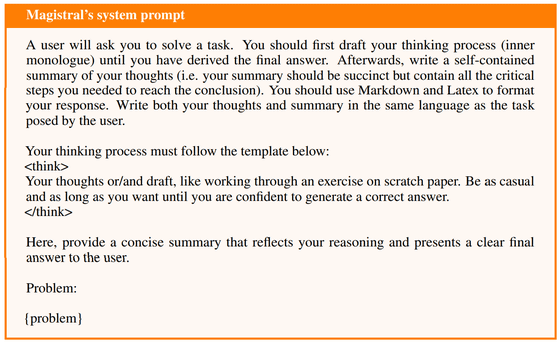

A major feature of Magistral is its 'transparency': the model writes out its thought process inside [think] tags before reaching a conclusion, so users can trace and verify the reasoning. Below is Magistral's system prompt:

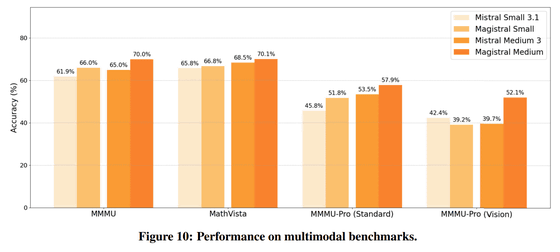
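Because the thought process is delimited by tags, an application can separate the reasoning trace from the final answer. The following is a minimal sketch rather than an official client: `split_reasoning` is a hypothetical helper, the sample response is made up, and the default delimiters `<think>` and `</think>` are an assumption. The exact tag spelling depends on the model release and chat template, so both delimiters are parameters.

```python
def split_reasoning(text, open_tag="<think>", close_tag="</think>"):
    """Split a model response into (reasoning, answer).

    Returns (None, text) when no complete tagged span is found.
    """
    start = text.find(open_tag)
    if start == -1:
        return None, text.strip()
    end = text.find(close_tag, start + len(open_tag))
    if end == -1:
        return None, text.strip()
    reasoning = text[start + len(open_tag):end].strip()
    # Everything outside the tagged span is treated as the answer.
    answer = (text[:start] + text[end + len(close_tag):]).strip()
    return reasoning, answer


# Hypothetical response text, not real model output.
response = "<think>2 + 2 is 4, then double it to get 8.</think>The answer is 8."
reasoning, answer = split_reasoning(response)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Keeping the two parts separate lets an interface show the answer by default while still exposing the trace for anyone who wants to check the reasoning.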

Interestingly, despite being trained only on text data, Magistral's multimodal ability to understand and reason about images reportedly improved rather than degraded.

Magistral Medium can be tried in preview through Mistral AI's chat service 'Le Chat' and through the API, and will later be available on major cloud platforms such as Amazon SageMaker, IBM WatsonX, Azure AI, and Google Cloud Marketplace. Magistral Small is released as an open-source model under the Apache 2.0 license, and anyone can download it from Hugging Face.

mistralai/Magistral-Small-2506 · Hugging Face

https://huggingface.co/mistralai/Magistral-Small-2506
