Why is it difficult even for AI to detect 'AI-written text'?

Advances in generative AI have made it possible for anyone to produce high-quality text, but there are still situations where people want to know whether a text was AI-generated, such as a teacher grading student papers or a shopper consulting product reviews before a purchase. Professor Tewari explains why it is so hard to tell whether a piece of text was written by AI, even for AI.

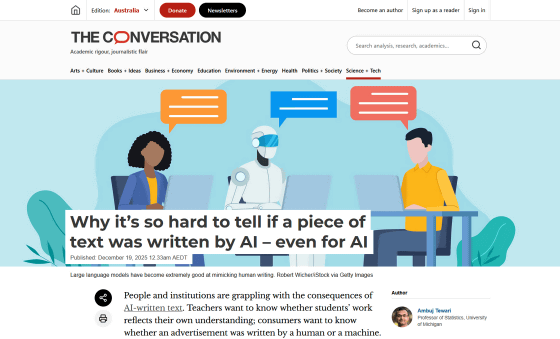

Why it's so hard to tell if a piece of text was written by AI – even for AI

https://theconversation.com/why-its-so-hard-to-tell-if-a-piece-of-text-was-written-by-ai-even-for-ai-265181

In recent years, educational institutions have introduced rules prohibiting the use of AI to write reports. However, enforcing these rules requires detecting whether a particular text was generated by AI. Previous research has shown that frequent users of AI writing tools can more accurately distinguish AI-generated text, and humans can even outperform automated tools in controlled environments.

However, only a limited number of people have this skill, and individual judgments are inconsistent. Institutions that need consistency at scale therefore rely on automated detectors of AI-generated text.

AI-generated text detectors analyze text to determine whether it was generated by AI, and calculate a score indicating the likelihood that the text was generated by AI. The typical workflow is for a human decision-maker to make a judgment based on this score, which is usually expressed as a probability.
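
The workflow described above, where the score informs a human decision rather than replacing it, can be sketched roughly as follows. The function name `triage` and both threshold values are hypothetical choices made for illustration; they do not come from any particular detector.

```python
def triage(prob_ai: float,
           review_threshold: float = 0.5,
           strong_threshold: float = 0.9) -> str:
    """Map a detector score to a suggested next step for a human reviewer.

    The thresholds are illustrative assumptions, not recommended values.
    The function never issues a verdict; it only routes the text.
    """
    if not 0.0 <= prob_ai <= 1.0:
        raise ValueError("prob_ai must be a probability between 0 and 1")
    if prob_ai >= strong_threshold:
        return "strong signal: human review recommended"
    if prob_ai >= review_threshold:
        return "weak signal: human review optional"
    return "no signal: no action"


if __name__ == "__main__":
    for score in (0.12, 0.63, 0.97):
        print(score, "->", triage(score))
```

Keeping the output as a routing suggestion reflects the point that the final judgment stays with the human decision-maker.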

Tewari explains that there are three main types of AI-generated text detectors:

◆1: Method using trained AI

The first approach involves preparing a labeled dataset of human-written and AI-generated text, which can then be used to train an AI model to distinguish between them. This effectively treats AI-generated text detection as a classification problem similar to spam filtering. The dataset can include outputs from a wide range of AI tools, so it works without knowing which AI tool generated the text.
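
As a minimal sketch of this classification framing, the example below trains a small naive Bayes classifier, the same family of technique long used for spam filtering, on a handful of labeled sentences. The class name `NaiveBayesDetector` and the four training sentences are invented for illustration; a real detector would use a far larger dataset and, most likely, a much stronger model.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    return text.lower().split()


class NaiveBayesDetector:
    """Toy word-count naive Bayes classifier with two labels: 'human' and 'ai'."""

    def __init__(self) -> None:
        self.word_counts = {"human": Counter(), "ai": Counter()}
        self.doc_counts = Counter()
        self.vocab = set()

    def fit(self, texts: list[str], labels: list[str]) -> None:
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)

    def _log_score(self, tokens: list[str], label: str) -> float:
        total_words = sum(self.word_counts[label].values())
        # Log prior: fraction of training documents with this label.
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for tok in tokens:
            # Add-one (Laplace) smoothing so unseen words do not zero the score.
            count = self.word_counts[label][tok] + 1
            score += math.log(count / (total_words + len(self.vocab)))
        return score

    def prob_ai(self, text: str) -> float:
        tokens = tokenize(text)
        log_ai = self._log_score(tokens, "ai")
        log_human = self._log_score(tokens, "human")
        # Normalize the two log scores into a probability, stably.
        m = max(log_ai, log_human)
        p_ai, p_human = math.exp(log_ai - m), math.exp(log_human - m)
        return p_ai / (p_ai + p_human)


# Hypothetical labeled data, invented purely for this sketch.
texts = [
    "lol i totally forgot the deadline again",
    "honestly the movie was kinda boring tbh",
    "in conclusion it is important to note that the deadline matters",
    "overall it is important to consider multiple perspectives",
]
labels = ["human", "human", "ai", "ai"]

detector = NaiveBayesDetector()
detector.fit(texts, labels)

if __name__ == "__main__":
    print(detector.prob_ai("it is important to note"))
    print(detector.prob_ai("lol kinda boring tbh"))
```

Note that the classifier never needs to know which tool produced a text; it only needs labeled examples, which is why this approach works across tools as long as the training data covers them.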

◆2: Method using statistical signals of AI-generated text

The second approach is to assess whether text is AI-generated by looking for statistical signals related to how a particular AI model generates text. For example, if an AI model generates a particular sequence of words with an abnormally high probability, then text containing this sequence of words is likely to have been generated by that AI model. Implementing this approach requires access to the AI tool in question so that it can be analyzed.
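
To illustrate the idea, the sketch below scores a text by its average log-probability under a language model. Because a real generative AI model cannot be bundled into a short example, a toy bigram model built from a few invented sentences stands in for "the AI model in question"; the function names `train_bigram` and `mean_log_prob` and the sample sentences are all assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]):
    """Count word pairs in the corpus; '<s>' marks the start of a sentence."""
    counts = defaultdict(Counter)
    vocab = set()
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split()
        vocab.update(tokens)
        for prev, cur in zip(tokens, tokens[1:]):
            counts[prev][cur] += 1
    return counts, vocab


def mean_log_prob(text: str, counts, vocab) -> float:
    """Average per-token log-probability of `text` under the bigram model.

    Values closer to zero mean the model finds the text more predictable,
    which is the kind of statistical signal this approach looks for.
    """
    tokens = ["<s>"] + text.lower().split()
    if len(tokens) < 2:
        raise ValueError("text must contain at least one token")
    total = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        # Add-one smoothing; the extra +1 reserves mass for unknown words.
        numerator = counts[prev][cur] + 1
        denominator = sum(counts[prev].values()) + len(vocab) + 1
        total += math.log(numerator / denominator)
    return total / (len(tokens) - 1)


# Stand-in for text sampled from the model being analyzed (invented examples).
model_outputs = [
    "the results demonstrate a significant improvement",
    "the results demonstrate a clear trend",
]
counts, vocab = train_bigram(model_outputs)

if __name__ == "__main__":
    print(mean_log_prob("the results demonstrate a significant improvement", counts, vocab))
    print(mean_log_prob("my cat knocked over the lamp", counts, vocab))
```

The sketch also makes the access requirement concrete: without the model's probabilities (here, the `counts` table), the score cannot be computed at all.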

◆3: Method of verifying 'AI watermarks' embedded in text

If the text was generated by an AI tool that embeds an 'AI watermark' in its output, a private key provided by the AI vendor can be used to check whether the text contains that watermark. This approach relies on information beyond the text itself, so cooperation from the AI vendor is essential.
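
The article does not say how any vendor's watermark works. As one possible illustration, the sketch below follows the general shape of "green list" watermarking schemes described in the research literature: a key-dependent hash marks roughly half of all candidate words as "green" at each step, the generator prefers green words, and the verifier counts how many green words appear. The key, the vocabulary, the function names, and the use of a shared secret with HMAC are all assumptions made for this example.

```python
import hashlib
import hmac
import math

GAMMA = 0.5  # assumed fraction of candidate words marked "green" at each step


def is_green(key: bytes, prev_token: str, token: str) -> bool:
    """Keyed pseudo-random test: is `token` green given the previous token?"""
    msg = f"{prev_token}\x00{token}".encode("utf-8")
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    return digest[0] < 256 * GAMMA


def watermark_z_score(key: bytes, tokens: list[str]) -> float:
    """How far the green count sits above what unwatermarked text would give."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        raise ValueError("need at least two tokens")
    green = sum(is_green(key, prev, cur) for prev, cur in pairs)
    n = len(pairs)
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


def toy_watermarked_tokens(key: bytes, vocab: list[str], length: int) -> list[str]:
    """Toy 'generator' that always picks the first green word it finds."""
    tokens = [vocab[0]]
    while len(tokens) < length:
        prev = tokens[-1]
        # Fall back to vocab[0] in the unlikely case that no word is green.
        nxt = next((w for w in vocab if is_green(key, prev, w)), vocab[0])
        tokens.append(nxt)
    return tokens


if __name__ == "__main__":
    key = b"hypothetical-vendor-key"
    vocab = [f"w{i}" for i in range(26)]
    tokens = toy_watermarked_tokens(key, vocab, 21)
    print(watermark_z_score(key, tokens))
```

If every one of the 20 word pairs is green, the z-score equals the square root of 20, about 4.47, far above what chance would produce. A verifier without the key cannot run `is_green` at all, which is why the vendor's cooperation is indispensable.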

Existing AI-generated text detectors each have their own limitations, making it difficult to say with certainty which method is superior. For example, training-based detectors can suffer significant drops in accuracy when the text produced by a new AI model differs significantly from the corpus the detector was trained on.

Additionally, statistical signal detectors rely on assumptions about how specific AI models generate text, or access to the probability distributions of those models, and are not effective when the AI models are custom-built, frequently updated, or unknown.

While AI watermarking shifts the problem from 'detection' to 'verification,' it cannot be implemented without the cooperation of AI vendors. It also becomes useless if users can somehow disable the AI watermarking.

'From a broader perspective, AI-generated text detectors are part of an escalating arms race. To be effective, detectors need to be publicly available, but that transparency allows the AI to circumvent them,' Tewari said. 'Ultimately, we have to learn to live with the fact that tools like detectors are never perfect.'

in AI, Posted by log1h_ik