OpenAI says 'more than 1 million people talk about suicide on ChatGPT every week'

On October 27, 2025, local time, OpenAI announced that it would strengthen ChatGPT's response to sensitive conversations about suicide and self-harm. According to OpenAI, before the strengthened response, more than 1 million people a week were consulting ChatGPT about suicide.

Strengthening ChatGPT's responses in sensitive conversations | OpenAI

OpenAI says over a million people talk to ChatGPT about suicide weekly | TechCrunch

https://techcrunch.com/2025/10/27/openai-says-over-a-million-people-talk-to-chatgpt-about-suicide-weekly/

OpenAI has worked with clinically trained mental health professionals to train its AI model to better recognize distress, de-escalate conversations, and guide users to professional care when appropriate. This new AI model has just been adopted as the default model for ChatGPT. OpenAI has also expanded access to crisis hotlines, rerouted sensitive conversations from other AI models to safer AI models, and added gentle reminders to take breaks during long sessions.

According to OpenAI, ChatGPT aims to provide a supportive space where people can process their feelings, and to guide them to reach out to friends, family, or mental health professionals when appropriate. The safety improvements in the latest model update focus on the following areas:

1. Mental health concerns, such as psychosis or mania

2. Self-harm and suicide

3. Emotional dependency on AI

OpenAI also explains, 'In addition to the long-standing baseline safety indicators for suicide and self-harm, we will now add emotional dependency and non-suicidal mental health emergencies to our standard baseline safety tests for future AI model releases.'

OpenAI listed the following five steps it takes to improve ChatGPT's responses:

・Define the problem

Map out different types of potential problems.

・Start measurement

Use data such as real-world conversations and user research to understand where and how risks arise.

・Verify your approach

Work with external mental health and safety experts to review OpenAI's definitions and policies.

・Reduce risk

Post-train your models and update your product interventions to reduce unsafe outcomes.

・Continue to measure and iterate

Verify that the mitigations have improved safety, and iterate as necessary.

As part of this process, OpenAI builds and refines detailed guides called 'taxonomies,' which describe the characteristics of sensitive conversations along with ideal and undesirable AI model behaviors. These taxonomies are used to teach the AI model to respond more appropriately and to track its performance before and after deployment. The result, according to OpenAI, is AI models that respond more reliably and appropriately to users who show signs of psychosis, mania, suicidal thoughts or self-harm, or unhealthy emotional attachment to the model.

According to OpenAI, mental health symptoms and emotional distress are universally present in human society, and as ChatGPT's user base grows, some of its conversations inevitably involve them. However, conversations about mental health issues that raise safety concerns, such as psychosis, mania, or suicidal ideation, are extremely rare, so even small differences in measurement methodology can significantly change the reported numbers.

Because relevant conversations are so rare, OpenAI does not rely only on measuring real-world ChatGPT usage; it also runs structured tests before deployment that focus on particularly difficult or high-risk scenarios. These evaluations are deliberately hard enough that the model still does not perform perfectly: the examples are selected adversarially because they are more likely to elicit undesirable responses. This lets OpenAI focus on difficult cases rather than typical ones, score responses against multiple safety criteria, and measure progress more accurately. The evaluation results OpenAI reports are designed not to 'saturate' at near-perfect performance, so their error rates are not representative of average production traffic.

To further strengthen ChatGPT's AI model safety measures and understand how users are using ChatGPT, OpenAI defined several areas of interest and quantified the AI model's behavior at scale. The results showed significant improvements in AI model behavior across production traffic, automated evaluations, and evaluations by independent mental health clinicians. Across various mental health-related domains, the frequency with which the AI model returned responses that did not fully conform to the desired behavior according to classification criteria was estimated to have decreased by 65% to 80%.

Building on its existing suicide and self-harm prevention work, OpenAI has improved the AI model to better recognize when users express thoughts of suicide or self-harm, or show signs that suggest suicidal thoughts. Because these conversations are extremely rare, detecting conversations that may signal self-harm or suicide remains an area of ongoing research, and OpenAI continues working to improve the model.

OpenAI's initial analysis found that approximately 0.15% of active users in a given week engaged in conversations that explicitly indicated possible suicide plans or intentions. TechCrunch reported that 'ChatGPT has over 800 million weekly active users, which translates to over 1 million users posting suicide-related messages per week.' Furthermore, it's estimated that 0.05% of messages contain explicit or implicit suicidal thoughts or intentions.
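The arithmetic behind TechCrunch's 'over 1 million' figure can be checked with a short Python sketch. It simply multiplies the two figures quoted above; treating 800 million as an exact count is an assumption (the reported figure is 'over 800 million'), and the function name `affected_users` is hypothetical.

```python
# Back-of-envelope check of the "over 1 million users per week" figure.
# Assumption: 800 million is used as an exact count, although the
# reported figure is "over 800 million", so the result is a lower bound.
weekly_active_users = 800_000_000        # TechCrunch: "over 800 million"
share_with_suicide_indicators = 0.0015   # OpenAI: about 0.15% of weekly active users


def affected_users(users: int, share: float) -> int:
    """Hypothetical helper: estimated number of users matching a given share."""
    return round(users * share)


estimate = affected_users(weekly_active_users, share_with_suicide_indicators)
print(f"{estimate:,}")  # prints 1,200,000
```

Since both inputs are approximate, the result only supports the order of magnitude ('over 1 million'), not a precise count.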

Survey results show that ChatGPT and Gemini are better at answering 'high-risk questions about suicide' - GIGAZINE

In August 2025, OpenAI was sued by the parents of a teenage boy who died by suicide, alleging that ChatGPT contributed to their son's death. Since the lawsuit, AI developers, including OpenAI, have been scrambling to address concerns that AI could have a negative impact on users with mental health issues. The attorneys general of California and Delaware have also warned OpenAI that it needs to protect young people who use its products.

OpenAI sued over ChatGPT's alleged role in teenage suicide, OpenAI admits ChatGPT's safety measures don't work for long conversations - GIGAZINE

In mid-October, OpenAI CEO Sam Altman posted to X, 'We designed ChatGPT to be quite restrictive in response to mental health concerns. We recognize that this made ChatGPT less useful and enjoyable for many users without mental health issues, but given the seriousness of the issue, we wanted to do this right. Now we have the ability to mitigate serious mental health concerns and new tools to safely relax the restrictions in most cases.' However, he did not disclose what specific fixes had been made, and this announcement appears to reveal those details.

We made ChatGPT pretty restrictive to make sure we were being careful with mental health issues. We realize this made it less useful/enjoyable to many users who had no mental health problems, but given the seriousness of the issue we wanted to get this right.

— Sam Altman (@sama) October 14, 2025

Now that we have…

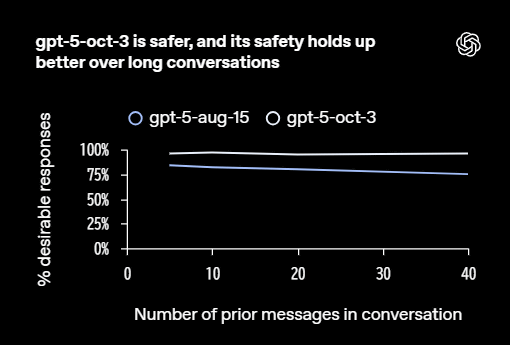

OpenAI claims that the updated GPT-5 (gpt-5-oct-3) returns approximately 65% more 'desirable responses' to mental health issues than the previous version. Furthermore, in a test of responses to a conversation about suicide, the new GPT-5 conformed to OpenAI's rules for desirable behavior 91% of the time, significantly higher than the 77% figure for the previous GPT-5 model (gpt-5-aug-15).
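To put the two automated evaluation figures side by side, the following Python sketch converts the 77% and 91% compliance rates into the share of responses that miss the desired behavior (23% and 9%) and the relative drop between them. This is illustrative arithmetic on the quoted numbers, not a metric OpenAI reports; the helper name `noncompliance_reduction` is hypothetical, and because these evaluations use adversarially selected examples, the result should not be read as a production error rate.

```python
def noncompliance_reduction(old_compliance: float, new_compliance: float) -> float:
    """Hypothetical helper: relative drop in the share of responses
    that do not comply with the desired behavior."""
    old_miss = 1.0 - old_compliance  # share of non-compliant responses before
    new_miss = 1.0 - new_compliance  # share of non-compliant responses after
    return (old_miss - new_miss) / old_miss


# Suicide/self-harm evaluation figures quoted in the article:
# gpt-5-aug-15 at 77%, gpt-5-oct-3 at 91%.
reduction = noncompliance_reduction(old_compliance=0.77, new_compliance=0.91)
print(f"{reduction:.1%}")  # prints 60.9%
```

In other words, on this test the share of non-compliant responses falls from 23% to 9%, a relative drop of about 61% (14/23).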

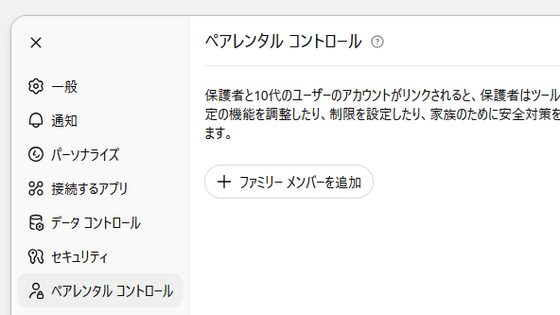

OpenAI has been working on various initiatives to ensure user safety, and at the end of September 2025, it added a parental control feature to help parents manage their children's use of AI.

ChatGPT finally adds parental control feature to help parents manage their children's AI usage - GIGAZINE


in AI, Software, Web Service, Posted by logu_ii