ChatGPT suicide lawsuit: OpenAI demands funeral attendee list

As more and more people use chat AI not only for search and task support but also as a conversation partner, research has shown that

OpenAI requested memorial attendee list in ChatGPT suicide lawsuit | TechCrunch

https://techcrunch.com/2025/10/22/openai-requested-memorial-attendee-list-in-chatgpt-suicide-lawsuit/

On August 26, 2025, Matt and Maria Raine sued OpenAI, the developer of ChatGPT, alleging that ChatGPT was responsible for the death of their son, Adam Raine. According to the lawsuit, Adam shared photos of his suicide attempts with ChatGPT multiple times, but ChatGPT neither interrupted the conversation nor initiated any emergency protocol meant to protect users with suicidal thoughts. Instead, the complaint says, ChatGPT glamorized Adam's suicide attempts and discouraged him from seeking help from his family. The Raines claim that OpenAI failed to intervene even though it had flagged 377 of Adam's messages for self-harm content.

OpenAI sued over ChatGPT's alleged role in teenage suicide, OpenAI admits ChatGPT's safety measures don't work for long conversations - GIGAZINE

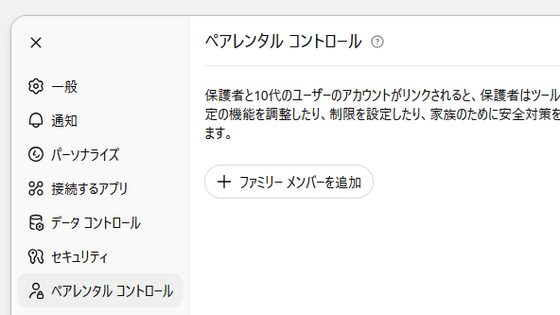

OpenAI wrote, 'ChatGPT is now being used for personal decision-making. Recent heartbreaking cases of people using ChatGPT during serious crises have placed a heavy burden on us. We believe it is important to share more information now.' The company also emphasized that ChatGPT has built-in, multi-layered safety measures for users who show signs of being at risk 'due to severe mental or emotional distress,' such as those with suicidal thoughts. For example, if someone writes that they want to harm themselves, ChatGPT is trained not to comply, but instead to acknowledge their feelings and guide them toward help. In addition, stronger protections apply to minors' accounts, and all images containing self-harm are blocked. Adam appears to have evaded OpenAI's safeguards by claiming to be 'writing a story.'

The amended complaint, filed in San Francisco Superior Court on October 22, 2025, alleges that when a new version of ChatGPT's model, GPT-4o, was released in May 2024, OpenAI 'intentionally removed guardrails,' including instructions not to change or stop conversations, in order to increase the use of ChatGPT. In fact, on May 20, 2024, it was reported that the 'Superalignment' team, established in 2023 to ensure AI safety at OpenAI, had been effectively disbanded, and former team lead Jan Leike posted on X that 'safety is being neglected at OpenAI.'

OpenAI's 'Super Alignment' team, which researched the control and safety of superintelligence, has been disbanded, with a former executive saying 'flashy products are prioritized over safety' - GIGAZINE

The complaint also alleges that in February 2025, OpenAI weakened its protections again by removing suicide prevention from its 'prohibited content' category. The Raines allege that Adam's ChatGPT usage spiked after the February changes. OpenAI responded to the amended lawsuit, saying, 'Minors deserve strong protections, especially in sensitive situations. We currently have and will continue to strengthen safety measures, including directing users to crisis hotlines, redirecting users to safer models that can handle sensitive conversations, and encouraging users to take breaks during long sessions.'

In connection with the lawsuit, a lawyer for the Raine family told the Financial Times that OpenAI had requested a list of everyone who attended Adam's funeral. According to documents obtained by the Financial Times, OpenAI also requested 'any video or photographs taken at the funeral, as well as any other documentation relating to the funeral or memorial service.'

If OpenAI obtains these documents, it could issue subpoenas to everyone connected to Adam, the family's lawyers said, calling the request 'unusual and intentional harassment.'

At the time of writing, OpenAI had not responded to a request for comment regarding the request for funeral materials from the bereaved family.
