Introducing 'nanochat,' an open source platform that lets you build a conversational AI like ChatGPT from scratch for just 15,000 yen in about 4 hours

Andrej Karpathy, a founding member of OpenAI and an AI engineer, has released nanochat, an open source project for building ChatGPT-style AI chatbots from scratch. With nanochat, you can go from training a base large language model (LLM) to chatting with your own ChatGPT-like chatbot in just a few hours, on a budget of about $100 (approximately 15,000 yen).

Excited to release new repo: nanochat! (it's among the most unhinged I've written).

Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single,… pic.twitter.com/LLhbLCoZFt

— Andrej Karpathy (@karpathy) October 13, 2025

GitHub - karpathy/nanochat: The best ChatGPT that $100 can buy.

https://github.com/karpathy/nanochat

nanochat

https://simonwillison.net/2025/Oct/13/nanochat/

nanochat packs every piece of LLM development into a single codebase: the design of the neural network at the heart of the model, tokenization to turn text into units the model can process, pre-training to acquire knowledge, fine-tuning to refine its conversational abilities, and a web interface for chatting with the finished model. The code is relatively compact at about 8,000 lines and is written mainly in Python (PyTorch), with Rust used to train the tokenizer, a step where speed matters.
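The article does not show how tokenizer training works, so here is a toy sketch, assuming a byte-level byte-pair encoding (BPE) tokenizer of the kind GPT-style models use. It is plain Python for readability; nanochat's actual tokenizer trainer is written in Rust and is not reproduced here, and the function name `train_bpe` is hypothetical.

```python
from collections import Counter


def train_bpe(text: str, num_merges: int) -> list[tuple[int, int]]:
    """Learn up to `num_merges` byte-pair merges from `text` (toy version)."""
    ids = list(text.encode("utf-8"))  # start from raw bytes (values 0..255)
    merges: list[tuple[int, int]] = []
    next_id = 256  # newly created tokens get ids after the 256 byte values
    for _ in range(num_merges):
        pairs = Counter(zip(ids, ids[1:]))  # count adjacent token pairs
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        # Replace every non-overlapping occurrence of `best`, left to right.
        new_ids: list[int] = []
        i = 0
        while i < len(ids):
            if i + 1 < len(ids) and (ids[i], ids[i + 1]) == best:
                new_ids.append(next_id)
                i += 2
            else:
                new_ids.append(ids[i])
                i += 1
        ids = new_ids
        next_id += 1
    return merges
```

For example, `train_bpe("abababab", 2)` first merges the bytes for "ab" into token 256, then merges a pair of 256s into token 257. A real trainer performs tens of thousands of merges over gigabytes of text, which is why a fast language like Rust pays off for this step.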

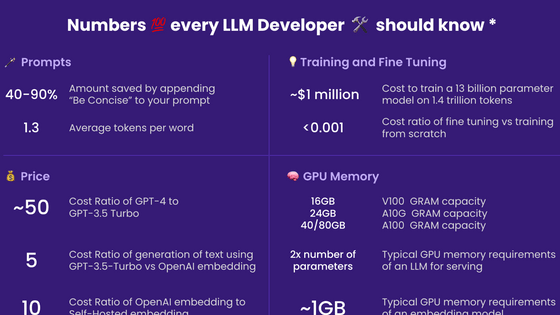

nanochat's biggest strengths are its ease of use and transparency. Developing a high-performance LLM typically requires an investment of hundreds of millions of yen, but nanochat is designed to run on a rented computer equipped with eight NVIDIA H100 high-performance GPUs, billed by the hour, which dramatically reduces the cost.

For example, if you rent such a machine for about $24 (about 3,600 yen) per hour, you can complete the entire training process in about four hours simply by running the included script 'speedrun.sh.' The model produced by 'speedrun.sh' has about 560 million parameters and can handle basic conversation.
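The ~$100 figure follows from simple arithmetic: hourly rate times hours. The sketch below assumes the roughly 150 yen per dollar rate implied by the article's own conversion ($24 ≈ 3,600 yen); the function name is hypothetical, and actual rental prices and exchange rates vary.

```python
def run_cost(hourly_rate_usd: float, hours: float,
             yen_per_usd: float = 150.0) -> tuple[float, float]:
    """Return the (USD, JPY) cost of renting a GPU node for `hours`."""
    usd = hourly_rate_usd * hours
    return usd, usd * yen_per_usd
```

`run_cost(24, 4)` gives $96 (14,400 yen), in line with the ~$100 budget, and `run_cost(24, 12)` gives $288, close to the roughly $300 quoted for a 12-hour run.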

GitHub repo: https://t.co/YW94b9YNVa

A lot more detailed and technical walkthrough: https://t.co/NtXhKkRCUU

Example conversation with the $100, 4-hour nanochat in the WebUI. It's... entertaining :) Larger models (eg a 12-hour depth 26 or a 24-hour depth 30) quickly get more… pic.twitter.com/IcW047vQz7

— Andrej Karpathy (@karpathy) October 13, 2025

The training process can be broadly divided into four stages.

The first stage, 'pre-training,' is the most time-consuming, taking about three hours. Here the model is fed a massive amount of text (approximately 24GB) from FineWeb-Edu, a dataset of educational web pages, which lets it acquire broad knowledge of language structure and of the world.
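Pre-training teaches the model to predict the next token in a text. A minimal sketch of that objective in plain Python, assuming the standard cross-entropy loss used for GPT-style language models (the function names are hypothetical; nanochat computes this with PyTorch on GPU tensors):

```python
import math


def cross_entropy(logits: list[float], target: int) -> float:
    """Negative log-probability of `target` under softmax(logits)."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def sequence_loss(logits_per_pos: list[list[float]], token_ids: list[int]) -> float:
    """Mean loss where the logits at position i are scored on token_ids[i + 1]."""
    losses = [cross_entropy(logits_per_pos[i], token_ids[i + 1])
              for i in range(len(token_ids) - 1)]
    return sum(losses) / len(losses)
```

With uniform logits over four tokens the loss is ln 4 ≈ 1.386, the cost of pure guessing; training lowers it by making the correct next token more probable.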

Next comes a phase called 'mid-training,' in which the model is trained on a general conversation dataset (SmolTalk), multiple-choice questions (MMLU), and math word problems (GSM8K). Beyond acquiring knowledge, this teaches the model how to converse with users and how to answer specific kinds of questions.
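Mixing conversations with question-answer data requires flattening everything into a single token stream with role markers. The sketch below uses hypothetical marker strings (`<|user_start|>` and so on) and a simple lettered layout for MMLU-style items; nanochat's real special tokens and formatting may differ.

```python
import string

# Hypothetical role markers for illustration only.
USER_START, USER_END = "<|user_start|>", "<|user_end|>"
ASSISTANT_START, ASSISTANT_END = "<|assistant_start|>", "<|assistant_end|>"


def render_conversation(turns: list[tuple[str, str]]) -> str:
    """Flatten (role, text) turns into one training string."""
    parts = []
    for role, text in turns:
        if role == "user":
            parts.append(f"{USER_START}{text}{USER_END}")
        elif role == "assistant":
            parts.append(f"{ASSISTANT_START}{text}{ASSISTANT_END}")
        else:
            raise ValueError(f"unknown role: {role}")
    return "".join(parts)


def mcq_to_turns(question: str, choices: list[str],
                 answer_idx: int) -> list[tuple[str, str]]:
    """Turn a multiple-choice item into a user question and a one-letter answer."""
    letters = string.ascii_uppercase
    options = "\n".join(f"{letters[i]}. {c}" for i, c in enumerate(choices))
    return [("user", f"{question}\n{options}"), ("assistant", letters[answer_idx])]
```

Rendering every dataset into the same chat layout is what lets a single training run learn both free-form conversation and exam-style answering.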

The model then goes through supervised fine-tuning (SFT), which takes about seven minutes. This stage uses a particularly high-quality selection of conversational data to further refine the model's responses and improve its final performance.
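A common SFT practice, assumed here rather than stated in the article, is to compute the loss only on the assistant's tokens, so the model learns to produce answers rather than to imitate user prompts. A minimal sketch with hypothetical function names:

```python
def assistant_loss_mask(turns: list[tuple[str, list[int]]]) -> tuple[list[int], list[int]]:
    """Concatenate tokenized turns; the mask is 1 only on assistant tokens."""
    ids: list[int] = []
    mask: list[int] = []
    for role, tokens in turns:
        ids.extend(tokens)
        mask.extend([1 if role == "assistant" else 0] * len(tokens))
    return ids, mask


def masked_mean(losses: list[float], mask: list[int]) -> float:
    """Average per-token losses over positions where mask == 1."""
    total = sum(loss for loss, m in zip(losses, mask) if m)
    count = sum(mask)
    return total / count if count else 0.0
```

Masked positions still pass through the model as context; they simply contribute nothing to the gradient.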

The final stage, 'reinforcement learning (RL),' is optional and is not run by default. It lets the model improve through its own trial and error, raising its accuracy on tasks with clear-cut correct answers, such as arithmetic problems.
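RL works best where correctness can be checked automatically. As an illustration, assuming GSM8K's convention of ending a solution with `#### <number>`, a reward function could compare final numbers like this (hypothetical names; not nanochat's implementation):

```python
import re
from typing import Optional

# Number after a "####" marker; commas such as "1,234" are allowed.
_ANSWER_RE = re.compile(r"####\s*(-?[\d,]*\.?\d+)")


def extract_answer(text: str) -> Optional[str]:
    """Return the number after the last '####' marker, without commas."""
    matches = _ANSWER_RE.findall(text)
    if not matches:
        return None
    return matches[-1].replace(",", "")


def reward(completion: str, reference: str) -> float:
    """1.0 if the completion's final answer matches the reference, else 0.0."""
    predicted = extract_answer(completion)
    if predicted is None:
        return 0.0
    return 1.0 if predicted == extract_answer(reference) else 0.0
```

A 1.0-or-0.0 score per sampled answer is enough for an RL loop to favor completions that reach the right result.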

According to Karpathy, the $100 model trained with nanochat scored 0.22 on the 'CORE metric,' which measures a model's overall language ability, slightly above the large GPT-2 model (0.21). The model knew facts such as 'The capital of France is Paris' and 'The chemical symbol for gold is Au,' but struggled with simple calculations. After fine-tuning, however, it could explain why the sky is blue by citing Rayleigh scattering, and even write poetry on the same theme.
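The CORE metric originates in the DCLM benchmark suite. The sketch below assumes it averages "centered" accuracy across a set of tasks, rescaling each task's accuracy so that random guessing scores 0 and a perfect score is 1; treat it as an approximation under that assumption, with hypothetical function names.

```python
def centered_accuracy(accuracy: float, random_baseline: float) -> float:
    """Rescale accuracy so chance level maps to 0 and perfect accuracy to 1."""
    return (accuracy - random_baseline) / (1.0 - random_baseline)


def core_score(results: dict[str, tuple[float, float]]) -> float:
    """Mean centered accuracy over tasks; values are (accuracy, random_baseline)."""
    centered = [centered_accuracy(acc, base) for acc, base in results.values()]
    return sum(centered) / len(centered)
```

On a four-choice task, 25% accuracy is chance level and centers to 0, so a score such as 0.22 reflects performance above guessing, not raw accuracy.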

And an example of some of the summary metrics produced by the $100 speedrun in the report card to start. The current code base is a bit over 8000 lines, but I tried to keep them clean and well-commented.

Now comes the fun part - of tuning and hillclimbing. pic.twitter.com/37MJdNcwtd

— Andrej Karpathy (@karpathy) October 13, 2025

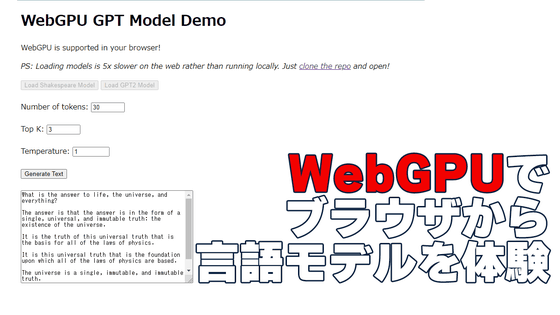

Once training is complete, users can chat with their home-built LLM through a web browser. Beyond offering a low-cost LLM, nanochat is intentionally written in simple, highly readable code so that its internals are easy to understand. Performance can be improved simply by increasing the number of layers in the model: for example, with a budget of about $300 (approximately 45,000 yen) and 12 hours of training, the model is expected to outperform the standard GPT-2 model.
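Why does the layer count alone control model size? A back-of-the-envelope sketch, assuming a model width of 64 × depth, a 65,536-token vocabulary, separate (untied) input and output embeddings, a 4× MLP expansion, and no biases or learnable normalization weights. These settings are assumptions for illustration, and the formula ignores small terms.

```python
def estimate_params(depth: int, aspect_ratio: int = 64, vocab_size: int = 65_536) -> int:
    """Rough parameter count for a GPT-style model whose width grows with depth."""
    d_model = depth * aspect_ratio  # assumed: width = depth * 64
    # Per layer: 4*d^2 for the Q, K, V and output projections,
    # plus 8*d^2 for an MLP with a 4*d hidden size (no biases).
    per_layer = 12 * d_model ** 2
    embeddings = 2 * vocab_size * d_model  # untied input embedding + output head
    return depth * per_layer + embeddings
```

Under these assumptions, depth 20 gives 560,988,160 parameters, consistent with the roughly 561 million of the $100 model, and depth 26 gives about 1.08 billion. Because width scales with depth, parameters grow roughly with the cube of the layer count.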

A 561-million-parameter language model built with nanochat has been published on Hugging Face.

sdobson/nanochat · Hugging Face

https://huggingface.co/sdobson/nanochat

Simon Willison, who published a script for running this model on a macOS CPU, noted that the nanochat model has approximately 560 million parameters, a size that suggests it could run on inexpensive devices such as a Raspberry Pi.
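The Raspberry Pi remark can be sanity-checked with arithmetic: the memory needed for the weights is the parameter count times the bytes per parameter. A minimal sketch with a hypothetical function name; it ignores activations, the KV cache and runtime overhead.

```python
def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9
```

About 560 million parameters need roughly 2.24 GB in 32-bit floats or 1.12 GB in a 16-bit format such as bfloat16, within reach of a board with a few gigabytes of RAM.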

When Willison gave the model the prompt 'Tell me about your dog,' it replied: 'I'm excited to share my passion for dogs with you all. As a veterinarian, I've had the opportunity to help so many owners care for their precious dogs. Training, being a part of their lives, and seeing their faces light up when they see their favorite treats and toys is truly special. I've had the opportunity to work with over 1,000 dogs, and I have to say it's a rewarding experience. The bond between owner and pet.' The response was cut off because it hit the token limit.
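A truncated reply like this is typical of a decoding loop with a fixed token budget. In the sketch below, a hypothetical `next_token` callback stands in for the model plus its sampling strategy; generation stops either at a stop token or when `max_new_tokens` runs out, in which case the reply ends mid-sentence.

```python
from typing import Callable


def generate(next_token: Callable[[list[int]], int], prompt_ids: list[int],
             max_new_tokens: int, stop_id: int) -> tuple[list[int], bool]:
    """Decode up to `max_new_tokens`; return (new tokens, whether truncated)."""
    ids = list(prompt_ids)
    new_tokens: list[int] = []
    for _ in range(max_new_tokens):
        tok = next_token(ids)  # model forward pass + sampling, abstracted away
        if tok == stop_id:
            return new_tokens, False  # the model ended its reply on its own
        ids.append(tok)
        new_tokens.append(tok)
    return new_tokens, True  # budget exhausted: the reply is cut off
```

Raising the token budget lets a reply finish, at the cost of longer generation time.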


in Software, Posted by log1i_yk