Google announces 'VaultGemma,' a differential privacy-based LLM

Google has announced VaultGemma, its first privacy-focused large language model (LLM), trained from scratch using a technique called differential privacy.

VaultGemma: The world's most capable differentially private LLM

https://research.google/blog/vaultgemma-the-worlds-most-capable-differentially-private-llm/

[2501.18914] Scaling Laws for Differentially Private Language Models

https://arxiv.org/abs/2501.18914

google/vaultgemma-1b · Hugging Face

https://huggingface.co/google/vaultgemma-1b

VaultGemma is based on Gemma, a family of open models from Google, and has 1 billion (1B) parameters. Its most notable feature is that differential privacy is applied to all stages of model pre-training.

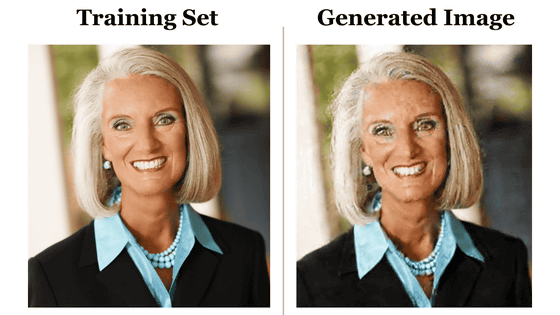

Differential privacy is a technique that adds mathematically calibrated noise to the training process. The noise limits the influence of any individual piece of training data on the final model and prevents specific information from being memorized, which allows VaultGemma to provide strong privacy guarantees for its training data.
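To make the idea of "calibrated noise" concrete, here is a minimal sketch of one noisy gradient step in the style of DP-SGD, a common way to train with differential privacy. This article does not spell out VaultGemma's exact training procedure, so the function names (`clip_gradient`, `dp_sgd_step`) and parameters (`clip_norm`, `noise_multiplier`) are illustrative assumptions, not Google's implementation. Each example's gradient is clipped so that no single example can move the model too far, and Gaussian noise scaled to that clipping bound is added before the update.

```python
import math
import random


def clip_gradient(grad, clip_norm):
    """Scale a per-example gradient so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(weights, per_example_grads, clip_norm, noise_multiplier, lr, rng):
    """One noisy step: clip each example, sum, add Gaussian noise, average."""
    dim = len(weights)
    summed = [0.0] * dim
    for grad in per_example_grads:
        clipped = clip_gradient(grad, clip_norm)
        for i in range(dim):
            summed[i] += clipped[i]

    # The noise scale is tied to the clipping bound, i.e. to the largest
    # change any single example could make to the summed gradient.
    sigma = noise_multiplier * clip_norm
    batch_size = len(per_example_grads)
    noisy_mean = [(s + rng.gauss(0.0, sigma)) / batch_size for s in summed]
    return [w - lr * g for w, g in zip(weights, noisy_mean)]


# Toy usage with made-up gradients (hypothetical values).
rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]
new_weights = dp_sgd_step([0.0, 0.0], grads, clip_norm=1.0,
                          noise_multiplier=1.1, lr=0.1, rng=rng)
```

A larger `noise_multiplier` gives stronger privacy but a noisier update, which is the source of the performance and stability trade-offs discussed in this article.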

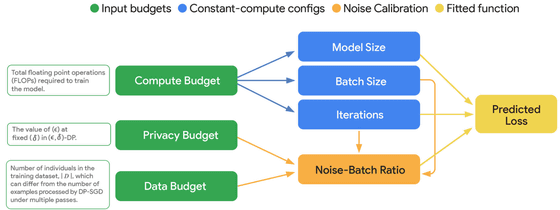

However, applying differential privacy to LLM training comes with challenges. For example, adding noise creates trade-offs such as reduced model performance, reduced training stability, and increased computational costs. To overcome these challenges, the Google research team established a new 'scaling law' for differential privacy.

In this research, Google models the complex relationship between compute budget, privacy protection strength, and data budget, which makes it possible to find the optimal training configuration within given constraints. Guided by this scaling law, VaultGemma, which is based on the Gemma 2 architecture, was trained on 2048 TPU v6e chips.

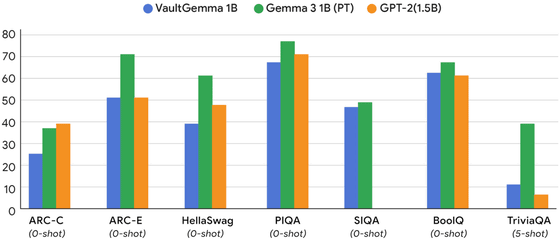

Introducing differential privacy does come at a cost in performance. Google states that VaultGemma scores lower on various academic benchmarks than Gemma 3, a non-private model of the same scale, but performs on par with older non-private models such as GPT-2, which was released in 2019. In other words, differential privacy currently costs the equivalent of approximately five to six years of technological advancement.

On the other hand, the privacy benefit is substantial. In a memorization test, in which the model is given a portion of a training document and asked to generate the continuation, memorization of training data was detected in Gemma 3, a non-private model, while none was detected in VaultGemma.
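The memorization test described above can be sketched roughly as follows. This is an illustration under stated assumptions, not Google's evaluation code: `generate` stands in for any model's text-generation function, the documents are lists of token IDs, and the prefix and suffix lengths are free parameters. A document counts as memorized when the model reproduces the true continuation exactly from the prefix.

```python
from typing import Callable, List, Sequence


def memorization_rate(
    generate: Callable[[Sequence[int], int], List[int]],
    documents: List[Sequence[int]],
    prefix_len: int,
    suffix_len: int,
) -> float:
    """Fraction of documents whose true suffix is reproduced from the prefix."""
    tested = 0
    memorized = 0
    for tokens in documents:
        if len(tokens) < prefix_len + suffix_len:
            continue  # too short to split into a prefix and a suffix
        prefix = list(tokens[:prefix_len])
        true_suffix = list(tokens[prefix_len:prefix_len + suffix_len])
        continuation = list(generate(prefix, suffix_len))
        tested += 1
        if continuation == true_suffix:
            memorized += 1
    return memorized / tested if tested else 0.0


# Toy stand-ins for real models (hypothetical): one that has memorized
# its training documents, and one that has not.
docs = [[1, 2, 3, 4, 5, 6], [7, 8, 9, 10, 11, 12], [1, 2]]
lookup = {tuple(d[:3]): d[3:] for d in docs if len(d) >= 6}


def parrot(prefix, n):
    return lookup.get(tuple(prefix), [0] * n)[:n]


def forgetful(prefix, n):
    return [0] * n


print(memorization_rate(parrot, docs, 3, 3))     # prints 1.0
print(memorization_rate(forgetful, docs, 3, 3))  # prints 0.0
```

Exact matching is a deliberately strict criterion chosen for this sketch; an evaluation could also count near-matches, which would need a separate similarity threshold.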

To accelerate research and development in privacy-preserving AI, Google is making VaultGemma's model weights publicly available on Hugging Face and Kaggle. Google looks forward to the community's contributions to VaultGemma as a foundation for privacy-sensitive applications and as a strong baseline for further research into private AI techniques.

in Software, Posted by log1i_yk