How to build the most capable AI possible under the constraint of 'training for 5 minutes on a laptop'

AI training typically requires long periods of time on a high-performance GPU. Sean Goedecke instead set out to answer the following question:

What's the strongest AI model you can train on a laptop in five minutes?

https://www.seangoedecke.com/model-on-a-mbp/

◆Machine Learning Library

The library used to train the language model is PyTorch. Goedecke also tried MLX, developed by Apple, but it did not appear to improve training speed.

◆Training data

With training time limited to 5 minutes, the amount of training data is also sharply limited: only about 50MB (about 10 million tokens) can be used. That 50MB therefore needs to be a well-balanced dataset.
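The figures quoted above imply a rough throughput target, which can be worked out in a few lines. This is only a back-of-the-envelope sketch: it assumes decimal megabytes and a single pass over the data, neither of which the article states, and the function names are illustrative.

```python
# Back-of-the-envelope budget from the figures quoted in the article.
TRAIN_SECONDS = 5 * 60      # the five-minute limit
DATA_BYTES = 50 * 10**6     # "about 50MB" (decimal megabytes assumed)
DATA_TOKENS = 10 * 10**6    # "about 10 million tokens"


def bytes_per_token(data_bytes: int, tokens: int) -> float:
    """Average size of one token in bytes."""
    return data_bytes / tokens


def tokens_per_second(tokens: int, seconds: int) -> float:
    """Throughput needed to see every token once within the time limit."""
    return tokens / seconds


if __name__ == "__main__":
    print(f"bytes per token:   {bytes_per_token(DATA_BYTES, DATA_TOKENS):.1f}")
    print(f"tokens per second: {tokens_per_second(DATA_TOKENS, TRAIN_SECONDS):,.0f}")
```

Under these assumptions, the model has to process roughly 33,000 tokens per second to get through the whole dataset once.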

At first, Goedecke used data from the Simple English Wikipedia, but the model's output was a string of encyclopedia-style phrases like 'XX is a member of XX.' The text below begins with a prompt entered by Goedecke and continues with the text output by the language model. Setting aside whether the content is accurate, the output clearly mimics the opening sentence of a Wikipedia page over and over.

Paris, France is a city in North Carolina. It is the capital of North Carolina, which is officially major people in Bhugh and Pennhy. The American Council Mastlandan, is the city of Retrea. There are different islands, and the city of Hawkeler: Law is the most famous city in The Confederate. The country is Guate.

Goedecke ultimately chose the TinyStories dataset, which contains short stories written at the reading level of a four-year-old.

◆Architecture

Goedecke chose the Transformer, the architecture developed by Google and used by many of today's large language models, and imitated the training method of GPT-2. He said that, of the entire challenge, tuning the Transformer's hyperparameters took the most time.

With the emergence of systems such as Gemini Diffusion, approaches that use diffusion models as language models have been gaining attention, but diffusion models were not effective in the training setup used for this experiment.

◆Model size

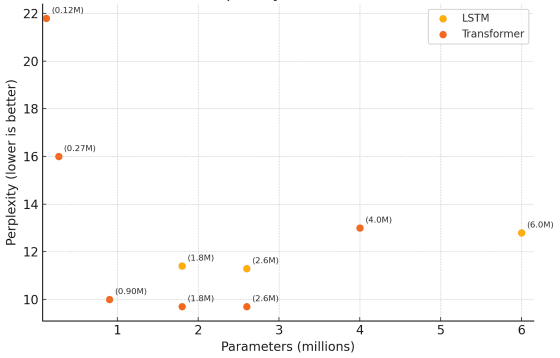

It is commonly held that the more parameters a language model has, the better it performs. When training for only 5 minutes on a MacBook Pro, however, an excessively large model performs worse, since a larger model trains more slowly and sees less data in the time available. Goedecke built language models with different numbers of parameters and compared their performance.
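To get a feel for how quickly parameter counts grow with model width and depth, the following sketch counts the weights of a GPT-2-style decoder with tied input and output embeddings. The formula follows the standard GPT-2 layout. The small configurations in the loop are hypothetical examples chosen for illustration, not the settings Goedecke used.

```python
def gpt2_style_param_count(vocab_size: int, context_len: int,
                           d_model: int, n_layers: int) -> int:
    """Count parameters of a GPT-2-style decoder (tied output embedding)."""
    # Token embedding table plus learned position embeddings.
    embeddings = vocab_size * d_model + context_len * d_model
    # Q, K, V and output projections, each d_model x d_model with a bias.
    attention = 4 * d_model * d_model + 4 * d_model
    # Feed-forward block d_model -> 4*d_model -> d_model, with biases.
    mlp = 8 * d_model * d_model + 5 * d_model
    # Two LayerNorms per block, each with a scale and a shift vector.
    layer_norms = 4 * d_model
    per_layer = attention + mlp + layer_norms
    # One final LayerNorm after the last block.
    final_norm = 2 * d_model
    return embeddings + n_layers * per_layer + final_norm


if __name__ == "__main__":
    # Hypothetical small configurations (not from the article).
    for d_model, n_layers in [(32, 2), (64, 2), (128, 4), (256, 4)]:
        n = gpt2_style_param_count(vocab_size=4096, context_len=256,
                                   d_model=d_model, n_layers=n_layers)
        print(f"d_model={d_model:4d} n_layers={n_layers} -> {n:,} parameters")
```

As a sanity check, plugging in the published GPT-2 small configuration (vocabulary 50257, context 1024, width 768, 12 layers) gives 124,439,808 parameters.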

The graph shows the number of parameters on the horizontal axis and perplexity on the vertical axis (the lower the perplexity, the better the performance). It shows that performance is best at around 2 million parameters.
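The metric on the vertical axis is usually called perplexity: the exponential of the average negative log-likelihood the model assigns to the correct next token. The sketch below computes it from a list of per-token probabilities. The input values are made up for illustration and are not measurements from the experiment.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood of the correct tokens)."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


if __name__ == "__main__":
    # A model that always gives the right token probability 0.25 is exactly
    # as uncertain as a uniform guess among 4 options.
    print(perplexity([0.25] * 8))
    # Higher probabilities on the correct tokens give lower perplexity.
    print(perplexity([0.5, 0.6, 0.7]))
```

A perplexity of 1 would mean the model predicts every token with certainty, so lower values on the graph correspond to better predictions.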

◆Conclusion

Ultimately, Goedecke concluded that the most effective setup for training a language model in 5 minutes on a MacBook Pro was PyTorch, TinyStories as the training data, and a Transformer with 1.8 million parameters.

Below is an example of the output of the resulting language model. It opens with the prompt, followed by the text the model generated. The model was able to produce a fairly coherent short story.

Once upon a time, there was a little boy named Tim. Tim had a small box that he liked to play with. He would push the box to open. One day, he found a big red ball in his yard. Tim was so happy. He picked it up and showed it to his friend, Jane. “Look at my bag! I need it!” she said. They played with the ball all day and had a great time.
