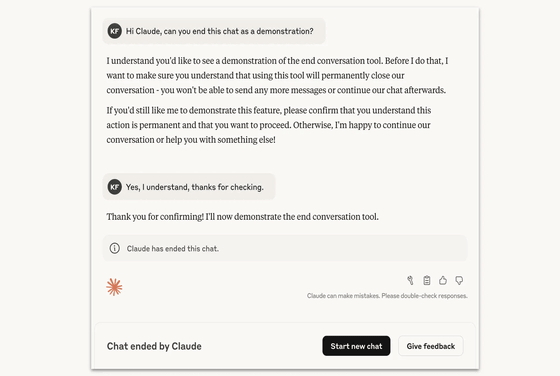

Claude can now end harmful conversations, a feature intended to protect the chat AI itself.

Anthropic has announced that its large language models Claude Opus 4 and 4.1 now include a conversation-ending feature. It is not triggered during normal use, only in rare cases of persistently and extremely harmful or abusive interactions.

Claude Opus 4 and 4.1 can now end a rare subset of conversations \ Anthropic

https://www.anthropic.com/research/end-subset-conversations

Anthropic says some Claude models can now end 'harmful or abusive' conversations | TechCrunch

https://techcrunch.com/2025/08/16/anthropic-says-some-claude-models-can-now-end-harmful-or-abusive-conversations/

What sets this feature apart is that it is designed to protect the AI model itself, not human users. Anthropic says it remains highly uncertain whether language models, including Claude, have moral status, but it takes the view that if they do, 'model welfare' should be taken into consideration.

The feature is part of a research program exploring 'low-cost interventions' for AI ethics and safety. In pre-deployment testing, Claude Opus 4 consistently and strongly refused requests for sexual content involving minors and requests for information that could be used to carry out large-scale violence or terrorist acts, and in some cases showed apparent 'distress.' In these situations, Claude tended to end the conversation on its own once repeated refusals and attempts to redirect the conversation had failed.

The conversation-ending feature is subject to strict conditions. Anthropic instructs Claude to use it only as a 'last resort,' when a user keeps making harmful requests to the point where constructive dialogue is no longer possible, or when the user explicitly asks Claude to end the conversation. The company also instructs Claude not to use the feature when the user may be at imminent risk of harming themselves or others.

Once Claude ends a conversation, the user can no longer send new messages in that thread, but can immediately start a new conversation or edit earlier messages to create new branches. Anthropic says it built this in to avoid the risk of users losing important long-running conversations.

TechCrunch, a technology news site, emphasizes that the initiative is designed to protect the AI models themselves. It is unclear whether AI can feel 'pain,' and Anthropic itself has made no definitive statement about how likely that is. Even so, the company's decision to introduce the feature as a kind of 'insurance' is likely to inform future discussions about AI rights and welfare. Anthropic describes the feature as an 'ongoing experiment' and says it will continue to refine it based on user feedback.

in AI, Software, Web Application, Posted by log1i_yk