Creating a Space Invaders game in JavaScript with the LLM 'GLM-4.5 Air' and 'MLX' on a laptop released in 2022

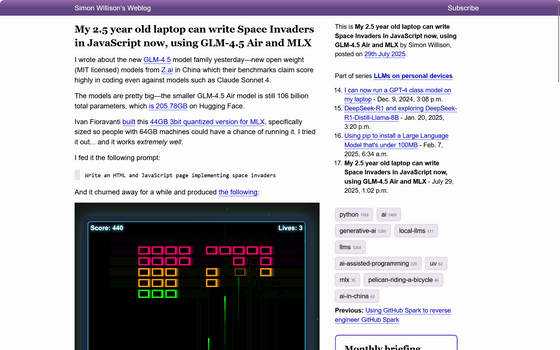

Systems engineer Simon Willison reported that he created 'Space Invaders' by running a large language model (LLM) locally on his laptop. Willison added, 'I was surprised that it could run on a laptop released in 2022.'

My 2.5 year old laptop can write Space Invaders in JavaScript now, using GLM-4.5 Air and MLX

The laptop Willison used was an M2-equipped MacBook Pro released in 2022. That was also the year OpenAI released its chatbot 'ChatGPT' to the public. At the time, LLM-based chat AI had not yet been widely adopted, let alone the idea of 'running AI locally on a laptop.'

Willison combined GLM-4.5-Air, an LLM released in 2025, with MLX, a framework for training and deploying machine learning models on Apple silicon. Another developer had already converted GLM-4.5-Air into a 44GB, 3-bit quantized version for MLX, and Willison, whose MacBook Pro has 64GB of memory, used that version.
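The 44GB figure can be sanity-checked with back-of-the-envelope arithmetic. The sketch below is an estimate under stated assumptions, not a description of how the actual conversion was made: it assumes GLM-4.5-Air has roughly 106 billion total parameters (a figure not given in this article) and that quantization is group-wise, storing one 16-bit scale and one 16-bit bias for every 64 weights. `quantized_size_bytes` is a hypothetical helper, not an MLX API.

```python
def quantized_size_bytes(num_params: float, bits: int, group_size: int = 64,
                         scale_bits: int = 16, bias_bits: int = 16) -> float:
    """Rough storage estimate for group-wise quantized weights.

    Each weight costs `bits` bits, and each group of `group_size` weights
    also stores one scale and one bias (assumed 16-bit each here).
    """
    overhead_per_weight = (scale_bits + bias_bits) / group_size
    effective_bits = bits + overhead_per_weight
    return num_params * effective_bits / 8  # bits -> bytes


# Assumption: ~106 billion total parameters for GLM-4.5-Air.
PARAMS = 106e9

raw = PARAMS * 3 / 8                        # 3 bits per weight, no overhead
with_groups = quantized_size_bytes(PARAMS, bits=3)

print(f"3-bit weights only:     {raw / 1e9:.1f} GB")
print(f"3-bit + group overhead: {with_groups / 1e9:.1f} GB")
print(f"Left on a 64GB machine: {64 - with_groups / 1e9:.1f} GB")
```

Under these assumptions the estimate falls between about 40GB and 46GB, the same ballpark as the 44GB download. Real conversions can differ, for example by keeping some layers at higher precision, so this only shows why a 3-bit model of this size fits on a 64GB laptop while leaving limited room for everything else.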

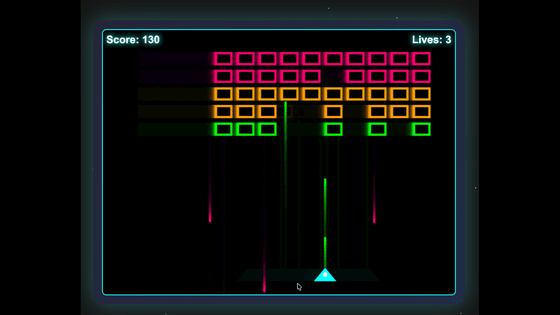

Willison entered a prompt along the lines of 'Create an HTML and JavaScript page that implements Space Invaders.' The AI began reasoning: 'The user wants me to create a Space Invaders game using HTML, CSS, and JavaScript. I need to create a fully functional game with the following features: a player spaceship that can move left and right and shoot; enemy invaders that move in formation and fight back; score tracking; a life/health system; a game over condition...'

The reasoning was followed by the HTML and debug output, which together made up the finished game. The full model output is available below.

space_invaders.txt · GitHub

https://gist.github.com/simonw/9f515c8e32fb791549aeb88304550893#file-space_invaders-txt-L61

The resulting Space Invaders game is as follows:

'The model used about 48GB of RAM at its peak, which left only the remaining 16GB for everything else,' Willison said. 'I had to close many apps to run the model, but once it was up and running, the speed was quite good. It's interesting to note that almost all of the models released in 2025 are specialized for coding, and these coding models are now very capable. When I first tried LLaMA two years ago, I never dreamed that the same laptop would one day be able to run models as capable as GLM-4.5-Air, Mistral Small 3.2, Gemma 3, Qwen 3, and the other high-quality models released in the past six months.'
