Google officially releases 'Gemma 3n', a lightweight open-weight model that runs on a smartphone with as little as 2GB of memory, accepts audio and video input, and also handles Japanese

On Thursday, June 26, 2025, Google officially released 'Gemma 3n', a lightweight, high-performance multimodal AI model. Detailed specifications and benchmark results were published alongside the release.

Introducing Gemma 3n: The developer guide - Google Developers Blog

Gemini, Google's flagship AI product, offers high performance, but it is designed to be used through Google's apps and APIs, and its model data cannot be freely handled. The Gemma series, by contrast, was developed from the start as open-weight models, so developers can download and use the model data freely.

The Gemma series includes a wide range of models, from large to small. Gemma 3n, whose early preview was released in May 2025, drew attention as a small model that runs on a smartphone while still delivering high performance. With the official release on June 26, 2025, the model data can now be downloaded from Hugging Face and Kaggle.

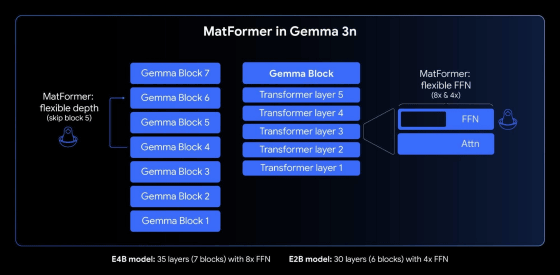

Gemma 3n is built on an architecture called 'MatFormer': while the main model, 'Gemma 3n E4B', was being trained, a smaller sub-model, 'Gemma 3n E2B', was optimized at the same time. Google describes MatFormer as 'like a matryoshka doll, a larger model contains a fully functional miniature version of itself.'
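The "model inside a model" idea can be illustrated with a toy feed-forward layer. The following is a minimal Python sketch, not Gemma 3n's actual implementation. It assumes, following the general idea of nested feed-forward widths, that the smaller model simply reuses the first `k` hidden units of the larger model's weights. The function names and sizes are hypothetical.

```python
import random


def make_ffn(d_model, hidden, seed=0):
    """Create random weights for a toy FFN: y = W2 @ relu(W1 @ x)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in range(hidden)]
    w2 = [[rng.uniform(-1, 1) for _ in range(hidden)] for _ in range(d_model)]
    return w1, w2


def ffn_forward(w1, w2, x, k=None):
    """Run the FFN using only the first k hidden units (all units if k is None).

    The "small" model is not a separate set of weights: it is a slice of the
    "large" model's W1 rows and W2 columns (hypothetical toy, not Gemma code).
    """
    k = len(w1) if k is None else k
    # Hidden activations from the first k rows of W1, with ReLU.
    h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w1[:k]]
    # Output uses only the first k columns of each W2 row.
    return [sum(w * hi for w, hi in zip(row[:k], h)) for row in w2]


def param_count(d_model, k):
    """Number of FFN weights active when using k hidden units."""
    return 2 * d_model * k
```

For example, `ffn_forward(w1, w2, x)` runs the full layer, while `ffn_forward(w1, w2, x, k=4)` runs the nested half-width layer from the same stored weights. This sketch only shows weight sharing; training both widths jointly is what would make the smaller slice perform well on its own.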

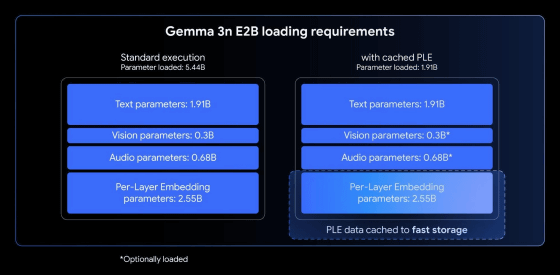

Gemma 3n also uses a memory-saving technique called 'Per-Layer Embeddings (PLE)', which greatly reduces the amount of data that must be loaded into memory compared with conventional methods. As a result, Gemma 3n E2B has 5B parameters but uses about as much memory as a conventional 2B model, running in as little as 2GB. Gemma 3n E4B has 8B parameters but has the memory footprint of a conventional 4B model, running in as little as 3GB.
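A rough back-of-envelope calculation shows why keeping part of the parameters out of working memory matters. The Python sketch below uses the article's rounded figures (5B raw vs. about 2B effective for E2B, 8B vs. about 4B for E4B). The bits-per-weight value is an assumption, and the estimate ignores activations, caches, and runtime overhead, so it is not meant to reproduce Google's exact 2GB and 3GB numbers.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in GB (1 GB = 1e9 bytes); weights only."""
    return num_params * bits_per_weight / 8 / 1e9


def offloaded_fraction(raw_params, effective_params):
    """Share of parameters that need not sit in working memory (assumption:
    the difference between raw and effective counts is kept out, as PLE aims to)."""
    return 1 - effective_params / raw_params


# Rounded parameter counts from the article; 8 bits per weight is an assumption.
for name, raw, effective in [("E2B", 5e9, 2e9), ("E4B", 8e9, 4e9)]:
    print(
        name,
        f"all weights: {weight_memory_gb(raw, 8):.1f} GB,",
        f"effective only: {weight_memory_gb(effective, 8):.1f} GB,",
        f"kept out: {offloaded_fraction(raw, effective):.0%}",
    )
```

Under these assumptions, loading only the effective parameters cuts weight storage by 60% for E2B and 50% for E4B, which is the kind of saving that lets the models fit on a phone.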

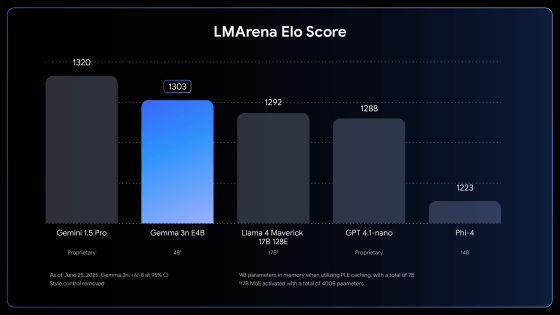

The graph below compares the chat performance of 'Gemma 3n E4B' with 'Gemini 1.5 Pro', 'Llama 4 Maverick 17B-128E', 'GPT-4.1 nano', and 'Phi-4'. Gemma 3n E4B outscored the relatively large 'Llama 4 Maverick 17B-128E' and 'Phi-4', and also beat the commercial model 'GPT-4.1 nano'.

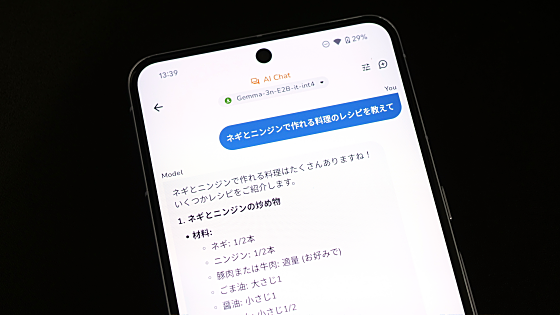

Gemma 3n accepts image, audio, and video input in addition to text. It supports runtimes such as 'Hugging Face Transformers', 'llama.cpp', 'Google AI Edge', 'Ollama', and 'MLX', and it can also run locally on a smartphone through the 'Google AI Edge Gallery' app. In the following article, we installed Google AI Edge Gallery on a Pixel 8 Pro and actually chatted with Gemma 3n.
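As one example of running the model locally on a PC, the following minimal Python sketch sends a text prompt to a local Ollama server through its `/api/generate` REST endpoint, using only the standard library. It assumes Ollama is running on its default port and that the model has already been pulled under the tag `gemma3n:e4b`; check the exact tag with `ollama list` on your machine.

```python
import json
import urllib.request

# Ollama's default local endpoint (assumes a server on the default port).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="gemma3n:e4b"):
    """Build a non-streaming generate request; the model tag is an assumption."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="gemma3n:e4b"):
    """Send the prompt and return the generated text from the JSON reply."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `print(generate("日本の首都はどこですか？"))` would send a Japanese question and print the model's answer. Setting `"stream": False` makes Ollama return a single JSON object instead of a stream of partial chunks, which keeps the example short.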

Introducing the Google-made open source AI model 'Gemma 3n' that runs locally on smartphones, here's how to use it on your smartphone right now - GIGAZINE

You can also try a chat demo in Google AI Studio.

Chat | Google AI Studio

https://aistudio.google.com/prompts/new_chat?model=gemma-3n-e4b-it
