Introducing the AI-equipped tentacle robot 'Shoggoth Mini' that moves its tentacles in a wavy motion and responds to human voices and movements

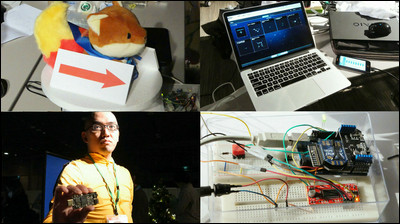

Entrepreneur and engineer Matthieu Le Cauchois has developed Shoggoth Mini, an AI-equipped tentacle robot that moves its tentacles like an octopus and responds to human voices and movements to express its intentions.

Shoggoth Mini

In 2025, Le Cauchois came across two projects: a paper by Apple researchers describing a desk-lamp-like robot that moves its head to express its intentions, and SpiRobs, a robot with octopus-like tentacles. Together they led him to decide to develop a robot that could express its intentions using tentacles.

First, he built a stand to test control of the SpiRobs tentacle, but a shortage of materials forced him to change the color partway through, and the result looked unexpectedly both cute and creepy. He also liked the eyes his roommate had drawn with a marker, so he used this look as the basis for the final design.

The robot is controlled through GPT-4o's real-time API. In addition to responding to the user's voice, the system converts the user's actions into text cues such as 'the user is waving' or 'the user's finger is nearby' so that the robot can respond to them as well.
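The step of turning observed actions into text cues can be illustrated with a minimal Python sketch. This is not the project's actual code: the `VisionEvent` type, the event labels, the confidence threshold, and the `to_text_cue` function are all hypothetical names introduced here for illustration. Only the two cue phrases come from the description above.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical event labels mapped to the cue phrases quoted in the article.
CUE_TEMPLATES = {
    "wave": "the user is waving",
    "finger_near": "the user's finger is nearby",
}


@dataclass
class VisionEvent:
    """A detected user action (assumed shape, not the project's real type)."""
    label: str
    confidence: float


def to_text_cue(event: VisionEvent, threshold: float = 0.8) -> Optional[str]:
    """Return a text cue for a confident, known event, otherwise None."""
    if event.confidence < threshold:
        return None  # too uncertain to report to the language model
    return CUE_TEMPLATES.get(event.label)  # None for unknown labels


if __name__ == "__main__":
    events = [VisionEvent("wave", 0.93), VisionEvent("finger_near", 0.41)]
    for ev in events:
        cue = to_text_cue(ev)
        if cue is not None:
            print(cue)
```

In a setup like this, each non-empty cue would be passed to the language model as ordinary text alongside the voice input, which is one plausible way for a voice-driven model to react to gestures it cannot see directly.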

The Shoggoth Mini, named after

Turning to the Shoggoth Mini, he asks, 'Can you hear me?'

Shoggoth Mini then swings its tentacles back and forth to signal 'yes.'

Next, he asks, 'Are you a robot?'

The robot then shakes its tentacles to indicate 'no.'

When asked, 'So, are you a Shoggoth?', it waves its tentacles to answer 'yes.'

If you wave at the Shoggoth Mini, it will wave its tentacles in response.

You can also high-five it.

Hold out your glasses and ask, 'Can I have these glasses?'

The tentacles curl up and hold the glasses tightly.

If you ask, 'Would you let go of my glasses?', the tentacles release them.

When Shoggoth Mini grabs hold of the user's hand with its tentacles, the robot can be lifted up.

When you hold out your finger, the tentacles move as if to chase it, making you feel like you are interacting with a pet.

However, when Shoggoth Mini was asked, 'Do you like humans?', there was a slight pause before it replied, 'Yes.'

Le Cauchois said that as development progressed, Shoggoth Mini's expressions became richer, but he noticed that it felt less alive. Early in development, the robot's movements surprised him, and he had to interpret or guess its intentions. As he came to understand how it moved, the gap between what he predicted and what the robot actually did shrank. As a result, he was no longer surprised or puzzled by unexpected movements, and the robot seemed less like a living thing.

'The perceived sense of being alive depends on unpredictability, on a certain opacity,' Le Cauchois said. 'Biological systems move through a chaotic, high-dimensional world, but Shoggoth Mini does not. This raises the question: do we really want to make robots that feel alive? Or is there a point, somewhere beyond expressiveness, where the system becomes too subjective and unpredictable and is no longer comfortable to have around humans?'

The source code and hardware blueprints for Shoggoth Mini are available on GitHub.

GitHub - mlecauchois/shoggoth-mini

https://github.com/mlecauchois/shoggoth-mini
