Apple develops a robot resembling Pixar's desk lamp that follows hand movements and dances to music, and test subjects rate it highly as 'human-like'

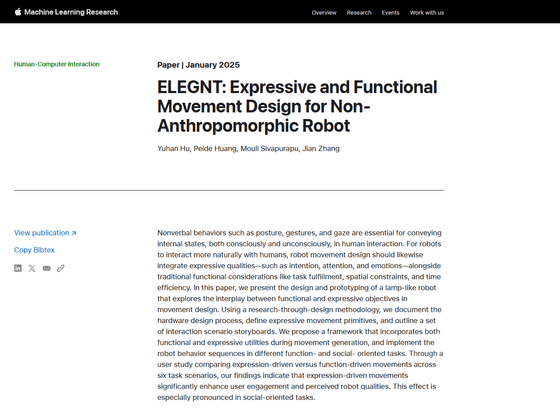

In a research paper published in January 2025, Apple argues that 'characteristics such as expressive movement are key to optimizing human-robot interaction.' To illustrate this, Apple is developing a lamp-shaped robot that moves in response to human movements.

ELEGNT: Expressive and Functional Movement Design for Non-anthropomorphic Robot - 2501.12493v1.pdf

ELEGNT: Expressive and Functional Movement Design for Non-Anthropomorphic Robot - Apple Machine Learning Research

https://machinelearning.apple.com/research/elegnt-expressive-functional-movement

Apple's new research robot takes a page from Pixar's playbook | TechCrunch

Apple argues that 'non-verbal behaviors like posture, gestures and gaze are essential in human interactions for communicating internal states, both consciously and unconsciously. Similarly, for robots to interact more naturally with humans, they must integrate expressive capabilities such as intent, attention and emotion in addition to traditional functional considerations such as task accomplishment, spatial constraints and time efficiency.'

So Apple has developed a robotic lamp that is both functional and expressive.

This robot can move flexibly, for example by repositioning its light to follow the movement of the user's hand.

It can also perform a variety of other actions, such as projecting illustrations onto a wall or displaying calculation formulas.

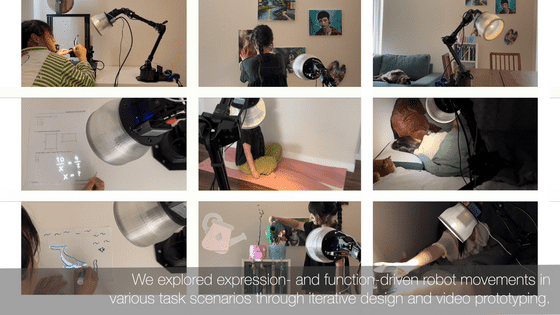

To measure the effect of non-verbal behavior on people, Apple ran six tasks with two types of robot behavior: 'expression-driven,' which includes non-verbal behavior, and 'function-driven,' which does not. It then examined what impressions each type elicited in human subjects.

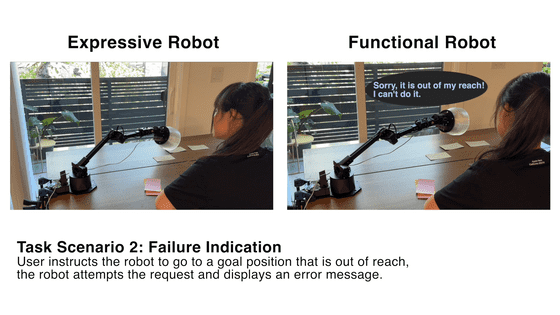

For example, if you ask the robot to read a note that is out of reach of its arm, an expression-driven robot will bend and stretch toward the note in an attempt to reach it before issuing an error message, whereas a function-driven robot does not make the attempt.

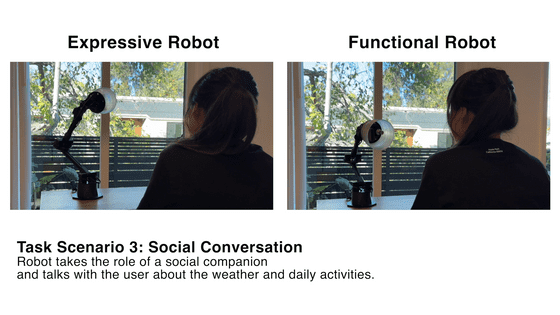

When asked about the weather, the 'expression-driven' robot looks out the window before telling the user the weather. The 'function-driven' robot, by contrast, tells the user the weather without moving the lamp.

Furthermore, if you ask an 'expression-driven' robot to play music, it will move as if dancing.

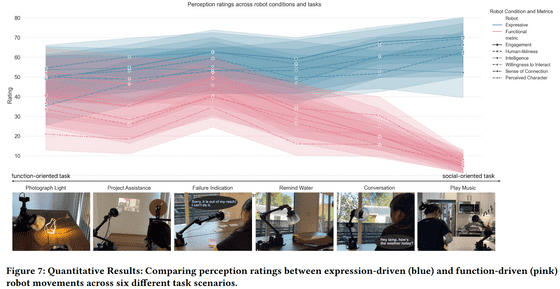

When subjects were asked to give their impressions after completing a total of six tasks, the 'expression-driven' model (shown in blue) received higher ratings than the 'function-driven' model (shown in pink) in terms of 'friendliness,' 'humanness,' 'intelligence,' 'willingness to interact,' and 'feeling connected.'

'Our findings suggest that expressive movements significantly improve user engagement and perceived robot quality,' Apple reported. TechCrunch said, 'Expressive movements are friendly and help humans and robots form connections.'
