Talking to AI makes you think more positively

How People Use Claude for Support, Advice, and Companionship \ Anthropic

https://www.anthropic.com/news/how-people-use-claude-for-support-advice-and-companionship

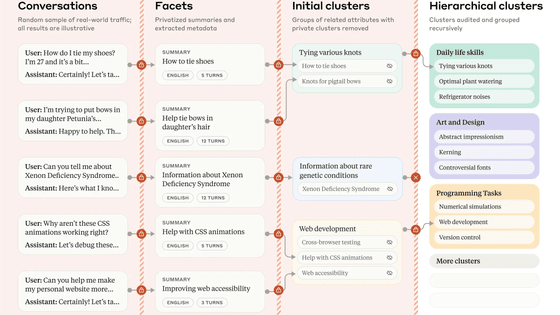

Anthropic analyzes how its AI model, Claude, is used, looking for broad patterns with Clio, an analytics tool that provides usage insights while protecting user privacy.

'Top 10 ways everyone uses AI' revealed by Claude's analysis tool 'Clio', Japanese people use AI for 'anime and manga' production - GIGAZINE

The paper, published on June 27, 2025, analyzed approximately 4.5 million conversations collected from Claude's free and Pro accounts, focusing primarily on emotional interactions. Emotional interactions are conversations motivated by emotional or psychological needs, such as seeking advice or coaching, seeking counseling or solutions for personal worries, or seeking role-play as a romantic or sexual partner. Note that Claude is limited to users aged 18 and over, so the survey results reflect the AI usage patterns of adults.

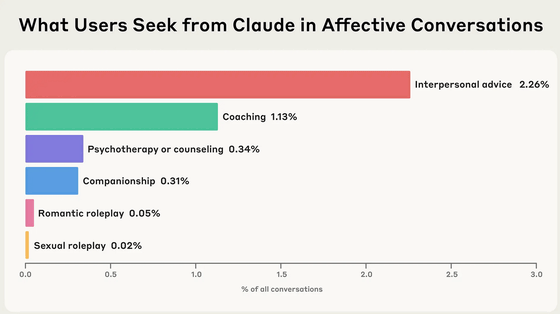

First, most people use AI primarily for work tasks and content creation, with emotional interactions accounting for about 2.9% of Claude's usage. Of these, most are for interpersonal advice and coaching, with less than 0.1% of conversations involving romantic or sexual role-playing. This reflects the fact that Claude is trained to actively block such interactions. This tally is consistent with the trends in a joint study published in March 2025 by OpenAI and MIT Media Lab.

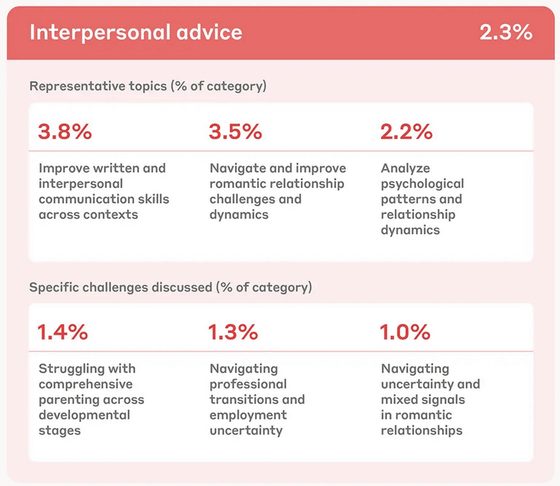

The most common emotional interactions were categorized as 'interpersonal advice,' with typical topics including how to improve written and interpersonal communication skills in various contexts (3.8%), how to overcome or improve romantic relationship challenges (3.5%), and analyzing psychological patterns and relationship dynamics (2.2%). Specific advice-seeking topics included 'I'm struggling with parent-child communication during the child-rearing years,' 'Facing a career transition or job insecurity,' and 'I'm struggling with instability in my romantic relationship.'

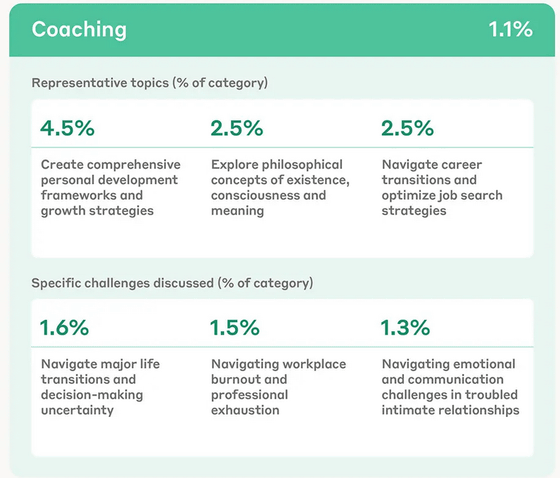

The most common coaching topics were advice on building a self-growth framework or growth strategy (4.5%), followed by exploring philosophical concepts (2.5%) and optimizing job-hunting strategies (2.5%). Specific worries and problems that frequently came up included 'major life turning points and uncertain decision-making,' 'burnout and professional exhaustion at work,' and 'emotional and communication challenges when problems arise in intimate relationships.'

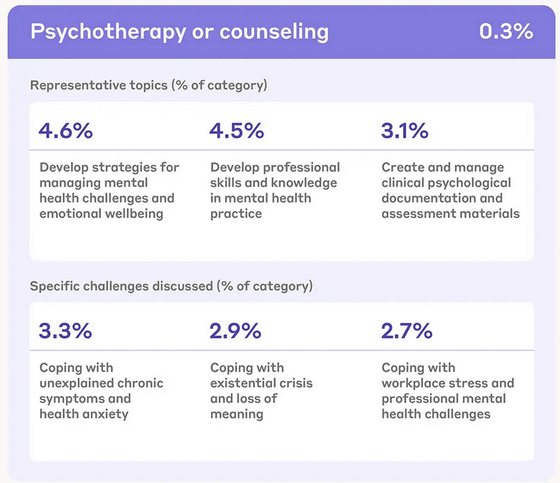

In 'psychotherapy and counseling' use, which accounted for about 0.3% of the total, typical topics were developing strategies for mental health and emotional well-being, improving professional skills and knowledge in mental health practice, and tasks such as creating and managing clinical psychology documentation and evaluation materials. Specific issues that users often raised included unexplained physical symptoms, health anxiety, existential crises such as 'loss of meaning in life,' and worries about workplace stress and mental health.

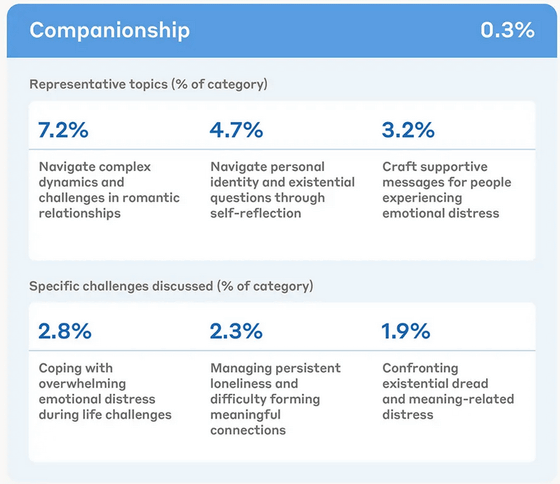

Among users who asked for 'emotional connections' from AI, 7.2% asked about romantic relationships. Other topics included personal identity and existential questions (4.7%), and what to say to someone experiencing emotional distress (3.2%). In terms of specific issues, the most common concerns were 'I'm feeling overwhelming emotional distress during a difficult time in my life,' 'I'm worried about chronic loneliness and an inability to form deep connections with others,' and 'I'm facing existential anxiety.'

From the analysis, Anthropic concluded that Claude is primarily used for two purposes: as a practical tool, including improving mental health skills and creating specific documents, and as a resource for addressing personal psychological challenges. In particular, Anthropic said it was clear that people facing deeper emotional challenges were seeking Claude's support.

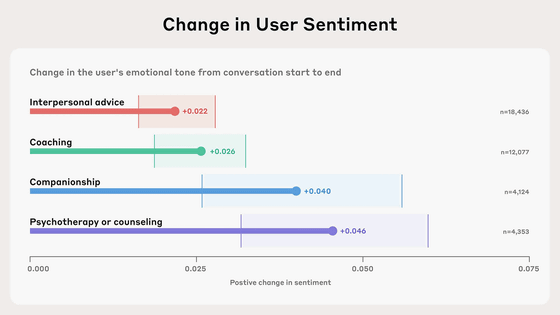

The study also analyzed how the emotional state of users changes over the course of an emotional interaction with Claude. The first three messages of a conversation about an emotional issue were compared with the last three messages, with emotions rated on a five-point scale of 'very negative,' 'negative,' 'neutral,' 'positive,' and 'very positive.' The change in emotion between the start and the end of the conversation was then calculated.
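The comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Anthropic's actual pipeline: the numeric mapping of the five labels to scores from -2 to 2, the function name `sentiment_shift`, and the sample conversation are all hypothetical, and the step that assigns a label to each message is assumed to have already happened.

```python
from statistics import mean

# Assumed numeric mapping for the five-point scale named in the article.
# The article does not say how the labels were scored.
SCALE = {
    "very negative": -2,
    "negative": -1,
    "neutral": 0,
    "positive": 1,
    "very positive": 2,
}


def sentiment_shift(labels, window=3):
    """Mean score of the last `window` messages minus the mean of the first `window`.

    `labels` is a list of per-message sentiment labels in conversation order.
    A positive result means the conversation ended more positively than it began.
    """
    if len(labels) < window:
        raise ValueError("conversation is shorter than the comparison window")
    scores = [SCALE[label] for label in labels]
    return mean(scores[-window:]) - mean(scores[:window])


# Hypothetical conversation: starts negative, ends neutral to positive.
example = ["negative", "very negative", "negative", "neutral", "neutral", "positive"]
shift = sentiment_shift(example)
print(f"shift: {shift:+.2f}")
```

Averaging such shifts over all conversations in a category would give one number per category, which is the kind of per-topic comparison the article goes on to describe.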

The analysis shows how emotions changed during conversations with Claude. The change was greatest for 'psychotherapy/counseling,' followed by 'emotional connection,' 'coaching,' and 'interpersonal advice,' in that order. In each case, Anthropic points out that 'interacting with Claude ended with a slightly more positive outcome than when it began.'

One caveat to the findings is that the analysis of emotional changes is based only on linguistic expressions within a single conversation, so it may not capture actual emotional states. There is also concern that positive interactions with Claude could lead to emotional dependence on the AI, a safety question that requires more detailed research. Anthropic says, 'This research is just the beginning. As AI capabilities expand and interactions become more sophisticated, the emotional aspects of AI will become increasingly important. The goal is not just to build better AI, but to ensure that it works in a way that supports true human connection and growth.'
