Review of 'Cactus Chat', a free app that lets you chat with AI models running locally on both Android smartphones and iPhones

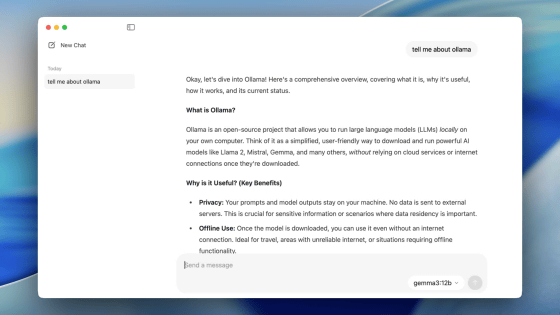

Popular chat AIs such as ChatGPT and Gemini do their processing on servers. However, driven by demands such as 'I want to use AI offline' and 'I don't want to upload my data to a server,' research and development is underway to run chat AI on devices such as PCs and smartphones. 'Cactus Chat' is one of the apps that can run chat AI on a smartphone, and it supports both Android smartphones and iPhones. I was curious how fast it could run, so I tried it on a Pixel 9 Pro and an iPhone 16 Pro.

GitHub - cactus-compute/cactus: Framework for running AI locally on mobile devices and wearables. Hardware-aware C/C++ backend with wrappers for Flutter & React Native. Kotlin & Swift coming soon.

◆Try using it on an Android smartphone

The Android version of Cactus Chat is available on Google Play.

Cactus Chat - Apps on Google Play

https://play.google.com/store/apps/details?id=com.rshemetsubuser.myapp

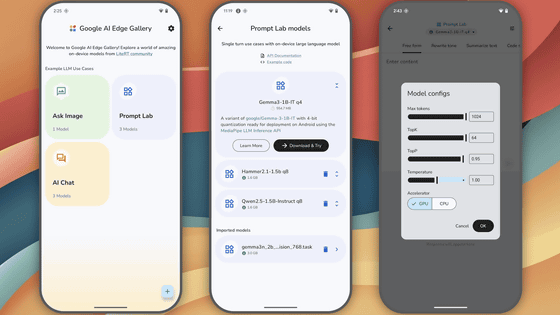

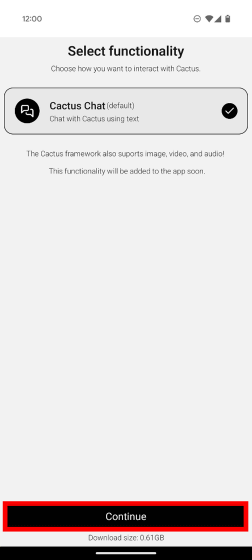

When you install and launch Cactus Chat, you will be prompted to download a 0.61GB AI model. Tap 'Continue'.

Once the download is complete, tap 'Get Started.'

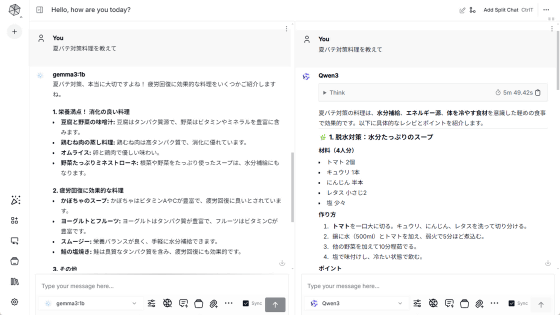

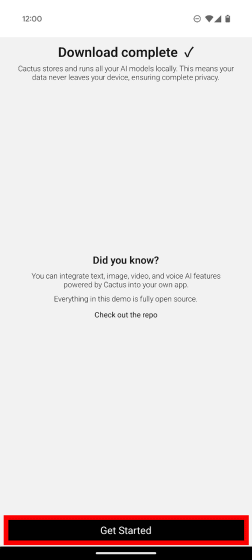

Now you can run the chat AI locally. By default, the app uses 'Qwen2.5-0.5B', a language model developed by Alibaba.

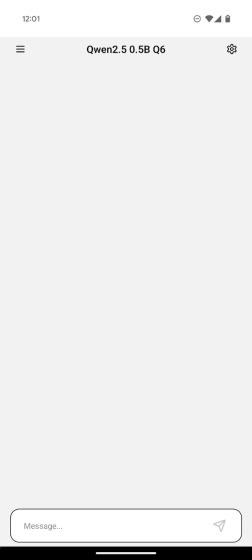

To verify that the processing is indeed local, switch your device to Airplane mode.

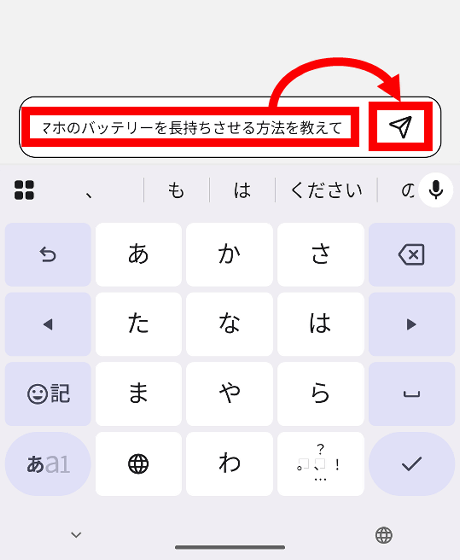

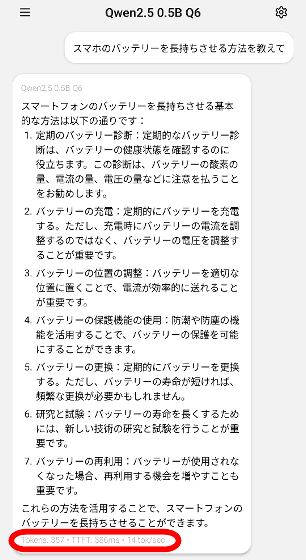

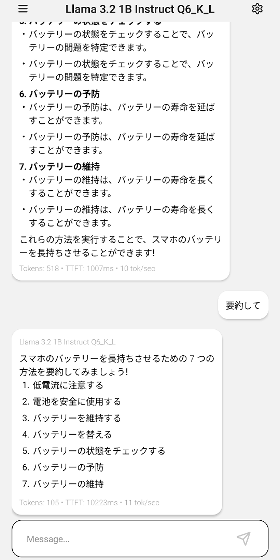

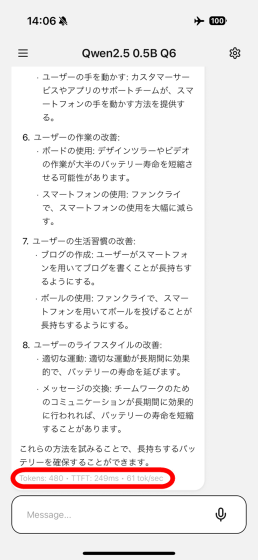

Enter 'Tell me how to make my smartphone battery last longer' in the text input area and tap the send button.

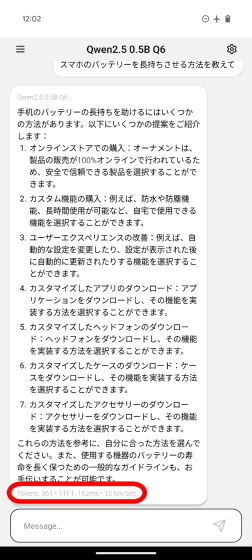

The answer was output smoothly. Below each answer, the app lists the number of output tokens, the time to first token (TTFT, the delay between sending the text and the first token being generated), and the output speed in tokens per second. On the Pixel 9 Pro, output ran at 15 tokens per second.
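To make the three figures concrete, here is a minimal sketch of how they could be derived from timestamps. It assumes one common convention (TTFT measured from send to first token, and throughput counted over the decode phase from the first token to the last); the review does not say exactly how Cactus Chat computes its numbers, and `summarize` and `GenerationStats` are hypothetical names, not part of the app or the Cactus framework.

```python
from dataclasses import dataclass


@dataclass
class GenerationStats:
    tokens: int          # number of output tokens
    ttft_s: float        # time to first token, in seconds
    tokens_per_s: float  # decode throughput


def summarize(send_time: float, token_times: list[float]) -> GenerationStats:
    """Hypothetical helper: derive the stats from the send timestamp and
    the arrival time (in seconds) of each generated token."""
    if not token_times:
        raise ValueError("no tokens generated")
    # TTFT: delay between sending the prompt and the first token appearing.
    ttft = token_times[0] - send_time
    # Assumed convention: throughput over the decode phase only, i.e. the
    # tokens after the first divided by the time spent producing them.
    decode_time = token_times[-1] - token_times[0]
    tps = (len(token_times) - 1) / decode_time if decode_time > 0 else float("inf")
    return GenerationStats(len(token_times), ttft, tps)


# Example: prompt sent at t=0, first token at 0.5 s, then one token every 0.25 s.
stats = summarize(0.0, [0.5, 0.75, 1.0, 1.25, 1.5])
print(stats)  # 5 tokens, TTFT 0.5 s, 4.0 tokens/s
```

Excluding the first token from the throughput keeps prompt-processing time (already captured by TTFT) from dragging down the tokens-per-second figure; an app could just as well divide the total token count by the total time, which gives a lower number for short answers.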

To get a good idea of how quickly answers can be generated, watch the video below:

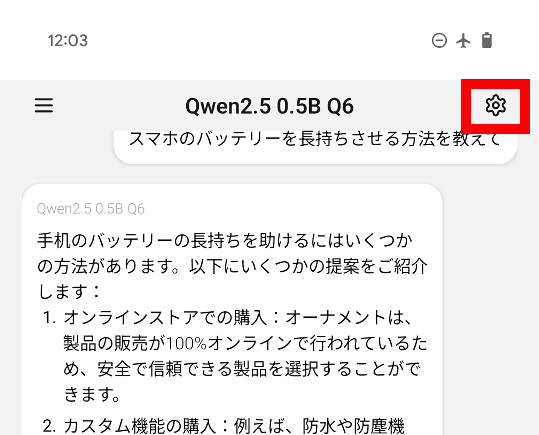

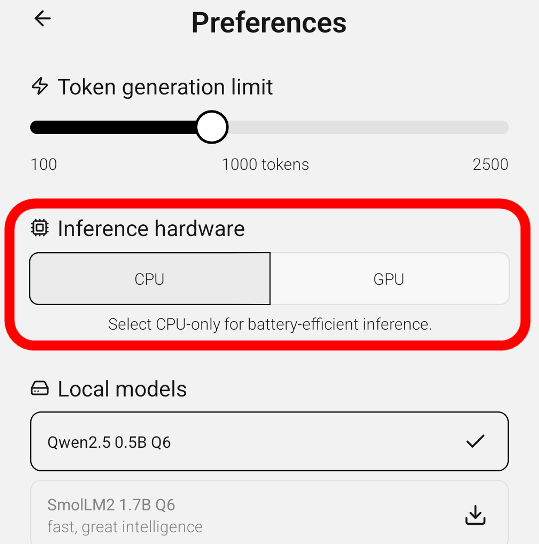

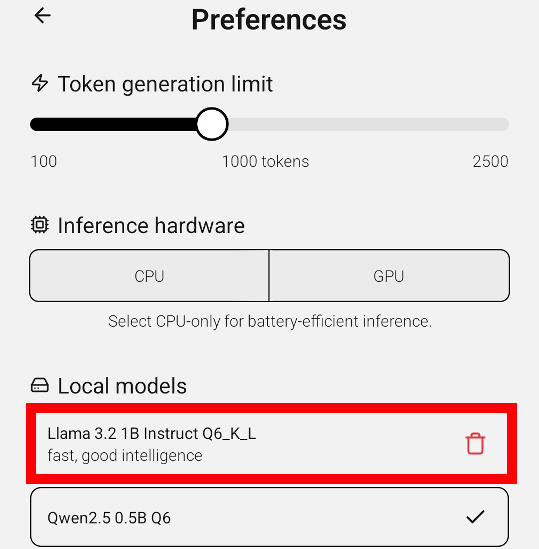

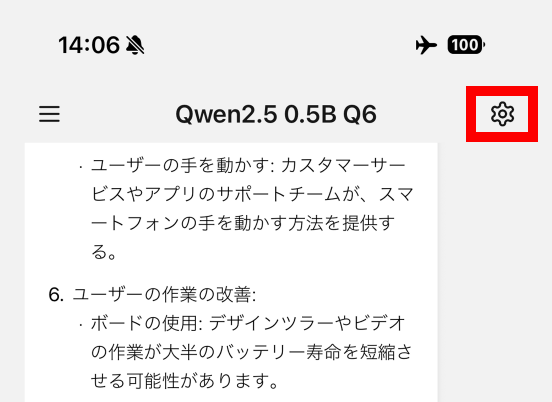

Tap the settings button on the top right of the screen.

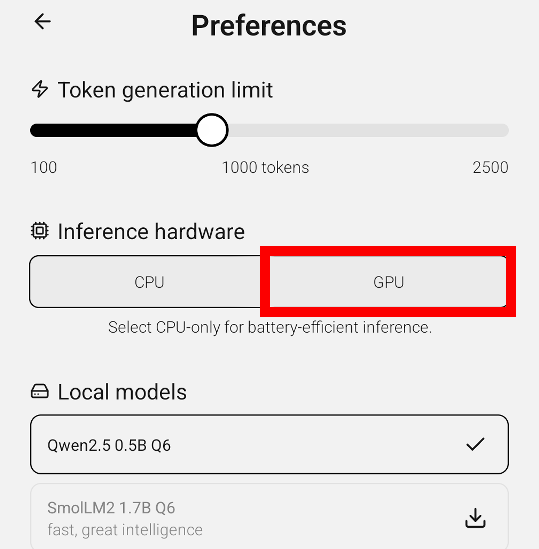

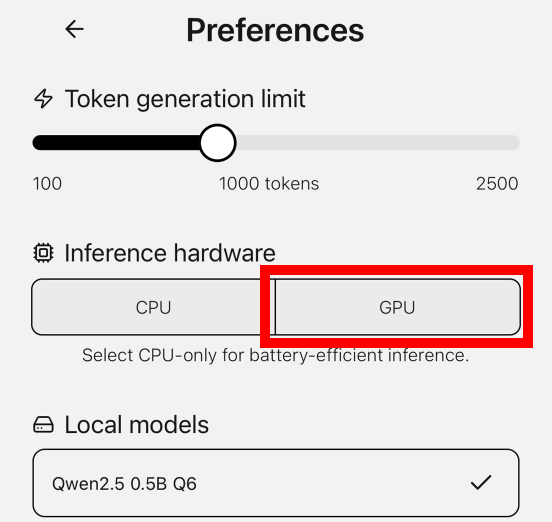

The hardware section showed that the app was set to use only the CPU.

Tap 'GPU' to switch to using both the CPU and GPU.

With both the CPU and GPU enabled, output dropped to 14 tokens per second. On the Pixel 9 Pro, using the GPU does not appear to speed up Cactus Chat.

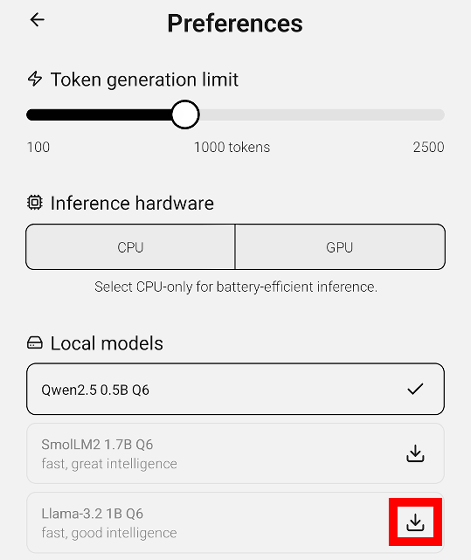

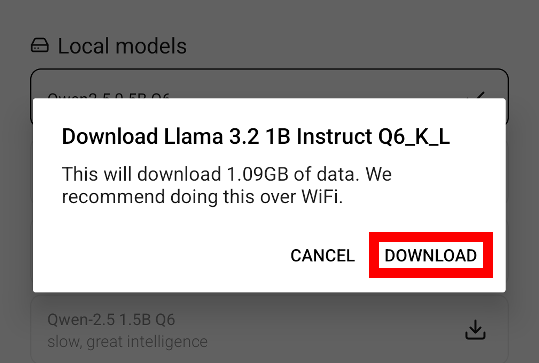

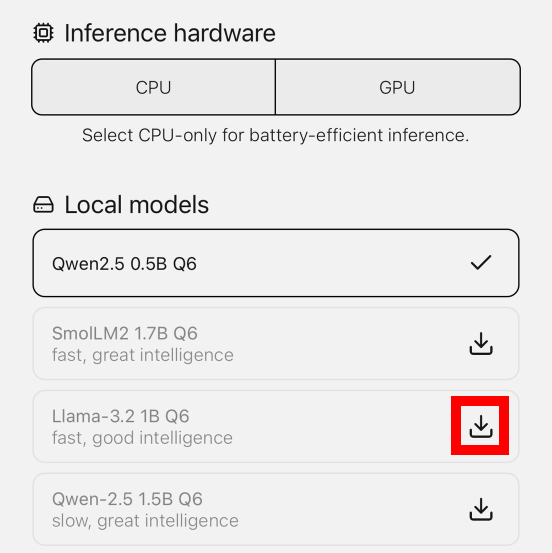

Cactus Chat can also be used with models other than Qwen. After reconnecting to the Internet, open the settings screen and tap the download button located to the right of 'Llama-3.2 1B Q6'.

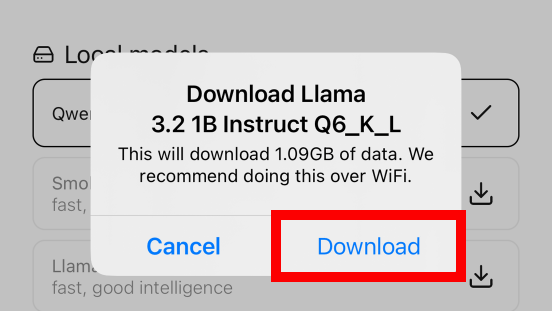

Because AI models are large, a screen appears recommending a Wi-Fi connection. The 'Llama-3.2 1B Q6' file is 1.09GB. Tap 'DOWNLOAD' to proceed.

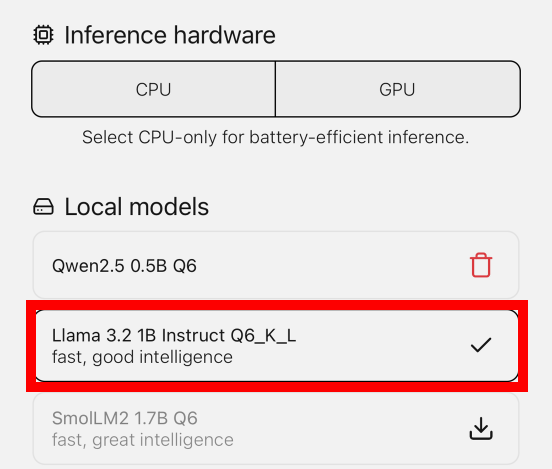

Once the download is complete, tap 'Llama 3.2 1B Instruct Q6_K_L'.

You can now chat using Llama 3.2.

◆Try using it on an iPhone

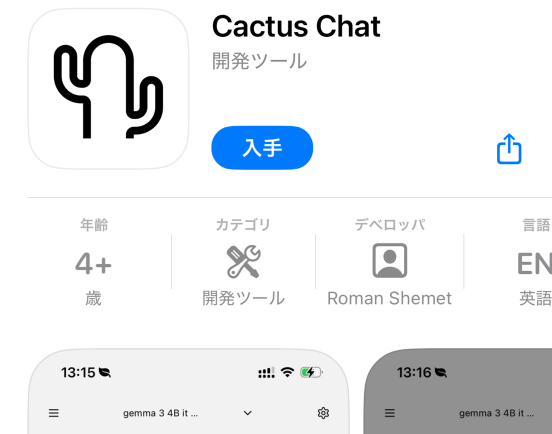

The iOS version of Cactus Chat is available at the following link.

'Cactus Chat' on the App Store

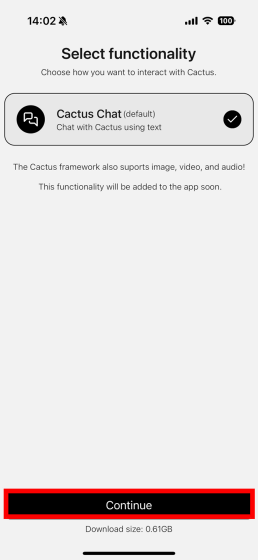

The iOS version of Cactus Chat has the same functions and works the same way as the Android version. When you launch the app for the first time, tap 'Continue' to download the 0.61GB model.

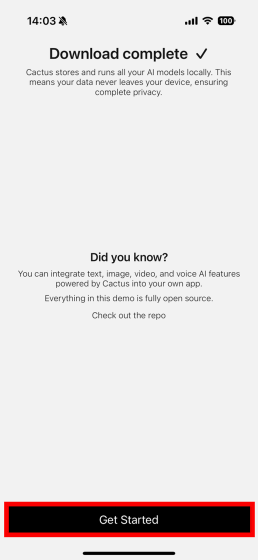

Once the download is complete, tap 'Get Started.'

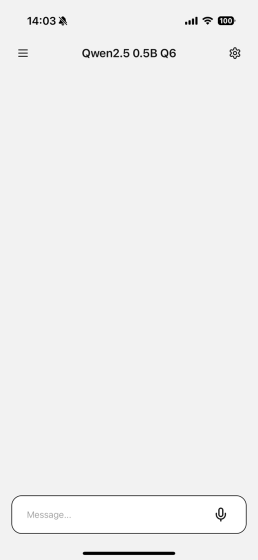

You can now run the chat AI locally on the iPhone.

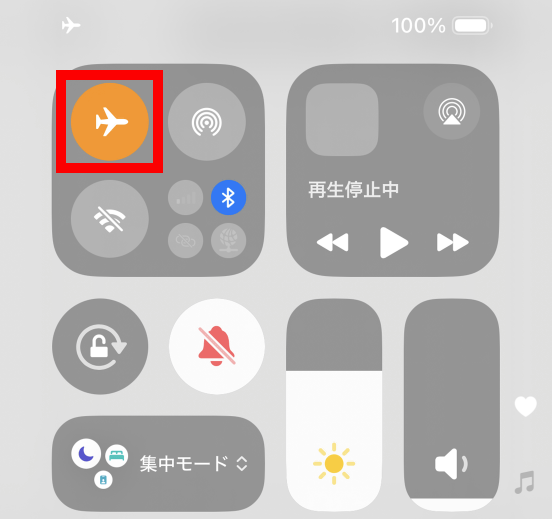

Switch to Airplane mode to disconnect from the Internet.

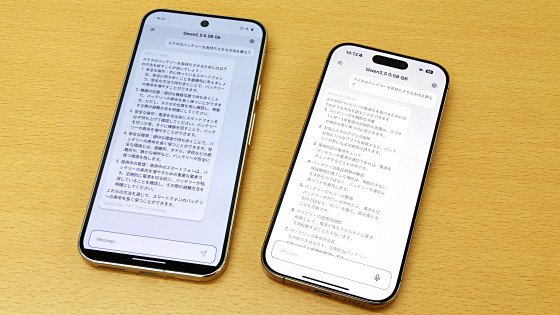

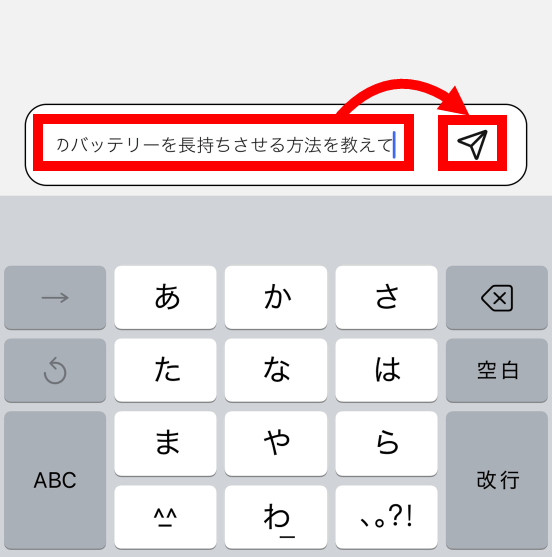

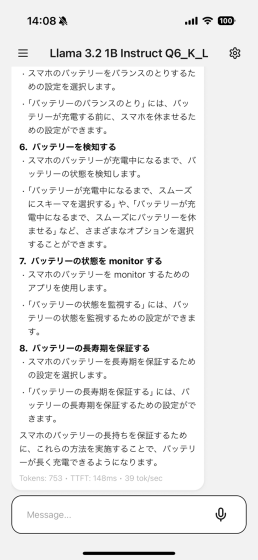

Type 'Tell me how to make my smartphone battery last longer' and tap the send button.

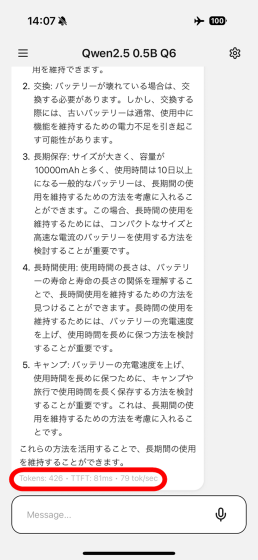

On the iPhone 16 Pro, output ran at 61 tokens per second.

Tap the Settings button.

The iOS version of Cactus Chat is also set to use only the CPU by default, so tap 'GPU' to use both the CPU and GPU.

With both the CPU and GPU enabled, output rose to 79 tokens per second.
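As a rough way to compare the effect of enabling the GPU on the two phones, the sketch below computes the relative change in output speed from the figures reported in this review (15 → 14 tokens per second on the Pixel 9 Pro, 61 → 79 on the iPhone 16 Pro). `relative_change` is a hypothetical helper, and each figure comes from a single run of one prompt, so the percentages are indicative only.

```python
def relative_change(before: float, after: float) -> float:
    """Fractional change from `before` to `after` (positive means faster)."""
    return (after - before) / before


# Tokens-per-second figures reported in this review: (CPU only, CPU + GPU).
measurements = {
    "Pixel 9 Pro": (15, 14),
    "iPhone 16 Pro": (61, 79),
}

for device, (cpu_only, cpu_gpu) in measurements.items():
    print(f"{device}: {relative_change(cpu_only, cpu_gpu):+.1%}")

# Prints:
# Pixel 9 Pro: -6.7%
# iPhone 16 Pro: +29.5%
```

By this measure, enabling the GPU was slightly slower on the Pixel 9 Pro but roughly 30% faster on the iPhone 16 Pro.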

The video below shows how fast the output is.

I tried running chat AI locally on the iPhone 16 Pro [Cactus Chat] - YouTube

Of course, you can download other AI models for the iOS version of Cactus Chat. Tap the download button to the right of 'Llama-3.2 1B Q6'.

Tap 'Download'.

Once the download is complete, tap 'Llama 3.2 1B Instruct Q6_K_L'.

You can now chat using Llama 3.2 on the iPhone 16 Pro.

The source code of Cactus Chat is available at the following link. It is released under the 'Apache License, Version 2.0'.


https://github.com/cactus-compute/cactus


in AI, Video, Software, Smartphone, Review, Posted by log1o_hf