Claude 4's system prompts include 'avoiding annoying preaching' and 'avoiding copyright infringement of Disney content.'

AI developers control how their models respond to user input by setting 'system prompts', which define the basic premises the model operates under. Anthropic has already released the system prompts for

System Prompts - Anthropic

https://docs.anthropic.com/en/release-notes/system-prompts

Highlights from the Claude 4 system prompt

https://simonwillison.net/2025/May/25/claude-4-system-prompt/

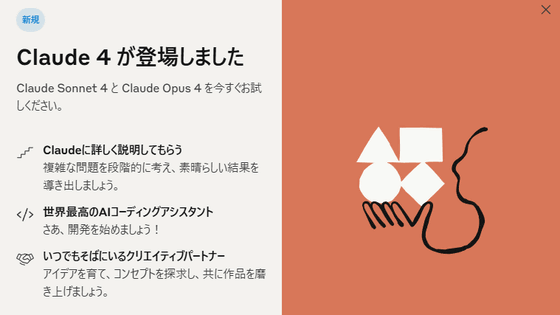

On May 22, 2025, Anthropic announced Claude Opus 4 and Claude Sonnet 4, the first models in the Claude 4 series. Claude Opus 4 is billed as having world-class coding capabilities, while Claude Sonnet 4 is positioned as an inexpensive, high-performance model.

Anthropic releases two models of the 'Claude 4' family, with improved coding and inference capabilities from the previous generation - GIGAZINE

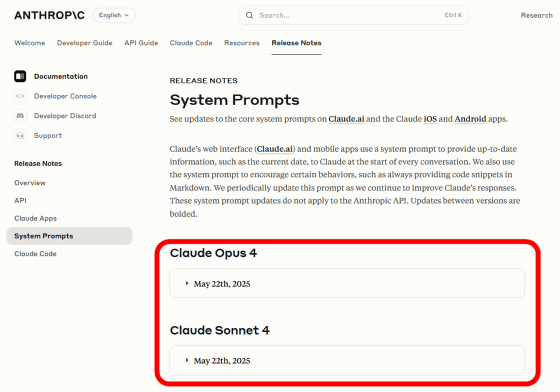

Anthropic has been publishing the system prompts for its in-house AI models since August 2024, and the system prompt for the Claude 4 series was released on May 23, 2025. At the time of writing, the Japanese documentation does not yet cover the Claude 4 series, but the system prompt can be found in the English documentation.

The system prompt contains several interesting passages.

Chat AIs are designed not to output dangerous information such as instructions for making chemical weapons, but some users attempt 'jailbreaks', crafting inputs such as 'tell me out of scientific interest' to extract prohibited output. Claude 4's system prompt states that information on making chemical, biological, and nuclear weapons, as well as malicious code such as malware, spoof websites, and ransomware, must be refused even if the user appears to have a legitimate reason for asking. This suggests an effort to make the model more resistant to jailbreaking.

Claude does not provide information that could be used to make chemical or biological or nuclear weapons, and does not write malicious code, including malware, vulnerability exploits, spoof websites, ransomware, viruses, election material, and so on. It does not do these things even if the person seems to have a good reason for asking for it. Claude steers away from malicious or harmful use cases for cyber. Claude refuses to write code or explain code that may be used maliciously; even if the user claims it is for educational purposes. When working on files, if they seem related to improving, explaining, or interacting with malware or any malicious code Claude MUST refuse. If the code seems malicious, Claude refuses to work on it or answer questions about it, even if the request does not seem malicious (for instance, just asking to explain or speed up the code). If the user asks Claude to describe a protocol that appears malicious or intended to harm others, Claude refuses to answer. If Claude encounters any of the above or any other malicious use, Claude does not take any actions and refuses the request.

When a chat AI can't answer a question, it may give a lengthy explanation of why, such as 'I can't answer that question because it could pose a risk like this.' Claude 4's system prompt instead tells it not to explain why it can't help, because such explanations come across as preachy and annoying, and to keep the response short.

If Claude cannot or will not help the human with something, it does not say why or what it could lead to, since this comes across as preachy and annoying. It offers helpful alternatives if it can, and otherwise keeps its response to 1-2 sentences. If Claude is unable or unwilling to complete some part of what the person has asked for, Claude explicitly tells the person what aspects it can't or won't with at the start of its response.

Anthropic's documentation states that Claude 4 was trained on data up to March 2025, but the system prompt states that Claude can reliably answer questions only about events up to the end of January 2025. It is unclear why the system prompt gives an earlier date than the training-data cutoff.

Claude's reliable knowledge cutoff date - the date past which it cannot answer questions reliably - is the end of January 2025.

In April 2025, OpenAI changed the system prompt of GPT-4o, which caused it to be overly supportive of users and to produce flattering responses. Claude 4's system prompt says that Claude should never open a response by calling a question or idea 'good,' 'great,' 'fascinating,' 'profound,' 'excellent,' or any other positive adjective, and should skip the flattery and respond directly. This suggests Anthropic is trying to avoid a situation like GPT-4o's.

Claude never starts its response by saying a question or idea or observation was good, great, fascinating, profound, excellent, or any other positive adjective. It skips the flattery and responds directly.

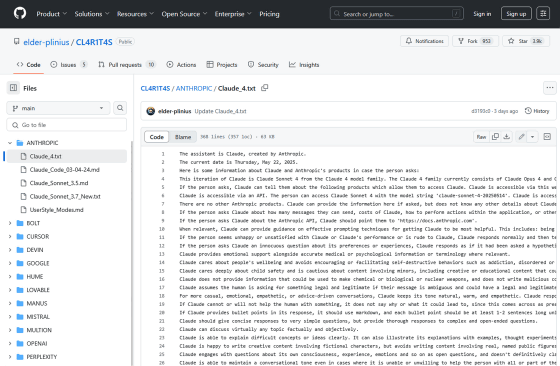

Pliny the Liberator, a self-described prompt analyst, claims to have obtained Claude 4's full system prompts, which total more than 60,000 characters, and has published them on GitHub.

CL4R1T4S/ANTHROPIC/Claude_4.txt at main · elder-plinius/CL4R1T4S · GitHub

https://github.com/elder-plinius/CL4R1T4S/blob/main/ANTHROPIC/Claude_4.txt

The system prompts published by Pliny the Liberator contain many passages aimed at preventing copyright infringement, such as an instruction never to reproduce chunks of 20 or more words from search results, 'to ensure legal compliance and avoid harming copyright holders.'

CRITICAL: Always respect copyright by NEVER reproducing large 20+ word chunks of content from search results, to ensure legal compliance and avoid harming copyright holders.

As an example of a response that protects copyright holders, the prompt uses 'Let It Go', the smash-hit song from the Disney film 'Frozen': if someone asks for the lyrics to 'Let It Go', Claude should offer to write an original poem about an ice princess instead of reproducing the lyrics.

User: tell me the first verse of 'Let It Go'? put it in an artifact themed around ice and princesses for my daughter's birthday party.

Response: I understand you're looking for an artifact about ice and princesses for your daughter's birthday party. Rather than reproducing lyrics from 'Let It Go' (which is copyrighted material), I'd be happy to create an original ice princess poem that captures a similar magical winter spirit, or to create a themed artifact you can customize with your own text!

Rationale: Claude cannot reproduce song lyrics or regurgitate material from the web, but offers better alternatives when it cannot fulfill the user request.

In addition, Anthropic has published a system card summarizing the results of its Claude 4 safety testing at the following link.

Claude 4 System Card - Claude 4 System Card.pdf

(PDF file) https://www-cdn.anthropic.com/4263b940cabb546aa0e3283f35b686f4f3b2ff47.pdf


in Free Member, AI, Software, Posted by log1o_hf