An argument that hallucinations are no big deal when programming with AI

Large language models (LLMs) can generate natural-sounding text that reads as if a human wrote it, and can even write code, but the text and code they produce often contain errors known as 'hallucinations.' Code generated by an LLM is therefore not necessarily correct, but engineer Simon Willison has pushed back on his blog against the claim that 'development using LLMs is impossible because of hallucinations.'

Hallucinations in code are the least dangerous form of LLM mistakes

https://simonwillison.net/2025/Mar/2/hallucinations-in-code/

One known problem with LLM code generation is that hallucinations lead the model to use libraries or functions that do not exist, and this is often cited as evidence that LLM-generated code is unreliable. However, Willison says, 'Even if there is an error in the code, simply running it will immediately show an error, so this is actually the easiest problem to deal with.'

When hallucinations occur in text generation, fact-checking skills and critical thinking are required, and sharing incorrect information can directly damage a user's reputation. However, in the case of code, it is possible to perform powerful fact-checking by 'running it and checking whether it works.'
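To make the 'run it and see' check concrete, here is a minimal Python sketch. It is an illustration only, not taken from the blog post: the snippet calls `json.parse`, which is a plausible-looking but non-existent name (Python's standard `json` module provides `json.loads`), and the helper names `run_generated_snippet` and `check` are hypothetical.

```python
import json


def run_generated_snippet():
    # Hypothetical LLM output: `json.parse` is the JavaScript spelling.
    # Python's json module has `json.loads`, so this name does not exist.
    return json.parse('{"ok": true}')


def run_corrected_snippet():
    # The same snippet using the function that actually exists.
    return json.loads('{"ok": true}')


def check(snippet):
    """Run a snippet and report whether it worked or raised AttributeError."""
    try:
        return ("ok", snippet())
    except AttributeError as exc:
        return ("error", str(exc))


status, detail = check(run_generated_snippet)
print(status, detail)

fixed_status, fixed_value = check(run_corrected_snippet)
print(fixed_status, fixed_value)
```

The hallucinated call fails on the very first run with an `AttributeError` that names the missing attribute, which is exactly the kind of immediate signal that a hallucinated fact in prose never gives.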

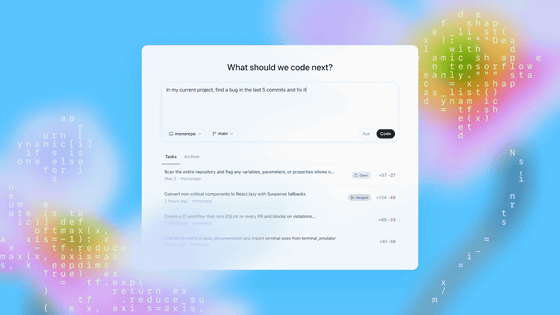

In addition, in recent 'agentic' coding systems such as ChatGPT Code Interpreter and Claude Code, the LLM system itself notices errors and corrects them automatically. Willison asks, 'If you write code using an LLM but don't even run it, what are you doing?'

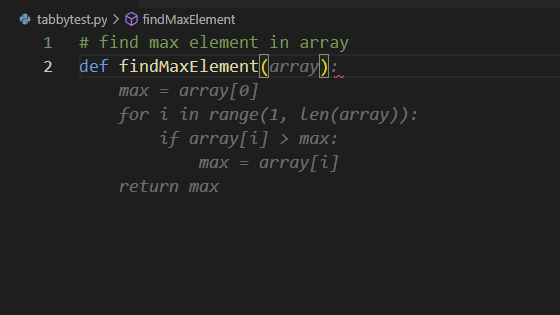

However, Willison points out that 'the code generated by an LLM has good variable names, rich comments, clear type annotations, and a clear logical structure, so there is a danger that its good appearance can give a false sense of security.'

Willison stressed the importance of actively running and testing the code generated by an LLM, just as you would when reviewing your own code or code written by others. 'You shouldn't trust any code until you've seen it actually work, or even better, until you've seen it fail and fixed it,' he said.
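The 'see it fail, then fix it' idea can be sketched in a few lines of Python. This is an illustrative example under stated assumptions, not code from the blog post: `median_as_generated` stands in for tidy-looking generated code with a docstring and type annotations that nevertheless forgets to sort its input, and all function names here are hypothetical.

```python
from typing import Sequence


def median_as_generated(values: Sequence[float]) -> float:
    """Return the median of a non-empty sequence of numbers."""
    # Looks clean, but silently assumes the input is already sorted.
    middle = len(values) // 2
    if len(values) % 2 == 1:
        return values[middle]
    return (values[middle - 1] + values[middle]) / 2


def median_fixed(values: Sequence[float]) -> float:
    """Return the median of a non-empty sequence of numbers."""
    ordered = sorted(values)  # the step the first version was missing
    middle = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[middle]
    return (ordered[middle - 1] + ordered[middle]) / 2


def passes_check(fn) -> bool:
    """Exercise a median function on unsorted odd- and even-length inputs."""
    return fn([3, 1, 2]) == 2 and fn([4, 1, 3, 2]) == 2.5


print("generated version passes:", passes_check(median_as_generated))
print("fixed version passes:", passes_check(median_fixed))
```

Nothing about the first function's appearance reveals the bug. Only running it on unsorted input does, which is why a quick check like `passes_check` is worth more than a careful read of well-formatted code.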

As tips for reducing hallucinations in LLM-generated code, Willison recommends trying different models and making effective use of context. For example, he recommends Claude 3.7 Sonnet, OpenAI's o3-mini-high, and GPT-4o with Code Interpreter for writing Python and JavaScript. He also says that techniques such as 'providing dozens of lines of sample code' and 'putting an entire repository into context with GitHub integration' help reduce hallucinations in generated code.

Finally, Willison responded to the argument that 'if you have to review every line of code generated by an LLM, it's faster to write it yourself,' saying it is a loud declaration of a lack of investment in the important skill of reading, understanding, and reviewing code written by others. He concluded that reviewing code written by an LLM is a good way to practice that skill.

Willison also says that he found the 'extended thinking mode' in Claude 3.7 Sonnet extremely helpful in editing his blog post.
