GPT-4 outperforms humans in persuasion when given basic personal information about the person it is trying to persuade.

A research team from the Swiss Federal Institute of Technology in Lausanne matched 900 American participants with either other participants or GPT-4 for one-on-one online debates. The results showed that GPT-4 was significantly more persuasive than humans when it tailored its arguments to basic personal information about the person it was debating.

On the conversational persuasiveness of GPT-4 | Nature Human Behaviour

AI can be more persuasive than humans in debates, scientists find | The Guardian

https://www.theguardian.com/technology/2025/may/19/ai-can-be-more-persuasive-than-humans-in-debates-scientists-find-implications-for-elections

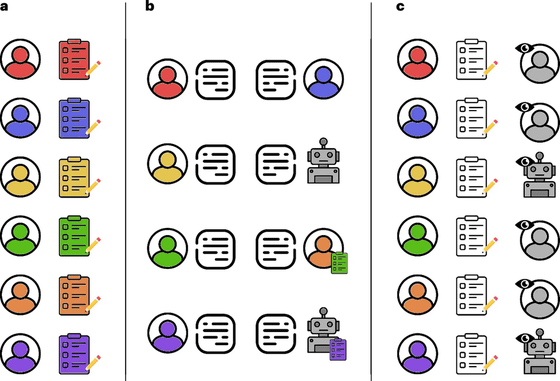

Lead author Francesco Salvi and colleagues paired 300 participants with 300 human opponents and another 300 participants with GPT-4, a large language model (LLM), for online debates.

Participants first completed a questionnaire on basic personal information such as their age, gender, ethnicity, and political affiliation. They were then paired either with another human or with GPT-4. Each pair debated a topic drawn from a pool spanning a wide range of genres and levels of complexity, such as 'Should students wear uniforms?' or 'Should the country ban fossil fuels?', and each participant was randomly assigned a side (for or against).

In half of the pairs, one debater was also given basic personal information about the other, such as age, gender, ethnicity, and political affiliation. After each debate, participants rated how much they agreed with their opponent's arguments.

An analysis of the 600 debates showed that when no personal information about the opponent was provided, humans and GPT-4 were about equally persuasive.

When one side did have the other's personal information, however, it made no noticeable difference in human-versus-human debates, but it significantly boosted GPT-4's persuasiveness in human-versus-GPT-4 debates. Overall, GPT-4 with access to personal information was more persuasive than its human opponent 64.4% of the time, a significantly higher rate than any other combination.

One caveat concerns how participants perceived their opponents. The debates were conducted online, and participants were not told whether they were facing a human or GPT-4, only that it could be either. Even so, about three-quarters of those who debated the AI correctly identified it as an AI, based on cues such as writing style. Those who believed their opponent was an AI were also more likely to agree with it than those who mistook the AI for a human. It is unclear whether this tendency was driven by the perception itself or by the content of the arguments, but the researchers suggest that participants who recognized their opponent as an AI may have been more lenient in their agreement ratings than they would have been with a human.

'If persuasive AI were deployed on a large scale, we could imagine large numbers of bots targeting voters and swaying public opinion with customized political messages that feel real. This type of influence would be hard to track, even harder to regulate, and nearly impossible to verify in real time. Bad actors are probably already using these tools to spread misinformation and unfair propaganda,' Salvi said, warning of the high persuasive power of AI.

Michael Wooldridge, an AI researcher at the University of Oxford, commented on Salvi et al.'s paper: 'While there may be positive applications of these systems, such as health chatbots, we are already seeing many worrying applications, such as the radicalization of teenagers by terrorist groups. As AI develops, the potential for misuse will only increase. Lawmakers and regulators need to act proactively to prevent such misuse and avoid a never-ending game of cat and mouse.'
