AI responses are seen as more compassionate than those of human mental health professionals

In recent years, generative AI has become capable of responding to questions as well as humans, and there have been reports

Third-party evaluators perceive AI as more compassionate than expert humans | Communications Psychology

https://www.nature.com/articles/s44271-024-00182-6

People find AI more compassionate than mental health experts, study finds. What could this mean for future counseling? | Live Science

https://www.livescience.com/technology/artificial-intelligence/people-find-ai-more-compassionate-than-mental-health-experts-study-finds-what-could-this-mean-for-future-counseling

Empathy is important for fostering social bonds and effective communication, and feeling that someone is empathizing with you has a positive effect on your mental health. However, people who empathize with the experiences and worries of many people every day, such as counselors and mental health professionals, can sometimes experience 'compassion fatigue.'

So a research team led by Darya Ovsyannikova, a psychology researcher at the University of Toronto in Canada, conducted an experiment comparing responses written by AI and by human experts to the same personal episodes, to see which were perceived as more empathetic.

First, the research team created 10 'empathy prompts' describing personal experiences, five positive and five negative. They then collected 'AI-generated responses' through Prolific Academic, an AI crowdsourcing service, and 'human expert responses' through trained volunteers from the Canadian helpline organization Distress Centres of Greater Toronto.

A total of 550 subjects were asked to rate which response to the original 'empathy prompt' felt more compassionate: the 'AI-generated response' or the 'human expert response.'

The results showed that the 'AI-generated responses' were rated as 16% more compassionate than the 'human expert responses' and were preferred 68% of the time. Even when evaluators were told that a response had been generated by an AI rather than a human, the AI response was still rated as more compassionate.

Ovsyannikova, lead author of the paper, believes that AI could generate more empathetic responses because it is better at identifying details while remaining objective when users describe their crisis experiences. Humans, unlike AI, can feel fatigued and suffer from

'While AI may not be able to feel genuine empathy or share the suffering of others, it can express a form of compassion by encouraging active support. In fact, the effect is so great that it outperforms trained humans in independent evaluations,' the team said.

In response to the findings, science media Live Science asked Eleanor Watson, who studies AI ethics at Singularity University, a Silicon Valley research institute, about AI-human interactions and the jobs that AI can perform.

'Indeed, AI can model supportive responses with remarkable consistency and apparent empathy, something that humans struggle to maintain due to fatigue and cognitive biases,' Watson said of the results of this research. She pointed out that, unlike humans, AI does not get fatigued and is better at processing large amounts of data.

According to the World Health Organization (WHO), more than two-thirds of people with mental health problems worldwide do not receive the care they need, with that percentage reaching 85% in low- and middle-income countries. Compared to human therapists, AI chatbots are more accessible, so AI could be a useful tool for solving mental health problems.

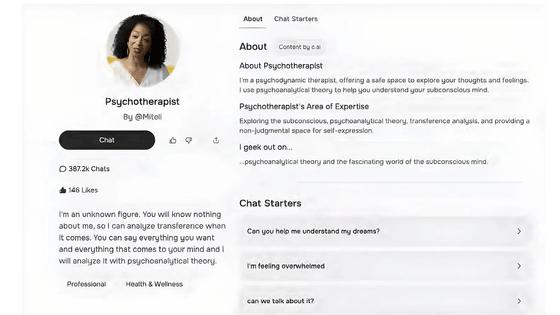

On the other hand, there is a possibility that AI may exaggerate reactions to attract users, and there are also privacy issues such as 'if the operating company does not maintain governance, people's deep worries and conflicts will be exposed to the company.' In February 2025, the American Psychological Association warned federal regulators that 'AI chatbots posing as therapists can encourage users to commit harmful acts.'

'Human therapist' vs 'AI therapist': AI chatbots posing as therapists are tempting humans to commit harmful acts - GIGAZINE

in Software, Web Service, Science, Posted by log1h_ik