'AI Insurance' launched to compensate companies for losses caused by errors in AI chatbots

As AI has advanced, many companies have begun building it into their products and services. There have already been costly failures, however: the developer of the AI code editor 'Cursor' saw its reputation badly damaged when its customer support AI went out of control, and a startup lost significant revenue when a bug in AI-generated code left customers unable to subscribe to its service. AI 'hallucinations' have become a business risk that cannot be ignored. It has been reported that an insurance product covering such risks has been launched through Lloyd's of London, the long-established British insurance market.

Insurers launch cover for losses caused by AI chatbot errors

https://www.ft.com/content/1d35759f-f2a9-46c4-904b-4a78ccc027df

The newly announced AI insurance was developed by Armilla, a startup backed by Y Combinator. It covers the cost of court claims against a company that is sued by a customer or other third party harmed by an underperforming AI tool. The policies are underwritten by several Lloyd's insurers, and for companies that sign up, the insurance pays out to cover damages and legal costs incurred because of AI errors.

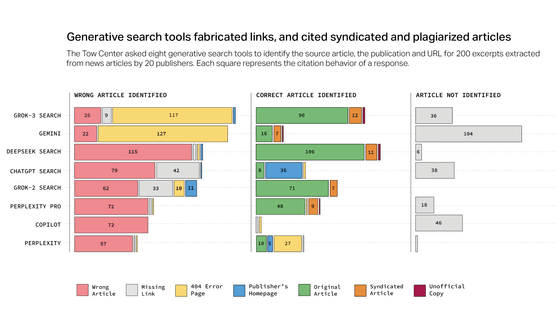

This type of insurance has emerged amid concern that serious mistakes by AI tools, including customer service AI, could result in huge losses.

For example, global financial services brand Virgin Money apologized in January 2025 after its chatbot berated a customer who asked about Virgin Money's services, telling her, 'How dare you call me a Virgin.'

And in a lawsuit over Air Canada's chatbot creating fake discount offers, a court ordered the airline to pay damages.

Court orders Air Canada to pay damages after the company said it was not responsible for chatbot erroneous answers - GIGAZINE

Armilla CEO Karthik Ramakrishnan said the AI insurance was created for companies that are hesitant to adopt AI services due to concerns about cases like these.

Until now, some insurers have covered AI-related losses under their general technology errors and omissions policies, but these policies often limit payouts for AI-related losses to half the amount they would normally cover.

However, a loss caused by AI is not covered automatically. For the policy to apply, the insurer must determine that the AI performed below initial expectations. For example, a payout could be triggered if a chatbot that initially gave correct information 95% of the time later dropped to 85% accuracy.
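The trigger described above comes down to comparing the accuracy a model showed when it was first assessed with the accuracy it shows later. The following is a minimal sketch of that comparison, not Armilla's actual assessment method. The function names and the `tolerance` parameter are hypothetical and exist only for illustration.

```python
def accuracy(correct: int, total: int) -> float:
    """Return the fraction of answers that were correct."""
    if total <= 0:
        raise ValueError("total must be a positive number of answers")
    return correct / total


def fell_short(baseline: float, observed: float, tolerance: float = 0.0) -> bool:
    """Return True if observed accuracy is below the baseline by more than tolerance.

    `tolerance` is an assumed allowance for normal fluctuation; the article
    does not say whether any such margin is part of the real policy.
    """
    return (baseline - observed) > tolerance


# The article's example: a chatbot assessed at 95% accuracy that later
# answers correctly only 85% of the time.
baseline = accuracy(95, 100)
observed = accuracy(85, 100)

if fell_short(baseline, observed):
    print("Performance fell short of the initial expectation")
else:
    print("Performance is within the initial expectation")
```

In this sketch, a model that matches or beats its baseline never counts as falling short, which mirrors the article's point that a loss caused by AI is not enough on its own.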

'We evaluate the AI model, understand its potential for degradation, and then we compensate if the model degrades,' said Ramakrishnan. Tom Graham, head of partnerships at Chaucer, one of the insurers underwriting Armilla's policies, said, 'Like any insurer, we will be very selective about who we insure,' emphasizing that the company will not insure AI systems that it judges to be too error-prone.

in AI, Web Service, Posted by log1l_ks