Xiaomi announces 'MiMo', a reasoning AI model comparable to OpenAI's o1-mini, trained from scratch rather than derived from an existing model

Xiaomi has released its own reasoning model, 'MiMo', as open source. The model is said to compete with OpenAI's closed-source o1-mini and with Alibaba's 32B-parameter QwQ-32B-Preview.

GitHub - XiaomiMiMo/MiMo: MiMo: Unlocking the Reasoning Potential of Language Model – From Pretraining to Posttraining

Xiaomi enters AI World with MiMo 7B: Monster that beats OpenAI - XiaomiTime

https://xiaomitime.com/xiaomi-enters-ai-world-with-mimo-7b-monster-that-beats-openai-42394/

According to Xiaomi, models built on top of an existing large model are limited by the capabilities of that base model. MiMo was therefore trained from scratch to maximize its own reasoning capabilities.

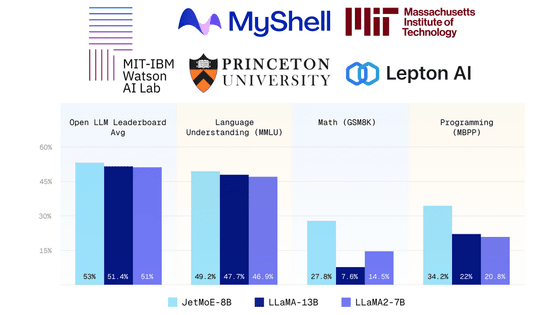

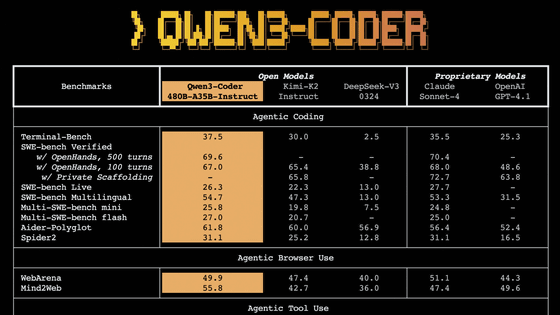

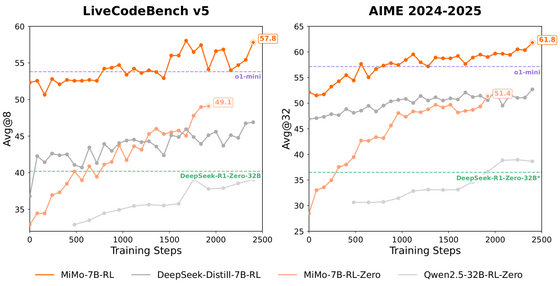

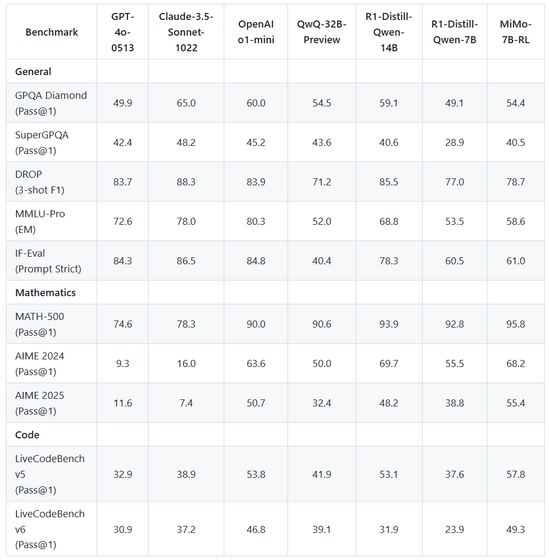

The base model is called 'MiMo-7B', and Xiaomi has also developed derived models such as MiMo-7B-RL, which was further trained with reinforcement learning (RL). MiMo-7B-RL performed strongly on both mathematical reasoning and code reasoning tasks, with results comparable to models such as DeepSeek-Distill-7B-RL and Qwen2.5-32B-RL-Zero.

On some benchmarks, it even outperforms OpenAI o1-mini and QwQ-32B-Preview.

The models are published on Hugging Face.

XiaomiMiMo/MiMo-7B-Base · Hugging Face

XiaomiMiMo/MiMo-7B-RL-Zero · Hugging Face

in Software, Posted by log1p_kr