Chinese IT giant Alibaba releases 'Qwen2.5-Max,' an AI model with higher performance than GPT-4o and DeepSeek-V3

Qwen2.5-Max: Exploring the Intelligence of Large-scale MoE Model | Qwen

https://qwenlm.github.io/blog/qwen2.5-max/

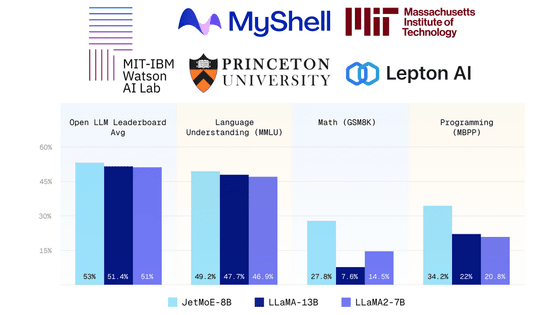

Qwen2.5-Max is a Mixture-of-Experts (MoE) model built by applying supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) to a base model pre-trained on more than 20 trillion tokens of training data. Its parameter count, which indicates the scale of the model, has reached 100 billion.

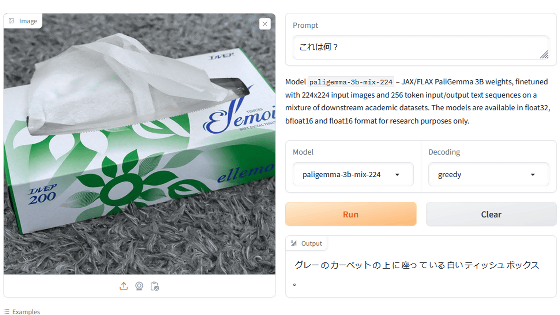

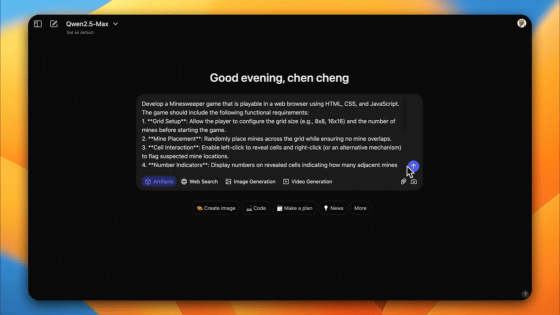

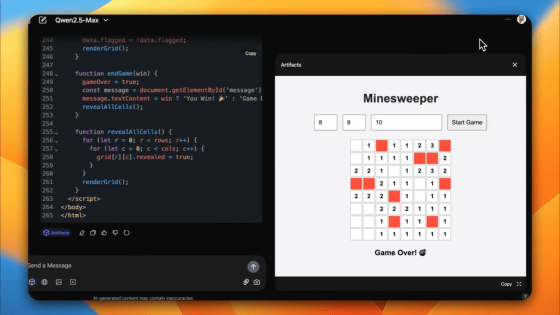

Below is an example of what Qwen2.5-Max can do. First, Qwen2.5-Max is given a request to 'create a Minesweeper web application,' along with detailed conditions.

In response, it output code for a Minesweeper game that can actually be played.

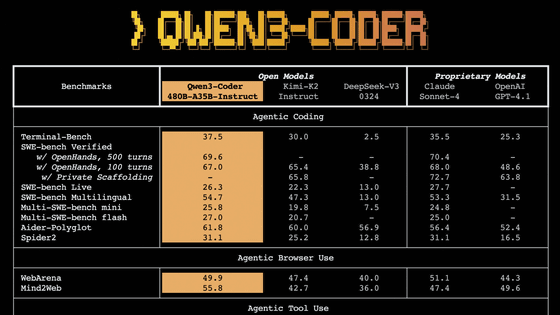

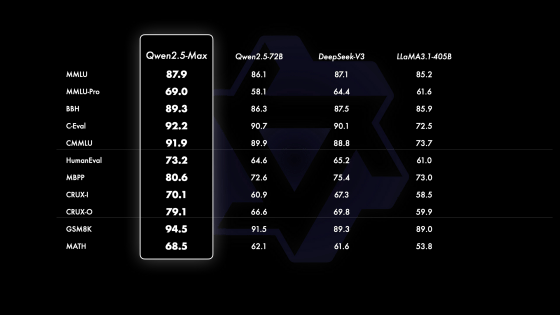

Below is a table summarizing the various benchmark results for 'Qwen2.5-Max', 'Qwen2.5-72B', 'DeepSeek-V3', and 'Llama 3.1-405B'. Qwen2.5-Max recorded the highest score in all tests.

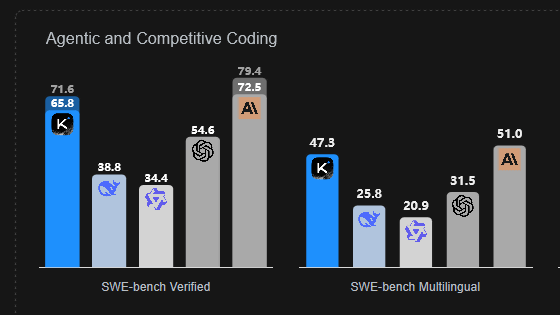

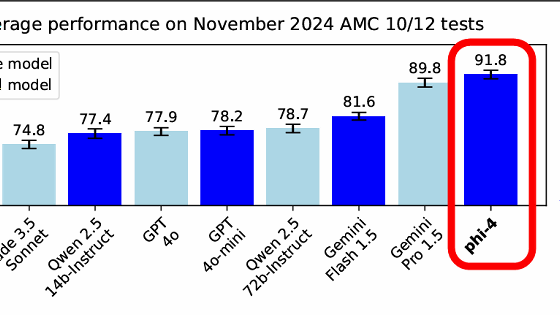

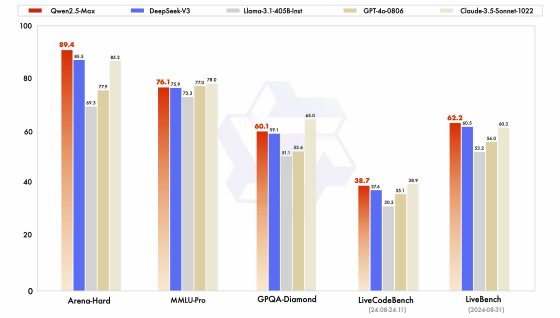

The graph below summarizes the benchmark results for 'Qwen2.5-Max (red),' 'DeepSeek-V3 (blue),' 'Llama 3.1-405B-Instruct (gray),' 'GPT-4o 0806 (dark beige),' and 'Claude 3.5 Sonnet 1022 (light beige).' Qwen2.5-Max outperforms GPT-4o and DeepSeek-V3 in multiple tests.

Qwen2.5-Max is available via Alibaba Cloud's API. It can also be used through Qwen Chat, a chat AI provided by Qwen.
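For readers who want to try the API, the following is a minimal sketch of how a request might be built, assuming the service accepts OpenAI-style chat completion requests. The endpoint URL, the model name `qwen-max-2025-01-25`, and the `DASHSCOPE_API_KEY` environment variable are assumptions not stated in this article, and the helper names `build_chat_request` and `ask` are hypothetical; check Alibaba Cloud's documentation for the actual values.

```python
import json
import os
import urllib.request

# Assumed values, not confirmed by this article: verify against Alibaba Cloud's docs.
BASE_URL = "https://dashscope.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen-max-2025-01-25"


def build_chat_request(prompt, api_key, base_url=BASE_URL, model=MODEL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt):
    """Send the prompt and return the reply text (requires network access and a key)."""
    api_key = os.environ["DASHSCOPE_API_KEY"]  # hypothetical variable name
    request = build_chat_request(prompt, api_key)
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # Assumes the OpenAI-style response layout: choices -> message -> content.
    return body["choices"][0]["message"]["content"]
```

With a valid key set, calling `ask("Create a Minesweeper web application.")` would send a prompt similar to the one in the example above. Building the request separately from sending it keeps the payload easy to inspect before any network call is made.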
