Microsoft announces small-scale reasoning models 'Phi-4-reasoning', 'Phi-4-reasoning-plus' and 'Phi-4-mini-reasoning'

Microsoft has announced the small-scale reasoning models 'Phi-4-reasoning', 'Phi-4-reasoning-plus', and 'Phi-4-mini-reasoning'. Phi-4-reasoning explicitly strengthens reasoning ability by applying supervised fine-tuning (SFT) to the small language model Phi-4.

One year of Phi: Small language models making big leaps in AI | Microsoft Azure Blog

https://azure.microsoft.com/en-us/blog/one-year-of-phi-small-language-models-making-big-leaps-in-ai/

Phi-4-reasoning Technical Report - Microsoft Research

https://www.microsoft.com/en-us/research/publication/phi-4-reasoning-technical-report/

Phi-4 is a small language model with 14 billion (14B) parameters released in December 2024.

Microsoft releases 'Phi-4', an AI model that is lightweight but has overwhelmingly superior mathematical performance to GPT-4o - GIGAZINE

Phi-4-reasoning, announced this time, is a 14B-parameter open-weight reasoning model built on Phi-4 as its base. To explicitly improve reasoning ability, it undergoes SFT on 'carefully selected prompts with appropriate complexity and diversity,' 'high-quality answers containing long reasoning processes generated using o3-mini,' and 'more than 1.4 million prompts and responses across a wide range of fields, including mathematics and coding.'

Phi-4-reasoning-plus focuses on mathematical reasoning: it applies reinforcement learning to Phi-4-reasoning using approximately 6,400 mathematical problems. This reinforcement learning phase uses an outcome-based reward function to further improve the model's ability to produce correct answers to mathematical problems. Phi-4-reasoning-plus generates output about 1.5 times longer than Phi-4-reasoning, including more reasoning steps to reach more accurate answers, but it also consumes more computational resources at inference time.
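As a rough illustration of what an outcome-based reward means, the sketch below scores a response only by whether its final answer matches the reference, ignoring the intermediate reasoning. The function names, the `\boxed{...}` answer convention, and the 1.0/0.0 reward values are assumptions made for illustration; they are not taken from Microsoft's technical report.

```python
import re
from typing import Optional

# Assumed convention: the final answer appears as \boxed{...} in the response.
_BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_final_answer(response: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in the response, if any."""
    matches = _BOXED.findall(response)
    return matches[-1].strip() if matches else None


def outcome_reward(response: str, reference: str) -> float:
    """Reward depends only on the final answer, not on the reasoning steps."""
    answer = extract_final_answer(response)
    if answer is None:
        return 0.0  # no parseable final answer
    return 1.0 if answer == reference.strip() else 0.0
```

A real training setup would likely need more robust answer matching (for example, treating `0.5` and `1/2` as equal); exact string comparison is used here only to keep the sketch short.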

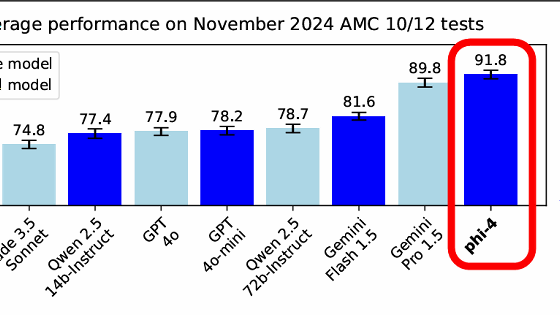

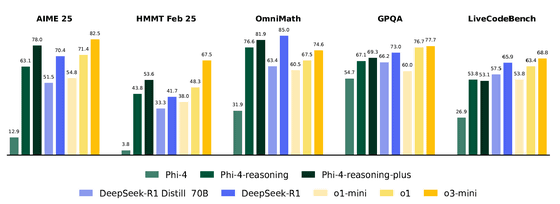

In the benchmark results, Phi-4-reasoning (green) and Phi-4-reasoning-plus (dark green) scored higher than Phi-4. They also outperformed OpenAI o1-mini on MATH-500 and GPQA Diamond. These benchmarks measure performance in mathematics and science, and the results demonstrate the strong reasoning capabilities of Phi-4-reasoning and Phi-4-reasoning-plus.

Phi-4-mini-reasoning is designed to meet the demand for more compact reasoning models, and is relatively lightweight at 3.8 billion (3.8B) parameters. It shares the same architecture as Phi-4-mini and is fine-tuned on content generated by DeepSeek-R1 and other sources. Microsoft says it is intended for applications that require advanced mathematical reasoning in memory- and compute-constrained environments, making it suitable for educational applications, embedded apps, and edge or mobile systems.
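To give a sense of why parameter count matters in memory-constrained environments, the following back-of-the-envelope sketch estimates the memory needed just to hold the weights. The parameter counts come from the article; the precision formats and the helper name `weight_memory_gib` are assumptions for illustration, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights only, in GiB."""
    return num_params * bytes_per_param / 2**30


# Parameter counts as stated in the article.
MODELS = {"Phi-4-mini-reasoning": 3.8e9, "Phi-4-reasoning": 14e9}
# Assumed storage formats (bytes per parameter); not specified in the article.
PRECISIONS = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}

for name, params in MODELS.items():
    for label, nbytes in PRECISIONS.items():
        gib = weight_memory_gib(params, nbytes)
        print(f"{name:22s} {label:10s} ~{gib:.1f} GiB")
```

Under these assumptions the 3.8B model needs roughly a quarter of the weight memory of the 14B model at any given precision, which is the gap that matters on edge and mobile hardware.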

Microsoft also touts the safety of Phi-4-reasoning and says it adheres to Microsoft's 'Principles for Responsible AI.'

Phi-4-reasoning, Phi-4-reasoning-plus, and Phi-4-mini-reasoning are available on the AI platform Hugging Face. Phi-4-reasoning and Phi-4-mini-reasoning are also available on Azure AI Foundry, and Phi-4-reasoning-plus will be introduced to Azure AI Foundry soon.

microsoft/Phi-4-reasoning · Hugging Face

https://huggingface.co/microsoft/Phi-4-reasoning

microsoft/Phi-4-reasoning-plus · Hugging Face

https://huggingface.co/microsoft/Phi-4-reasoning-plus

microsoft/Phi-4-mini-reasoning · Hugging Face

https://huggingface.co/microsoft/Phi-4-mini-reasoning
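For readers who want to try one of these models, here is a minimal sketch of loading it from Hugging Face with the `transformers` library. It assumes `transformers`, `torch`, and `accelerate` are installed and that the machine has enough memory for the weights; the helper names, the prompt, and the generation settings are illustrative choices, not recommendations from Microsoft's model card.

```python
MODEL_ID = "microsoft/Phi-4-mini-reasoning"  # repository name from the links above


def build_messages(question: str) -> list[dict[str, str]]:
    """Wrap a question in the message format expected by chat templates."""
    return [{"role": "user", "content": question}]


def generate_answer(question: str, max_new_tokens: int = 1024) -> str:
    """Download the model weights (several GB) and generate a response."""
    # Imported lazily so build_messages() works without these packages.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",
        device_map="auto",  # requires the `accelerate` package
    )
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt.
    prompt_len = inputs["input_ids"].shape[-1]
    return tokenizer.decode(outputs[0][prompt_len:], skip_special_tokens=True)
```

Calling `generate_answer("What is the sum of the first 100 positive integers?")` would trigger the download and return the model's response, including its reasoning. Swapping `MODEL_ID` for one of the 14B repositories should work the same way but needs considerably more memory.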

The Phi-4-reasoning and Phi-4-mini-reasoning models will be integrated into Copilot+ PCs as part of Phi Silica, an NPU-optimized version. Phi Silica is used to run the AI features of Copilot+ PCs locally, and Microsoft says the introduction of Phi-4-reasoning will enable faster responses and more power-efficient token processing.

in Software, Posted by log1i_yk