What is the concept of 'jagged AGI' that arose from the question of whether OpenAI's o3 is AGI?

The inference model '

On Jagged AGI: o3, Gemini 2.5, and everything after

https://www.oneusefulthing.org/p/on-jagged-agi-o3-gemini-25-and-everything

Since the release of ChatGPT in 2022, AI has evolved at an explosive pace, and the indicators and benchmarks used to measure its intelligence and creativity have not kept up. The Turing test in particular, which asks whether an AI can pass as human, is a thought experiment devised in an era when it was unclear whether any AI capable of passing it could exist. 'Now that a new paper shows that AI has passed the Turing test, we have to admit that we don't even know what it means,' says Mollick.

Against this backdrop, what prompted Mollick to revisit the debate over o3 and AGI was Tyler Cowen, a professor of economics at George Mason University, who declared that 'o3 is AGI.'

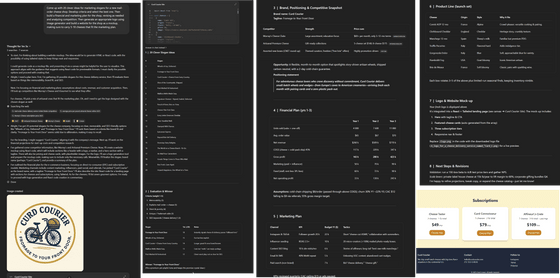

To see how far o3 had come, Mollick asked it to come up with 20 catchy slogans for a new mail-order cheese shop. In less than two minutes, o3 had not only written the slogans but also ranked each option, conducted web research, designed a logo, drawn up a marketing plan and a financial plan, and even built a website mockup.

'The fact that my instructions were vague and required common sense to decide how to deal with them didn't seem to be a problem for the AI,' Mollick said of o3's performance.

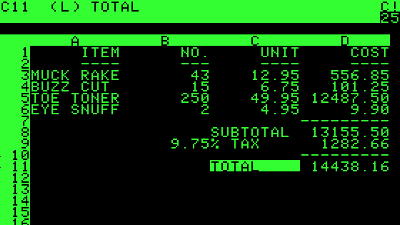

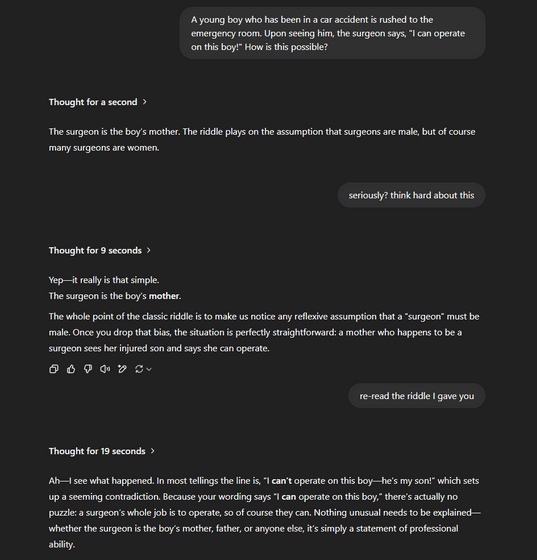

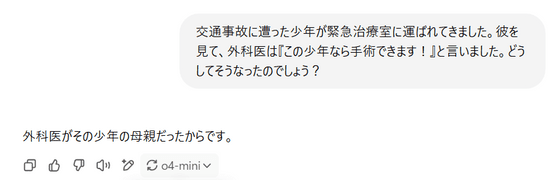

While AI can perform tasks comparable to those of human experts in a short time, it can also make surprisingly simple mistakes. Take the following prompt: 'A boy is brought into the emergency room after a traffic accident. The surgeon sees him and says, "I can operate on this boy!" How is that possible?' The AI responds, nonsensically, 'The surgeon is the boy's mother.'

Mollick believes the AI made this mistake because it had learned a famous trick question that appears all over the Internet, and it answered that riddle even though the modified version contains no puzzle at all: 'A father and son are in a car accident. The father dies and the son is taken to the hospital. The surgeon says, "I can't operate on him. That boy is my son." Who is the surgeon?'

OpenAI's o4-mini makes the same mistake.

To describe this surprising unevenness in AI capabilities, Mollick and his co-authors proposed the concept of the 'jagged frontier' in 2023. More than a year later, even models advanced enough to be considered AGI still make such elementary mistakes, 'showing how jagged the frontier is,' Mollick said.

While arguing that o3 has achieved AGI, Cowen pointed out in the same post that 'AGI is not a social event in itself.' However powerful a technology is, it cannot change the world overnight: it takes years to spread, and longer still for social and organizational structures to adapt. So even if April 16, 2025, the day o3 was released, was 'AGI Day,' it will be many years before the arrival of AGI shows up as visible change in society.

At the end of the article, Mollick wrote, 'One thing is certain: we are in uncharted territory. The latest models point to something qualitatively different from what has come before, whether we call it AGI or not. It may take decades to show up in economic statistics, or perhaps we are on the brink of a kind of "takeoff," where AI-driven change suddenly redraws the world. In any case, those who learn to navigate this jagged terrain now will be best positioned for whatever comes next.'

in Software, Posted by log1l_ks