'Mercury Coder' is a diffusion language model that pulls words out of noise to generate code at blazing speed

AI development company Inception Labs

https://www.inceptionlabs.ai/news

We are excited to introduce Mercury, the first commercial-grade diffusion large language model (dLLM)! dLLMs push the frontier of intelligence and speed with parallel, coarse-to-fine text generation. pic.twitter.com/HfjDdoSvIC

— Inception Labs (@InceptionAILabs) February 26, 2025

New AI text diffusion models break speed barriers by pulling words from noise - Ars Technica

https://arstechnica.com/ai/2025/02/new-ai-text-diffusion-models-break-speed-barriers-by-pulling-words-from-noise/

You can try Mercury Coder on Hugging Face or on Inception's demo site. This time, we used the Inception demo site.

Mercury Coder

https://chat.inceptionlabs.ai/c/365afbf5-d152-44ef-a8f4-66215ace1a38

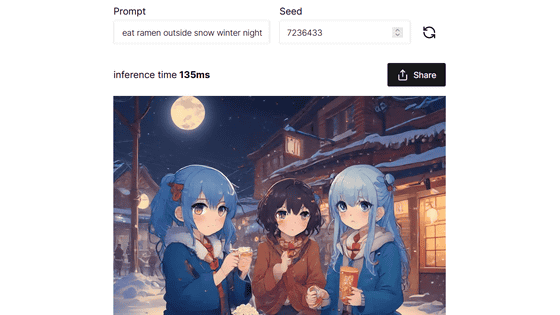

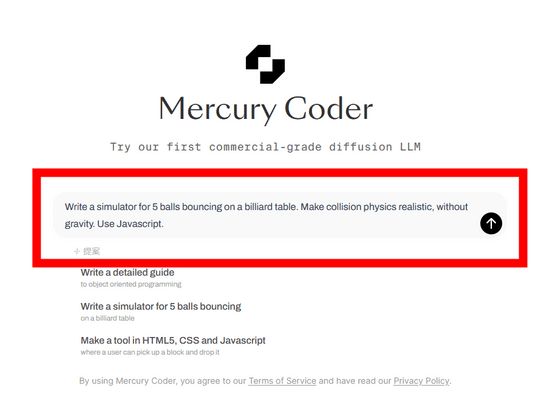

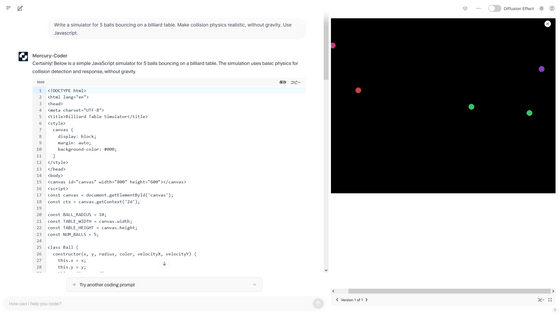

Once you have opened the site above, type your prompt into the text box. This time, I entered 'Write a simulator for 5 balls bouncing on a billiard table. Make collision physics realistic, without gravity. Use Javascript.' When you have finished typing, click the up-arrow button '↑'.

The code was generated in just a few seconds and the simulation was displayed on the right.

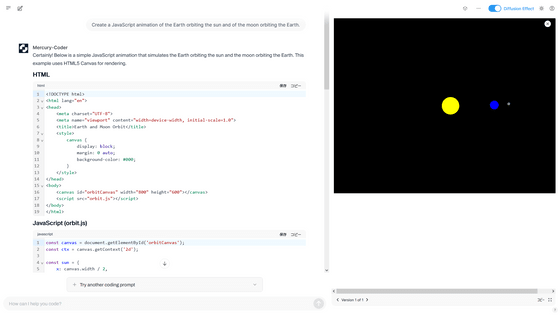

Next, I asked it to 'Create a JavaScript animation of the Earth orbiting the sun and of the moon orbiting the Earth.'

In a flash, the code I requested was generated.

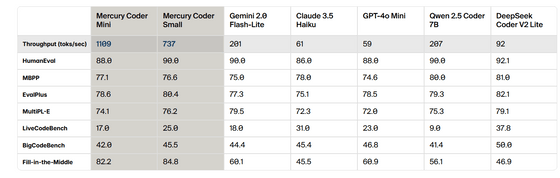

According to Inception, a typical large language model generates text autoregressively, one token at a time from left to right, so each piece of text cannot be generated until the text before it has been. Mercury Coder instead generates text 'coarse to fine': starting from pure noise, it runs a series of denoising steps that gradually pull words out of the noise, refining many tokens in parallel, which lets it generate text quickly. Below is a graph comparing output tokens per second for typical large language models and two Mercury Coder models. Compared with Qwen2.5 Coder, Gemini 2.0, Llama 3.1, GPT-4o mini and others, Mercury Coder delivers markedly higher throughput.
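
To make the difference in step counts concrete, here is a toy sketch. It is not Inception's method, which the article does not describe in detail; it assumes a masked-diffusion style of decoding, one common way to apply diffusion to text, where "pure noise" is a sequence of [MASK] tokens and each denoising step predicts every masked position in parallel and commits the most confident guesses. The `toy_model` function is a hypothetical stand-in that simply knows the target sentence and returns made-up confidences, so only the control flow and the number of sequential model calls are meaningful.

```python
MASK = "[MASK]"

# Hypothetical target output; a real model would not know this in advance.
TARGET = "def add ( a , b ) : return a + b".split()


def toy_model(seq: list[str]) -> list[tuple[str, float]]:
    """Hypothetical stand-in for a trained network: predicts a token and a
    made-up confidence for every position in a single parallel pass."""
    n = len(seq)
    return [(TARGET[i], 1.0 / (1 + (i * 7) % n)) for i in range(n)]


def autoregressive_decode(n: int) -> tuple[list[str], int]:
    """Left to right: one sequential model call per token."""
    seq: list[str] = []
    calls = 0
    for i in range(n):
        calls += 1
        # Pad the unseen suffix with masks only so toy_model can be reused.
        padded = seq + [MASK] * (n - len(seq))
        seq.append(toy_model(padded)[i][0])
    return seq, calls


def diffusion_decode(n: int, steps: int) -> tuple[list[str], int]:
    """Coarse to fine: start fully masked and, at each step, unmask the
    most confident share of the remaining masked positions."""
    seq = [MASK] * n
    calls = 0
    for step in range(steps):
        masked = [i for i, t in enumerate(seq) if t == MASK]
        if not masked:
            break
        calls += 1
        preds = toy_model(seq)  # one parallel pass over all positions
        k = -(-len(masked) // (steps - step))  # ceiling division
        best = sorted(masked, key=lambda i: preds[i][1], reverse=True)[:k]
        for i in best:
            seq[i] = preds[i][0]
    return seq, calls


n = len(TARGET)
_, ar_calls = autoregressive_decode(n)
df_seq, df_calls = diffusion_decode(n, steps=4)
print(ar_calls, df_calls)  # 12 4
print(" ".join(df_seq))    # def add ( a , b ) : return a + b
```

The sketch only counts sequential model calls: 12 for left-to-right decoding versus 4 for the diffusion-style loop. It does not model the cost of each call, how many steps a real dLLM needs, or how fewer steps affect output quality.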

Inception says, 'Mercury Coder is 5 to 10 times faster than typical large language models, while providing high-quality responses at low cost.' Below are the results of entering the same prompt into Claude, ChatGPT, and Mercury Coder and measuring how long each took to generate the code. Mercury Coder took just 6 seconds, while Claude and ChatGPT took 28 and 36 seconds, respectively.
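
As a quick check on those figures, the short sketch below turns the quoted timings into ratios. The numbers are the ones from Inception's demo quoted above, not independent measurements, and `speedups` is a hypothetical helper written for this illustration.

```python
def speedups(timings: dict[str, float], reference: str) -> dict[str, float]:
    """Return how many times longer each model took than the reference model."""
    ref = timings[reference]
    return {name: t / ref for name, t in timings.items() if name != reference}


# Seconds to generate code for the same prompt, as quoted in the article.
quoted = {"Mercury Coder": 6.0, "Claude": 28.0, "ChatGPT": 36.0}

for name, ratio in speedups(quoted, "Mercury Coder").items():
    print(f"{name}: {ratio:.1f}x the time of Mercury Coder")
# Claude: 4.7x the time of Mercury Coder
# ChatGPT: 6.0x the time of Mercury Coder
```

In this single demo the gap works out to roughly 4.7x and 6x, around the lower end of the 5-to-10x range Inception cites.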

Try Mercury Coder on our playground at https://t.co/XCeNw9BtsX pic.twitter.com/0Ewp4Z9NOD

— Inception Labs (@InceptionAILabs) February 26, 2025

Below are benchmark results on HumanEval, MBPP, and EvalPlus, which are code-generation benchmarks for large language models. While Mercury Coder produces far more tokens per second than Gemini 2.0 Flash-Lite, Claude 3.5 Haiku, and GPT-4o mini, its scores on the other metrics are comparable to theirs.

In addition, because diffusion models like Mercury Coder are not restricted to considering only their previous output, they are better at reasoning and at structuring responses, and because they continually refine their output, they can correct mistakes and hallucinations. Until now, diffusion models have powered AI solutions for video, image, and audio generation, such as Sora, Midjourney, and Stable Diffusion, but their application to discrete data such as text and code had made little progress.
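
The article does not say how Mercury Coder corrects its own mistakes. The sketch below assumes a re-masking strategy of the kind used by some masked-diffusion samplers: tokens committed with low confidence are masked again and re-predicted in a later pass, whereas an autoregressive model cannot revise a token once it has been emitted. The draft, the confidence values, and `toy_predict` are all hypothetical.

```python
from typing import Callable

MASK = "[MASK]"
# A predictor takes a sequence and returns (token, confidence) per position.
Predictor = Callable[[list[str]], list[tuple[str, float]]]


def refine(draft: list[str], confidences: list[float], predict: Predictor,
           threshold: float = 0.5, rounds: int = 3) -> list[str]:
    """Re-mask tokens whose confidence is below the threshold and
    re-predict them in parallel, for up to `rounds` passes."""
    seq = list(draft)
    conf = list(confidences)
    for _ in range(rounds):
        weak = [i for i, c in enumerate(conf) if c < threshold]
        if not weak:
            break  # every token is confident enough; stop refining
        for i in weak:
            seq[i] = MASK
        preds = predict(seq)  # one parallel pass over the whole sequence
        for i in weak:
            seq[i], conf[i] = preds[i]
    return seq


CORRECT = ["return", "a", "+", "b"]  # hypothetical intended output


def toy_predict(seq: list[str]) -> list[tuple[str, float]]:
    """Hypothetical denoiser: fills masked slots with the intended token at
    high confidence and leaves unmasked slots unchanged."""
    return [(CORRECT[i], 0.99) if t == MASK else (t, 1.0)
            for i, t in enumerate(seq)]


draft = ["return", "a", "-", "b"]  # the '-' is a low-confidence mistake
print(refine(draft, [0.95, 0.9, 0.3, 0.9], toy_predict))
# ['return', 'a', '+', 'b']
```

Here the threshold decides which tokens are open to revision; a real sampler would get confidences from the model's own probabilities rather than from a fixed list.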

'Mercury Coder is very interesting as the first large language model built on diffusion,' said Andrej Karpathy, a former OpenAI researcher. 'It differs from other large language models and may show new and unique strengths and weaknesses.'

This is interesting as a first large diffusion-based LLM.

Most of the LLMs you've been seeing are ~clones as far as the core modeling approach goes. They're all trained 'autoregressively', ie predicting tokens from left to right. Diffusion is different - it doesn't go left to… https://t.co/I0gnRKkh9k

— Andrej Karpathy (@karpathy) February 27, 2025

Looking ahead, Inception says, 'Mercury Coder is the first in a series of upcoming diffusion large language models (dLLMs). A model designed for chat applications is in closed beta.'
