Meta releases details of a GPU cluster equipped with 24,576 NVIDIA H100 GPUs and used to train models such as Llama 3

'Leading AI development means leading the way in hardware infrastructure investment,' Meta stated as it revealed details of its AI investments, including a datacenter-scale cluster equipped with more than 24,000 GPUs.

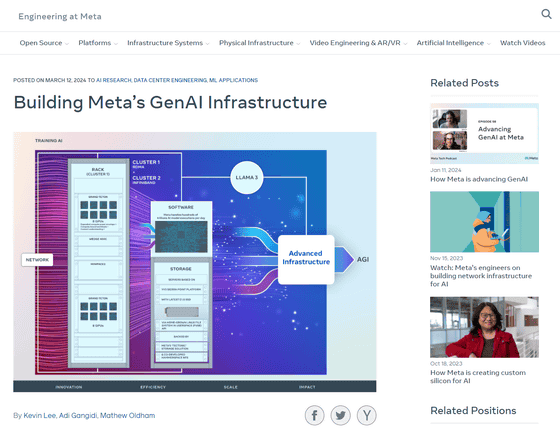

Building Meta's GenAI Infrastructure - Engineering at Meta

Meta reveals details of two new 24k GPU AI clusters - DCD

In 2022, it was reported that Meta would build the 'AI Research SuperCluster (RSC)' equipped with 16,000 GPUs.

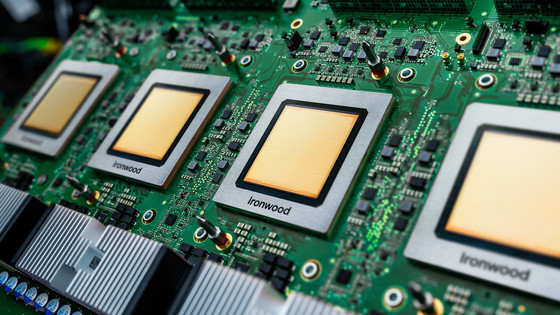

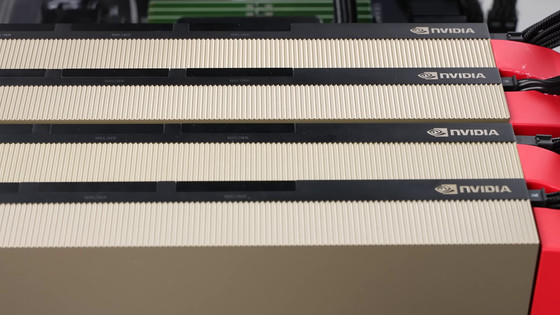

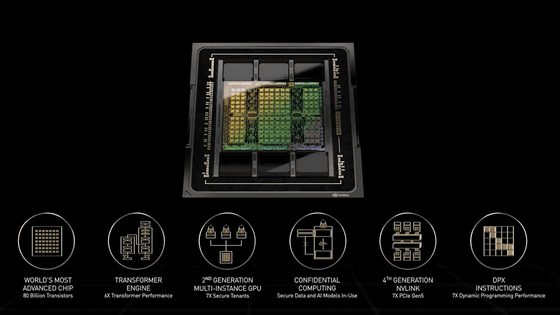

The new GPU cluster builds on lessons learned from RSC and is equipped with 24,576 NVIDIA H100 GPUs, which are widely regarded as among the best GPUs for machine learning. Meta is estimated to have purchased 150,000 NVIDIA H100 GPUs in 2023.

According to Meta, the new GPU cluster can support larger and more complex models than RSC, paving the way for advances in generative AI. It will support the current and next-generation AI models Meta is developing, including Llama 3, the successor to its publicly available large language model Llama 2, as well as broader AI research and development, including generative AI.

This GPU cluster is just one step in Meta's infrastructure roadmap. By the end of 2024, Meta aims to grow its infrastructure to include 350,000 NVIDIA H100 GPUs, as part of a portfolio whose total computing power is equivalent to nearly 600,000 H100s.
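Taking Meta's two end-of-2024 figures at face value, a simple subtraction shows roughly how much of the planned compute would have to come from hardware other than the 350,000 H100s. This is only a back-of-the-envelope reading: "nearly 600,000" is approximate, and Meta has not said in this announcement what the remaining hardware is.

```latex
\underbrace{\approx 600{,}000}_{\text{total, in H100-equivalents}}
\;-\;
\underbrace{350{,}000}_{\text{H100 GPUs}}
\;\approx\;
250{,}000 \ \text{H100-equivalents from other hardware}
```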
