Microsoft spends tens of billions of yen building an AI development supercomputer for OpenAI, the developer of ChatGPT

Since 2019, Microsoft has been investing heavily in OpenAI, the developer of the widely discussed chat AI ChatGPT.

How Microsoft's bet on Azure unlocked an AI revolution - Source

https://news.microsoft.com/source/features/ai/how-microsofts-bet-on-azure-unlocked-an-ai-revolution/

Microsoft Built an Expensive Supercomputer to Power OpenAI's ChatGPT - Bloomberg

https://www.bloomberg.com/news/articles/2023-03-13/microsoft-built-an-expensive-supercomputer-to-power-openai-s-chatgpt

Microsoft spent hundreds of millions of dollars on a ChatGPT supercomputer - The Verge

https://www.theverge.com/2023/3/13/23637675/microsoft-chatgpt-bing-millions-dollars-supercomputer-openai

According to Bloomberg, when Microsoft invested $1 billion in OpenAI in 2019, it also agreed to build a large-scale, state-of-the-art supercomputer for OpenAI. OpenAI and other AI development companies need access to powerful computing resources to develop large-scale AI models, which require training on a large amount of data.

'One of the things we learned from research is that the bigger the model, the more data it has, and the longer it is trained, the more accurate the model becomes,' said Nidhi Chappell, product lead for Azure high-performance computing and AI at Microsoft. 'So there's been a strong push to train bigger models for longer periods of time. That means not only do you have to have the biggest infrastructure, but you also have to make sure it runs reliably for a long time.'

Microsoft worked closely with OpenAI to build a supercomputer capable of training powerful AI models and to provide computing resources through cloud services. Microsoft addressed various challenges in building the supercomputer, including designing cable trays, increasing server capacity in data centers, optimizing communication between GPU units, devising power supply layouts to prevent data center outages, and cooling the machines.

Microsoft Executive Vice President Scott Guthrie declined to say how much the company has spent on the project, but said in an interview with Bloomberg that it's 'probably more than a few hundred million dollars.'

Then in 2020, Microsoft developed a supercomputer linking 10,000 NVIDIA A100 GPUs, which are designed for AI and data center workloads, and offered it through its cloud computing service, Microsoft Azure.

Microsoft develops supercomputer with 285,000 CPU cores and 10,000 GPUs in collaboration with OpenAI - GIGAZINE

'We've built a system architecture that can operate at a very large scale and is very reliable, which ultimately made ChatGPT possible,' Chappell said. Chappell believes that more models will emerge from the supercomputers Microsoft builds in the future.

Microsoft also sells its supercomputing resources to customers other than OpenAI. Guthrie told Bloomberg, 'The supercomputer started out as a custom-built thing, but we built it to be generalizable so that people who want to train large language models could use it. That's what has allowed us to become a good cloud for AI more broadly.'

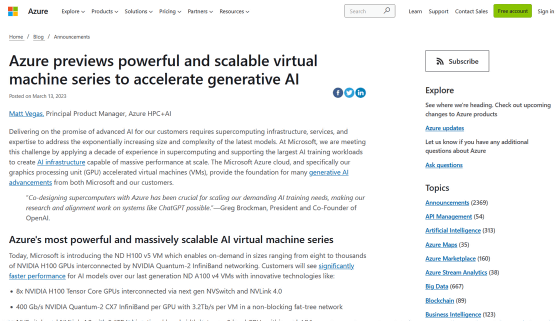

Since then, Microsoft has continued to evolve its AI infrastructure. In a blog post on March 13, 2023, it announced the ND H100 v5 VM, a virtual machine series for AI that scales from eight to several thousand NVIDIA H100 GPUs interconnected by high-speed NVIDIA Quantum-2 InfiniBand networking.

Azure previews powerful and scalable virtual machine series to accelerate generative AI | Azure Blog and Updates | Microsoft Azure

https://azure.microsoft.com/en-us/blog/azure-previews-powerful-and-scalable-virtual-machine-to-help-customers-accelerate-ai/

Microsoft continues to work on issues such as custom server and chip design, supply chain optimization, and cost reduction. 'The AI models that are currently astounding the world were developed on supercomputers whose construction began two or three years ago. New models will be developed on the new supercomputers we are currently working on,' Guthrie said.
