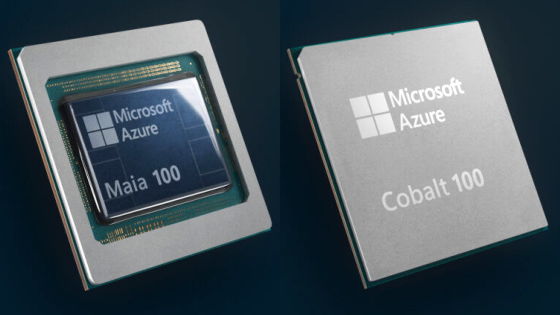

OpenAI reportedly begins using Google's TPUs for inference in ChatGPT and other products, marking its first meaningful use of AI chips other than NVIDIA's

Reuters reported that OpenAI has begun using Google's in-house machine learning processor, the Tensor Processing Unit (TPU), through Google Cloud in an effort to reduce inference costs.

OpenAI turns to Google's AI chips to power its products, source says | Reuters

Google Convinces OpenAI to Use TPU Chips in Win Against Nvidia — The Information

OpenAI has begun renting Google's TPUs to power its own products, such as ChatGPT, sources familiar with the matter told Reuters.

Reuters reported, 'The deal is part of Google's move to expand external access to its TPUs, which have been limited to internal use, and marks OpenAI's first meaningful use of chips other than NVIDIA's. It also signals OpenAI's move away from reliance on Microsoft's data centers.'

Regarding OpenAI's decision to start using TPUs, The Information reported that 'OpenAI said it hopes the TPUs it rents through Google Cloud will help reduce inference costs.'

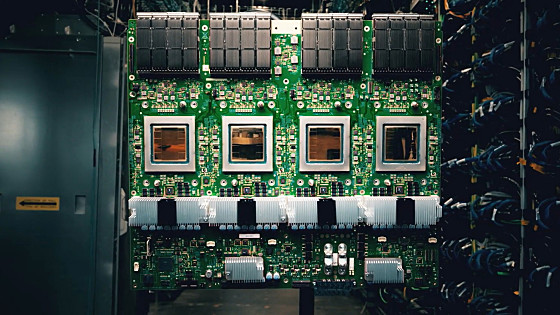

OpenAI has purchased a large number of NVIDIA GPUs and uses them for AI training and inference in data centers such as Microsoft's.

OpenAI has received significant funding from Microsoft, but there are fears it may eventually move away from Microsoft as the company aims to go public and builds its own large-scale computing infrastructure with the help of partners such as SoftBank and Oracle.

OpenAI in talks with Microsoft for future IPO - GIGAZINE


Posted by log1p_kr