Alibaba's visual language AI model 'Qwen3-VL' can identify inserted frames in a two-hour video with 99.5% accuracy.

Alibaba, the Chinese IT giant, has published a technical report on Qwen3-VL, the most powerful visual language model in its Qwen AI series. According to the benchmarks in the report, Qwen3-VL excels at visual mathematical tasks, and its OCR language coverage has grown to 39 languages, nearly four times that of Qwen2.5-VL, with OCR accuracy exceeding 70% for 32 of those languages.

[2511.21631] Qwen3-VL Technical Report

Qwen3-VL can scan two-hour videos and pinpoint nearly every detail

https://the-decoder.com/qwen3-vl-can-scan-two-hour-videos-and-pinpoint-nearly-every-detail/

Alibaba's Qwen3-VL Can Find a Single Frame in Two Hours of Video. The Catch? It Still Can't Outthink GPT-5.

Alibaba Releases Qwen3-VL Technical Report Detailing Two-Hour Video Analysis – Unite.AI

https://www.unite.ai/alibaba-releases-qwen3-vl-technical-report-detailing-two-hour-video-analysis/

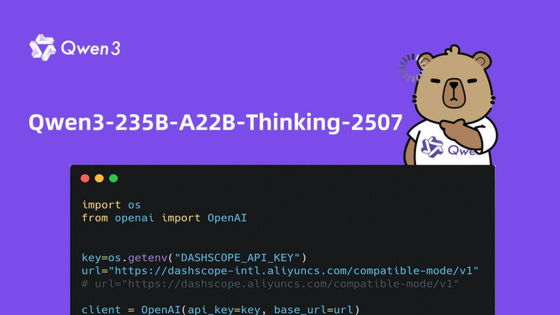

One test was a 'needle-in-a-haystack' test, which inserted a semantically significant frame at a random position in a video stream and checked whether the model could find it. The flagship model, Qwen3-VL-235B-A22B, with 235 billion parameters, detected the inserted frames with 100% accuracy in 30-minute videos, equivalent to approximately 256,000 tokens. Even for two-hour videos, equivalent to approximately 1 million tokens, accuracy remained extremely high at 99.5%.
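To make the test procedure concrete, here is a minimal sketch of how a needle-in-a-haystack accuracy score could be computed. It is not the evaluation code from the technical report: frames are stood in for by strings, and `insert_needle`, `needle_accuracy`, and `perfect_locator` are hypothetical names introduced only for illustration. A real run would replace `perfect_locator` with a call to the model being tested.

```python
import random
from typing import Callable, List, Tuple


def insert_needle(frames: List[str], needle: str,
                  rng: random.Random) -> Tuple[List[str], int]:
    """Return a copy of `frames` with `needle` inserted at a random index."""
    pos = rng.randrange(len(frames) + 1)
    return frames[:pos] + [needle] + frames[pos:], pos


def needle_accuracy(locate: Callable[[List[str], str], int],
                    n_frames: int, trials: int, seed: int = 0) -> float:
    """Fraction of trials in which `locate` returns the true needle index."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Placeholder "video": distinct background frames (an assumption).
        haystack = [f"background-{i}" for i in range(n_frames)]
        video, pos = insert_needle(haystack, "NEEDLE", rng)
        if locate(video, "NEEDLE") == pos:
            hits += 1
    return hits / trials


def perfect_locator(video: List[str], needle: str) -> int:
    """Stand-in for a model: finds the needle by exact match."""
    return video.index(needle)


if __name__ == "__main__":
    print(needle_accuracy(perfect_locator, n_frames=1000, trials=50))
```

Accuracy figures such as the reported 100% and 99.5% correspond to the value this kind of function returns, measured over many insertion positions and video lengths.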

While earlier visual language models have struggled to analyze long videos consistently, 'Qwen3-VL represents a significant advance in understanding long-form video,' according to Unite.AI, an AI information and news site.

Qwen3-VL achieved a score of 85.5% on the MathVista benchmark, which measures visual mathematical reasoning ability, beating GPT-5's 81.3%. It also achieved a score of 74.6% on MathVision, beating Gemini 2.5 Pro (73.3%) and GPT-5 (65.8%).

Its document processing is also strong, scoring 96.5% on DocVQA, which measures document comprehension, and 875 on OCRBench. Its text recognition supports 39 languages, about four times as many as the previous-generation model, Qwen2.5-VL, and it achieved an accuracy of over 70% on OCR tasks for 32 of the supported languages.

However, it does not outperform existing AI models in every capability. On MMMU-Pro, a benchmark for multimodal LLMs, it scored 69.3%, far behind GPT-5's 78.4%.

It also scored lower than its rivals on a general video question-answering benchmark that tests comprehension of video content, leading Unite.AI to say that 'this suggests that it excels as an expert in visual mathematics and document analysis, rather than a general-purpose leader.'

Regarding the clear strengths and weaknesses, Implicator.AI, an AI news site, states, 'It's not a weakness, it's a design choice, directing training resources to specific functions rather than uniform performance.'

In other words, the results demonstrate that the open-source model Qwen3-VL can rival or even surpass proprietary models in specialized tasks such as visual mathematics.

The flagship Qwen3-VL-235B model requires approximately 471GB of storage and a correspondingly large amount of GPU memory, making it unsuitable for users with consumer-grade PCs. The Qwen3-VL-8B model, which can run on average users' hardware, has been more popular, with 2 million downloads.
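As a rough sanity check on the storage figure, the sketch below estimates weight size from parameter count. It assumes the weights are stored at 16 bits (2 bytes) per parameter and that '8B' means about 8 billion parameters; neither assumption is stated in the source, and `weight_size_gb` is a hypothetical helper written for this example.

```python
def weight_size_gb(params: float, bytes_per_param: float) -> float:
    """Approximate size of model weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


# Assumption: 2 bytes per parameter (16-bit weights).
flagship_gb = weight_size_gb(235e9, 2)  # 235 billion parameters
small_gb = weight_size_gb(8e9, 2)       # assumed ~8 billion parameters

print(f"Qwen3-VL-235B: ~{flagship_gb:.0f} GB")
print(f"Qwen3-VL-8B:   ~{small_gb:.0f} GB")
```

Under these assumptions the flagship comes to about 470 GB, in line with the roughly 471GB quoted above, while the 8B model comes to about 16 GB, which is why it is within reach of consumer hardware.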

in AI, Posted by logc_nt