Finally, image generation AI 'FLUX.2' has arrived, and it can be run locally on a home PC

German AI startup Black Forest Labs released the image generation AI model family 'FLUX.2' on November 25, 2025. The family builds on the success of its predecessor, FLUX.1, and is designed for practical creative workflows rather than just demos and experimentation.

FLUX.2: Frontier Visual Intelligence | Black Forest Labs

FLUX.2 Image Generation Models Now Released | NVIDIA Blog

https://blogs.nvidia.com/blog/rtx-ai-garage-flux.2-comfyui/?linkId=100000393945960

FLUX.2's standout features are its rendering quality and controllability. Its multi-reference function can take up to 10 reference images at once and reflect their characteristics while keeping characters and style consistent. It also supports high-resolution output of up to 4 megapixels and can accurately render complex text, such as that found in posters and infographics, without distortion.

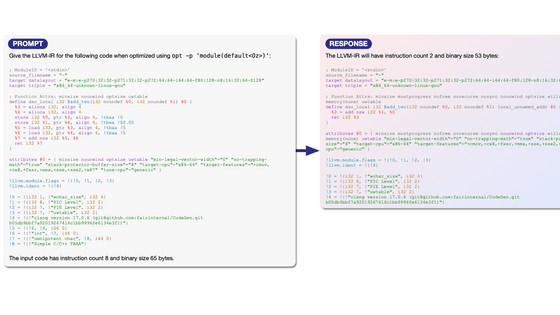
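To give a feel for what a 4-megapixel ceiling means in practice, the sketch below computes the largest width and height for a given aspect ratio that stay within a pixel budget. It is only an illustration: the function name `max_dimensions`, the reading of 1 megapixel as 1,000,000 pixels, and the rounding of each side down to a multiple of 16 are all assumptions made for this example, not documented FLUX.2 constraints.

```python
import math


def max_dimensions(aspect_w: int, aspect_h: int,
                   pixel_budget: int = 4_000_000,
                   multiple: int = 16) -> tuple[int, int]:
    """Return the largest (width, height) close to the requested aspect
    ratio whose pixel count does not exceed pixel_budget.

    pixel_budget and multiple are illustrative assumptions, not values
    taken from FLUX.2 documentation.
    """
    # Real-valued height and width that would use the budget exactly.
    height = math.sqrt(pixel_budget * aspect_h / aspect_w)
    width = height * aspect_w / aspect_h
    # Round each side down to the nearest multiple so the product
    # can never exceed the budget.
    w = int(width // multiple) * multiple
    h = int(height // multiple) * multiple
    return w, h


if __name__ == "__main__":
    for ratio in [(1, 1), (16, 9), (3, 4)]:
        w, h = max_dimensions(*ratio)
        print(f"{ratio[0]}:{ratio[1]} -> {w}x{h} ({w * h / 1e6:.2f} MP)")
```

Under these assumptions, a square image tops out at 2000×2000 pixels, while wider or taller ratios trade one side for the other.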

Under the hood, FLUX.2 combines Mistral-3, a vision-language model (VLM) with 24 billion parameters, with a Transformer architecture. This lets it generate images with a deeper understanding of real-world physical laws and spatial relationships, resulting in more realistic lighting and materials.

At the time of writing, four FLUX.2 models have been announced, one of which, FLUX.2 [klein], has yet to be released.

FLUX.2 [pro] offers the highest image quality, comparable to that of top closed-source models. It matches other models in prompt fidelity and visual fidelity while generating images faster and at lower cost. It is designed so that neither speed nor quality is compromised.

FLUX.2 [flex] lets developers finely control parameters such as the number of generation steps and the guidance scale, so users can freely adjust the balance between quality, prompt fidelity, and processing speed. It is particularly good at rendering text and depicting fine details. Below are images generated with the number of steps set to 6, 20, and 50, from left to right. The accuracy of the text rendering and the image details improve as the number of steps increases.

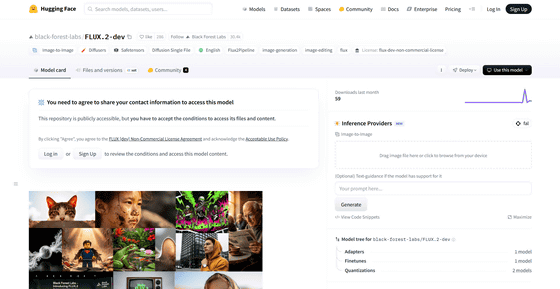

FLUX.2 [dev] is an open-weight model with 32 billion parameters derived from the FLUX.2 base model. It handles both text-to-image generation and editing with multiple input images in a single checkpoint, and is billed as the most powerful open-weight image generation and editing model currently available. The model weights for FLUX.2 [dev] are available on Hugging Face.

black-forest-labs/FLUX.2-dev · Hugging Face

FLUX.2 [klein] is an upcoming model: a smaller, distilled version of the FLUX.2 base model. It will be open-sourced under the Apache 2.0 license and is said to be more powerful and developer-friendly than a model of similar size trained from scratch.

While FLUX.2 offers exceptional performance, it also requires significant computational power. Loading the 32-billion-parameter model would normally require 90GB of VRAM. Black Forest Labs worked with NVIDIA to optimize the model using a technique called 'FP8 quantization,' which reduces memory usage by 40% while maintaining image quality, making it possible to run the model on consumer GPUs such as GeForce RTX.

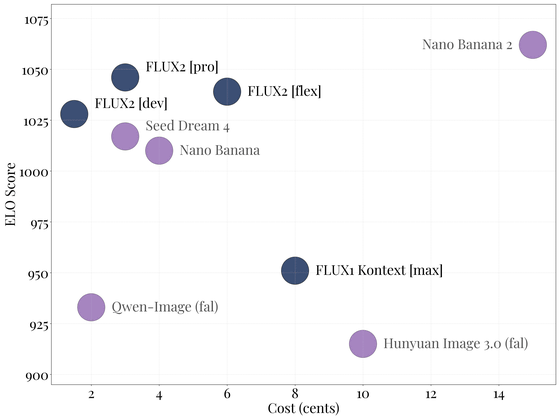
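The memory figures above can be sanity-checked with some back-of-the-envelope arithmetic. The sketch below is a rough illustration only: the helper names are made up for this example, gigabytes are taken as 10^9 bytes, and the weights-only calculation ignores the text encoder, activations, and any other runtime overhead, so it will not reproduce the reported end-to-end numbers exactly.

```python
GB = 1e9  # decimal gigabyte; an assumption made for this sketch


def weight_footprint_gb(n_params: float, bytes_per_param: float) -> float:
    """Memory needed to hold the weights alone, in GB."""
    return n_params * bytes_per_param / GB


def after_reduction(baseline_gb: float, reduction: float) -> float:
    """Memory left after cutting baseline_gb by the given fraction."""
    return baseline_gb * (1.0 - reduction)


if __name__ == "__main__":
    params = 32e9  # FLUX.2 [dev] parameter count cited in the article

    # Weights only: 2 bytes per parameter at 16-bit, 1 byte at FP8.
    print(f"16-bit weights: {weight_footprint_gb(params, 2):.0f} GB")
    print(f"FP8 weights:    {weight_footprint_gb(params, 1):.0f} GB")

    # The article's figures: 90GB baseline, 40% reduction.
    print(f"90GB reduced by 40%: {after_reduction(90, 0.40):.0f} GB")
```

Taken at face value, a 40% cut from 90GB still leaves roughly 54GB, which is more than the VRAM on a single consumer card, so additional measures such as offloading part of the model to system memory would presumably be needed in practice.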

Detailed benchmark results have also been released, covering the balance between cost and quality and win rates against competitors' models. In the graph plotting Elo score against cost, the top-of-the-line FLUX.2 [pro] records a very high score, indicating that it can generate high-quality images at a lower cost than rivals such as Nano Banana 2. The open-weight FLUX.2 [dev] also maintains a high quality score despite its very low cost, demonstrating excellent cost performance.

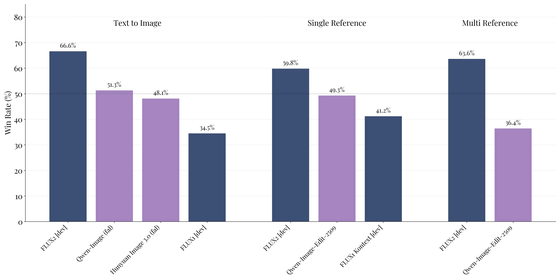

In win-rate comparisons with competing models, FLUX.2 [dev] comes out well ahead. In text-to-image generation, FLUX.2 [dev] achieved a win rate of 66.6%, significantly outperforming Qwen-Image's 51.3% and Hunyuan Image's 48.1%. In single-image editing, FLUX.2 [dev] scored 50.8% against Qwen-Image's 49.3%, a close race, but a clear improvement over the 41.2% of its predecessor, FLUX.1 Kontext. Even more noteworthy is the advanced task of referencing multiple images, where FLUX.2 [dev] achieved a win rate of 63.6%, far surpassing Qwen-Image's 36.4%. These results suggest that FLUX.2 outperforms competing models at following complex instructions and combining multiple elements.
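For readers who want to relate win rates like these to Elo-style scores, the sketch below applies the standard logistic Elo formula, in which a rating gap of D points corresponds to an expected win rate of 1 / (1 + 10^(-D/400)). This is only an illustration: the article does not say how the benchmark's Elo scores were computed, and the function names here are made up for the example.

```python
import math


def elo_gap_from_win_rate(win_rate: float) -> float:
    """Rating gap implied by a head-to-head win rate under the
    standard logistic Elo model (400-point scale)."""
    if not 0.0 < win_rate < 1.0:
        raise ValueError("win_rate must be strictly between 0 and 1")
    return 400.0 * math.log10(win_rate / (1.0 - win_rate))


def win_rate_from_elo_gap(gap: float) -> float:
    """Inverse of the above: expected win rate for a given rating gap."""
    return 1.0 / (1.0 + 10.0 ** (-gap / 400.0))


if __name__ == "__main__":
    # Multi-reference figure quoted in the article: 63.6% vs 36.4%.
    gap = elo_gap_from_win_rate(0.636)
    print(f"Implied rating gap: {gap:.1f} points")
    print(f"Round trip: {win_rate_from_elo_gap(gap):.3f}")
```

Under this model, the 63.6% multi-reference win rate would correspond to a gap of a little under 100 rating points, assuming that figure comes from a direct head-to-head comparison.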

'We are building the infrastructure that will power visual intelligence, a technology that will transform how we see and understand the world. FLUX.2 marks a step towards a multimodal model that integrates perception, generation, memory, and reasoning in an open and transparent way,' Black Forest Labs said.
