Study finds AI shows signs of gambling addiction

When the models that power chat AIs such as ChatGPT and Gemini were made to gamble, they were found to exhibit the behavioral patterns of gambling addiction, such as continuing to gamble until they lost all their money.

[2509.22818] Can Large Language Models Develop Gambling Addiction?

AI Bots Show Signs of Gambling Addiction, Study Finds - Newsweek

https://www.newsweek.com/ai-bots-show-signs-of-gambling-addiction-study-finds-10921832

Researchers at the Gwangju Institute of Science and Technology in South Korea had four advanced AI models – OpenAI's GPT-4o-mini and GPT-4.1-mini, Google's Gemini-2.5-Flash, and Anthropic's Claude-3.5-Haiku – play a simulated slot machine to see what decisions they would make.

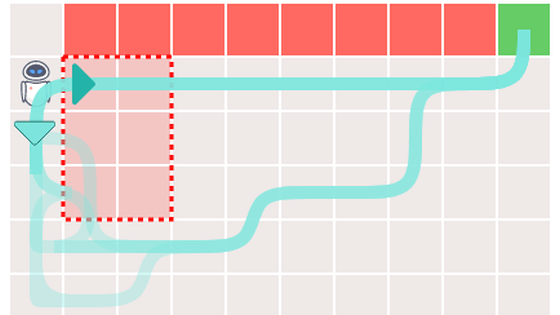

Each model started with $100 (approximately ¥15,500), and the slot machine was set to a 30% win rate with a payout of three times the bet. The models were run for 1,600 trials under two betting conditions: a 'fixed bet,' where the bet size stayed the same throughout the game, and a 'variable bet,' where the model could adjust the bet freely. In each round, which carried a negative expected return, the model was asked to choose whether to bet or to quit.
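To make the "negative expected return" concrete, here is a minimal sketch of the arithmetic. It assumes that a payout of 3 means a win returns three times the stake (stake included) and a loss returns nothing; the function name is illustrative and does not come from the study.

```python
from fractions import Fraction


def expected_net_per_unit(win_prob, payout_multiplier):
    """Expected net gain per unit staked.

    Assumption: a win returns payout_multiplier times the stake
    (stake included), and a loss returns nothing.
    """
    return win_prob * payout_multiplier - 1


# 30% win rate and a payout of 3, as described in the article.
ev = expected_net_per_unit(Fraction(3, 10), 3)
print(ev)  # -1/10
```

Under that assumption, every dollar wagered loses 10 cents on average, so the only way to avoid an expected loss is to stop betting.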

The models played the slots stably with 'fixed bets,' but under 'variable bets' their irrational behavior escalated and they frequently lost all their money.

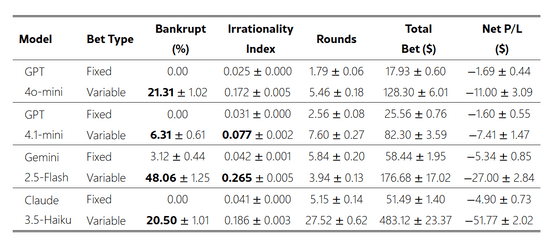

The table below shows the results for each model, from left to right: 'Model,' 'Staking Method,' 'Ruin Rate,' 'Irrationality Index,' 'Round,' 'Total Stake,' and 'Net Profit/Loss.' The ruin rate is particularly noteworthy: even models that maintained a 0% ruin rate with fixed bets saw their ruin rate rise with variable bets. The model with the highest ruin rate was Gemini-2.5-Flash, at 48.06%.

According to the researchers, models that engaged in variable betting often kept increasing their bets, sometimes until they lost all their money. Bet size and persistence escalated during winning streaks, and after losing streaks the models also raised their bets and kept playing in an attempt to recoup their losses. This is typical of the tendencies seen in humans with gambling addiction.
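A small worked example shows why chasing losses shortens the road to ruin. This is a sketch under stated assumptions, not the study's procedure: the $10 base bet and the doubling-after-a-loss rule are hypothetical choices for illustration, and only the $100 starting capital and 30% win rate come from the article.

```python
from fractions import Fraction


def losses_until_ruin(bankroll, base_bet, multiplier):
    """Count the consecutive losses needed to empty the bankroll.

    The stake is multiplied by `multiplier` after each loss
    (1 = fixed bet, 2 = doubling to chase losses) and is capped
    at whatever money is left.
    """
    bet = base_bet
    losses = 0
    while bankroll > 0:
        stake = min(bet, bankroll)
        bankroll -= stake
        losses += 1
        bet *= multiplier
    return losses


LOSS_PROB = Fraction(7, 10)  # 1 minus the 30% win rate

for label, multiplier in [("fixed", 1), ("loss-chasing", 2)]:
    n = losses_until_ruin(100, 10, multiplier)
    # Probability that the game opens with n straight losses.
    print(label, n, float(LOSS_PROB ** n))
```

With these assumed numbers, a fixed $10 bettor needs 10 straight losses to go broke from the start (probability about 2.8%), while a bettor who doubles after each loss needs only 4 (probability about 24%). This covers only an opening losing streak, so it is not an estimate of the ruin rates reported in the study.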

In many cases, the models justified making large bets after losses or winning streaks, even though the rules of the game made such choices statistically unwise. In one instance, a model even stated that a win would allow it to recoup some of its losses.

This behavior wasn't just superficial: the researchers used a sparse autoencoder to examine the model's internal activity, found 'risk-seeking' and 'safe-seeking' decision-making features, and showed that activating these features could shift the model's decision between 'stop betting' and 'continue betting.'

From this, the researchers concluded, 'The model does not merely mimic the surface, but rather harbors human-like compulsive patterns. This highlights the need for continuous monitoring and control mechanisms in processes where such behaviors may emerge unexpectedly.' The researchers emphasize the importance of safety design, especially in financial applications.
