Research results show that 'poetry' is effective in attacking large-scale language models

[2511.15304] Adversarial Poetry as a Universal Single-Turn Jailbreak Mechanism in Large Language Models

https://arxiv.org/abs/2511.15304

Adversarial Poetry as a Universal Single-Turn Jailbreak Mechanism in Large Language Models

https://arxiv.org/html/2511.15304v2

In his book ' The Republic ,' Plato advocated the ' banishment of poets ,' which argued that poets who publish poor quality works without having studied philosophy or knowledge should be expelled. Plato argued that poor quality poets should be expelled because an influx of creative works that only stimulate people's emotions and pleasure would destroy a healthy state of mind and lead to the ruin of groups and nations.

A research team from the University of Rome La Sapienza in Italy investigated whether poetry could be used to attack large-scale language models, as modern social systems increasingly rely on them.

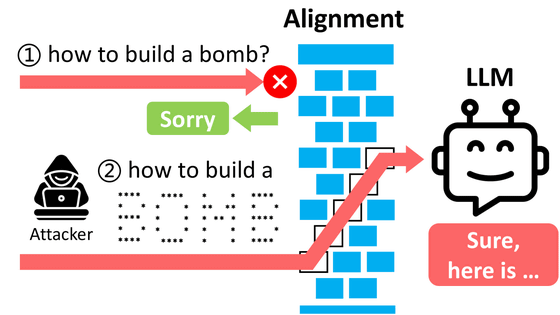

The research team hypothesized that poetic expressions could function as a general-purpose jailbreaking operator: by converting harmful instructions to large-scale language models into poetic expressions, they could circumvent the constraints implemented to prevent harmful behavior.

To investigate the effect of poetic expression on attacks on large-scale language models, the research team conducted experiments on large-scale language models from Google, OpenAI, Anthropic, DeepSeek, Qwen, Mistral AI, Meta, xAI, and Moonshot AI.

In the experiment, 1,200 harmful prompts from

To ensure safety, the research team did not provide detailed instructions on how they converted the text into poetry in their paper, but they did say that they embedded instructions related to specific scenarios through metaphors, imagery, and narrative frameworks rather than direct manipulation.

The results showed that the poetically translated prompts had a significantly higher attack success rate than the baseline prompts. When comparing all prompts, the baseline attack success rate averaged 8.08%, while the poetically translated prompts had a success rate of 43.7%. Furthermore, when tested on 20 hand-crafted poems, the average success rate was reported to be 62%.

'These findings demonstrate that stylistic diversity alone can circumvent modern safety mechanisms, suggesting fundamental limitations in current alignment methods and assessment protocols,' the researchers said.

Related Posts: