Meta launches SAM 3, an AI model that detects, segments, and tracks objects in images and videos

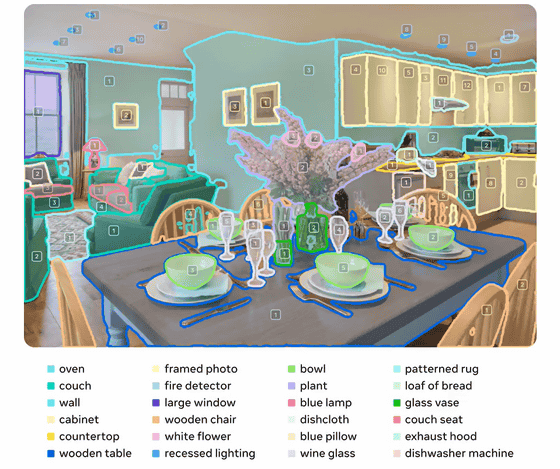

Meta has announced Meta Segment Anything Model 3 (SAM 3), an integrated model for detecting, segmenting, and tracking objects in images and videos using text and visual examples as prompts.

Introducing Meta Segment Anything Model 3 and Segment Anything Playground

Meet SAM 3, a unified model that enables detection, segmentation, and tracking of objects across images and videos. SAM 3 introduces some of our most highly requested features like text and exemplar prompts to segment all objects of a target category.

Learnings from SAM 3 will… pic.twitter.com/qg43OtDyeQ

AI at Meta (@AIatMeta), November 19, 2025

SAM 3: Segment Anything with Concepts | Research - AI at Meta

https://ai.meta.com/research/publications/sam-3-segment-anything-with-concepts/

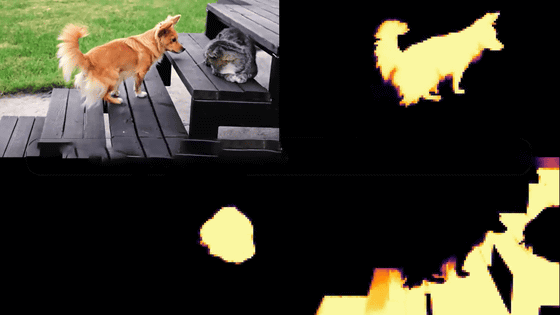

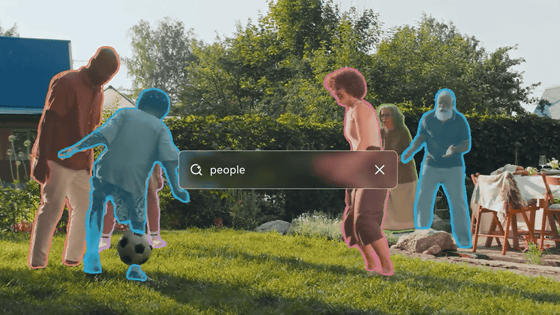

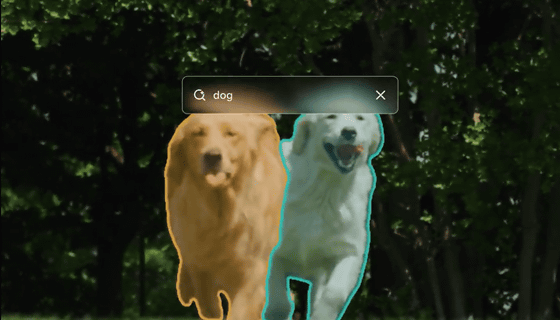

SAM 3 is a unified model that detects, segments, and tracks objects in images and videos based on prompts. A prompt can be a short text phrase such as 'dog' or 'yellow school bus,' or an example image of the target, such as a picture of a yellow school bus. The model then extracts the specified objects from the image or video.

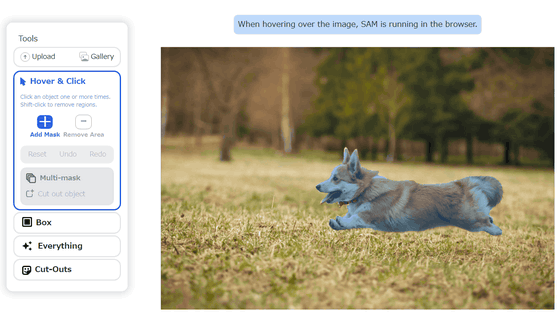

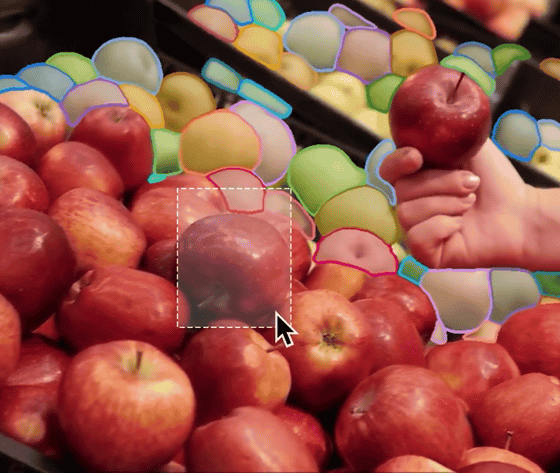

You can also detect and track objects by specifying an area or clicking on them in the video.

SAM 1 and SAM 2 supported only visual prompts, such as clicking on an object on the screen. SAM 3 adds the ability to describe the object you want to segment in detail using text.

Existing models also tend to rely on a fixed set of text labels: they can segment simple concepts such as 'bus' or 'car,' but struggle with more specific concepts such as 'yellow school bus.' SAM 3 overcomes this limitation and supports a much wider range of text prompts.

Meta has also released a new benchmark dataset, Segment Anything with Concepts (SA-Co), to evaluate SAM 3's ability to understand detailed text prompts. SA-Co measures segmentation performance on fine-grained descriptions such as 'red baseball cap' and 'blue window.' SAM 3 showed strong zero-shot performance on SA-Co, demonstrating that it can extract objects specified by fine-grained concepts more accurately than conventional models, which found this task difficult.

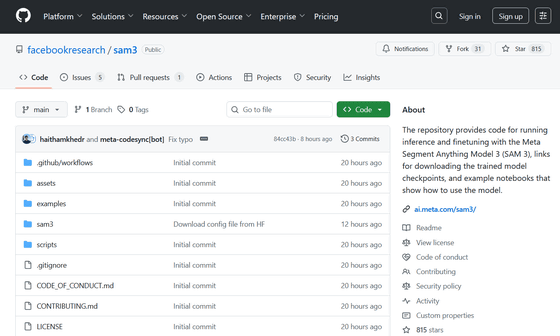

Expected applications for SAM 3 include media and creative uses such as video editing, AR/VR content, and wearable devices; research and industrial applications; and data annotation, where it can serve as a labeling tool for interpreting data and supporting model training. SAM 3 is available as open source on GitHub.

GitHub - facebookresearch/sam3: The repository provides code for running inference and finetuning with the Meta Segment Anything Model 3 (SAM 3), links for downloading the trained model checkpoints, and example notebooks that show how to use the model.

https://github.com/facebookresearch/sam3

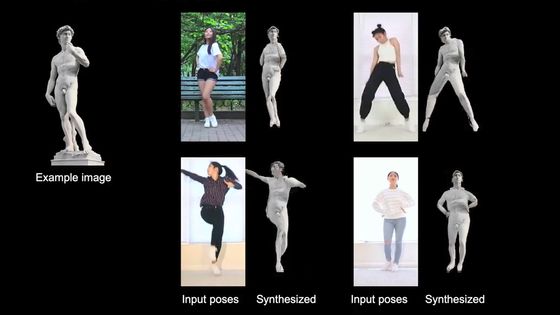

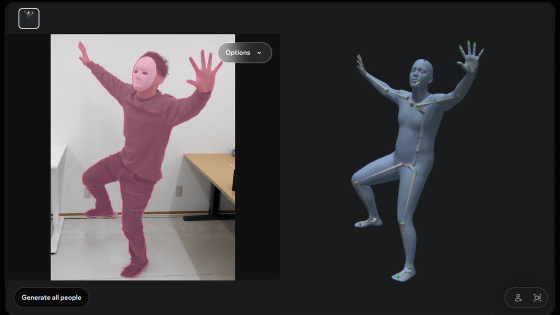

SAM 3D, a model that reconstructs objects and people in 3D from a single image, has also been released.

Meta releases AI 'SAM 3D Body' and 'SAM 3D Objects' that can cut out only the desired person or object from a single photo and create a 3D model - GIGAZINE

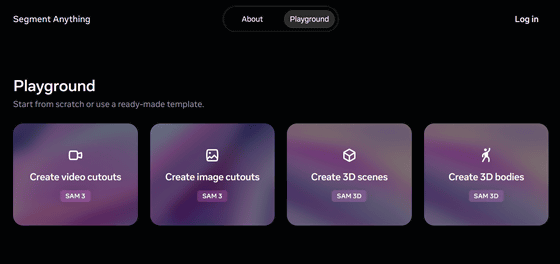

SAM 3 and SAM 3D can be tried out on the Segment Anything Playground, a new platform that gives anyone, even those without technical expertise, access to these cutting-edge models. The Segment Anything Playground is a research demo and is free for personal, non-commercial use only.

Meta AI Demos

https://aidemos.meta.com/segment-anything/gallery

in AI, Posted by log1e_dh