Google launches 'Google Antigravity,' an agent-first coding tool powered by Gemini 3 Pro and third-party models, as a free public preview for Windows, macOS, and Linux

Google has announced Google Antigravity, an AI agent development platform that offers an AI-powered integrated development environment (IDE) experience built on Google's leading AI models.

Google Antigravity Blog: introducing-google-antigravity

https://antigravity.google/blog/introducing-google-antigravity

Gemini 3 for developers: New reasoning, agentic capabilities

https://blog.google/technology/developers/gemini-3-developers/

Google Antigravity

https://simonwillison.net/2025/Nov/18/google-antigravity/

With the emergence of AI models like Gemini 3, AI agents are becoming intelligent enough to operate uninterrupted for extended periods of time across multiple surfaces. While they cannot yet run unattended for days, we are approaching a world where we interact with AI agents at a higher level of abstraction than individual prompts or tool invocations. In this world, the product surfaces that enable communication between AI agents and users must look and feel different. Google's answer is Google Antigravity.

Google Antigravity is Google's first product to combine four key principles of collaborative development: trust, autonomy, feedback, and self-improvement.

◆Trust

Existing AI agent tools either show the user every action and tool call made by the AI agent, or they only show the final code changes without providing any context on how the AI agent got there or how to validate its behavior.

In either case, users have little basis for trusting the actions taken by the AI agent. Google Antigravity is therefore designed to help users build trust by presenting the AI agent's behavior at a more natural task-level abstraction, together with the necessary and sufficient artifacts and verification results. Google says it has thought carefully not only about the AI agent's behavior itself but also about how that behavior is verified.

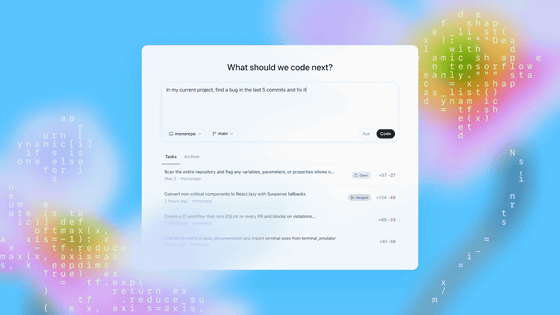

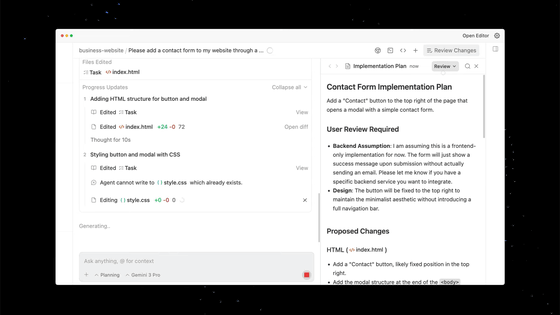

During a conversation with a Google Antigravity AI agent, users can see tool calls grouped within tasks and monitor task overviews and progress. As the AI agent works, it produces tangible deliverables called artifacts, such as task lists, implementation plans, walkthroughs, screenshots, and browser recordings. These formats are easier for users to verify than raw tool calls. Google Antigravity's AI agent uses artifacts to communicate to users that it understands what it is doing and has thoroughly verified its work.

Google Antigravity displays the AI agent's task list, lets you review the implementation plan after investigation and before implementation, and lets you scan the walkthrough upon completion.

Task lists, Artifacts, and Verification - YouTube
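
To make the task-level grouping described above more concrete, here is a minimal Python sketch of how tool calls and artifacts might hang off a single task. This is not Google Antigravity's actual data model, which has not been published. The class and field names (`Task`, `ToolCall`, `Artifact`, `summary`) and the sample values are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ToolCall:
    tool: str    # hypothetical label, e.g. "editor", "terminal", "browser"
    detail: str  # what the call did


@dataclass
class Artifact:
    kind: str    # hypothetical label, e.g. "implementation_plan", "screenshot"
    title: str


@dataclass
class Task:
    name: str
    tool_calls: list[ToolCall] = field(default_factory=list)
    artifacts: list[Artifact] = field(default_factory=list)
    done: bool = False

    def summary(self) -> str:
        """Report one line per task instead of one line per tool call."""
        status = "done" if self.done else "in progress"
        return (f"{self.name} [{status}]: {len(self.tool_calls)} tool calls, "
                f"{len(self.artifacts)} artifacts")


# Illustrative usage with made-up values.
task = Task("Add dark-mode toggle")
task.tool_calls.append(ToolCall("editor", "edit settings page"))
task.tool_calls.append(ToolCall("terminal", "start dev server"))
task.artifacts.append(Artifact("implementation_plan", "Dark-mode plan"))
print(task.summary())
```

The point of the sketch is only that a reviewer reads the task summary and its artifacts first, and drills into individual tool calls when something needs checking.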

◆Autonomy

The most intuitive product form factors are those that work in sync with an AI agent embedded in a surface (editor, browser, terminal, etc.), so Google Antigravity's main 'editor view' is a cutting-edge AI-powered IDE experience, with tab completion, inline commands, and a full-featured AI agent in the side panel.

In the demo below, Google Antigravity's AI agent autonomously writes the code for a new front-end feature, then uses the terminal to launch a local server and opens a browser to test that the new feature works.

Agent Across Editor, Terminal, and Browser - YouTube

In addition to the IDE-like editor surface, Google Antigravity also includes an agent-first manager surface. According to Google, this shifts the paradigm from agents being embedded within surfaces to surfaces being embedded within agents, allowing multiple agents to run in parallel across multiple workspaces.

You can spawn an AI agent to conduct background research in a separate workspace and stay notified of its progress through the Agent Manager inbox and side panel, which lets you focus on more complex tasks in the foreground.

Agent Manager - YouTube

By optimizing for instant handoff between manager and editor, rather than cramming both an asynchronous manager experience and a synchronous editor experience into a single window, Google Antigravity intuitively moves development into the asynchronous era as AI models like Gemini get rapidly smarter.

◆Feedback

A fundamental drawback of the remote-only form factor is that it is hard to iterate with an AI agent. While AI agents have certainly become much more intelligent, they are still not perfect. It's useful if an AI agent can complete 80% of the work, but if the user can't easily give it feedback, the burden of resolving the remaining 20% becomes too great.

User feedback eliminates the need to treat AI agents as black-and-white systems: perfect or useless. Google Antigravity starts with local interaction and enables intuitive asynchronous user feedback on any surface and artifact, whether that's Google Docs-style comments on a text artifact or feedback through selecting and commenting on a screenshot. This feedback is automatically incorporated into the agent's execution without stopping the agent process.

The videos below are examples of feedback on textual artifacts, such as implementation plans, and feedback on visual artifacts, such as screenshots taken by agents.

Artifact-level feedback - YouTube
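
The idea of incorporating feedback without stopping the agent can be sketched as a loop that drains a comment queue between steps. The Python below is a simplified, single-threaded illustration under that assumption, not Antigravity's implementation. `Comment`, `run_agent`, and the sample steps are hypothetical names, and in a real system the queue would be filled from the UI while the agent runs.

```python
import queue
from dataclasses import dataclass


@dataclass
class Comment:
    artifact: str  # which artifact the comment is attached to
    text: str      # the user's feedback


def run_agent(steps: list[str], feedback: "queue.Queue[Comment]") -> list[str]:
    """Run each step, folding in any pending comments before the next one."""
    log = []
    for step in steps:
        # Drain comments that arrived since the last step; never block on them.
        while True:
            try:
                comment = feedback.get_nowait()
            except queue.Empty:
                break
            log.append(f"incorporated feedback on {comment.artifact}: {comment.text}")
        log.append(f"ran: {step}")
    return log


# Illustrative usage with made-up values.
inbox: "queue.Queue[Comment]" = queue.Queue()
inbox.put(Comment("implementation_plan", "use CSS variables"))
for line in run_agent(["write code", "run tests"], inbox):
    print(line)
```

Because the loop only checks the queue with a non-blocking `get_nowait()`, the agent keeps working when there is no feedback and picks comments up at the next step boundary when there is.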

◆Self-improvement

Google Antigravity treats learning as a core primitive, and the actions of an AI agent both retrieve from and contribute to a knowledge base. This knowledge management allows the AI agent to learn from its past work.

AI agents learn from their work and feedback, generating and leveraging knowledge articles that can be viewed from the Agent Manager.

Global Knowledge Base - YouTube

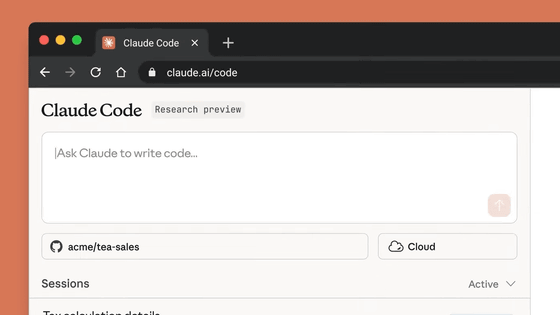

Google believes that Google Antigravity is the next fundamental step in the development of AI agent assistance, and says it aims to deliver the best possible product for end users. To that end, Google Antigravity is available in public preview for free and is compatible with macOS, Linux, and Windows.

Google Antigravity also has access to Google's Gemini 3, as well as Anthropic's Claude Sonnet 4.5 and OpenAI's GPT-OSS, giving developers more options for AI models.

Simon Willison, a developer who tried Google Antigravity, reported that it worked fine at first, but that he then got an error message saying 'Agent execution terminated due to model provider overload. Please try again later.'

Willison pointed out that the following video posted by Google on YouTube is 'the best introduction to Google Antigravity to date.' In this video, Kevin Hou, who previously worked at the AI coding tool Windsurf and is currently a product engineer at Google Antigravity, explains the process of building an app using Google Antigravity.

Learn the basics of Google Antigravity - YouTube
