Research shows that people who use AI tend to overestimate their own performance, and those with higher AI literacy tend to be more overconfident

Nowadays, anyone can easily use AI chatbots and AI-powered search tools to get answers to all kinds of questions, but it's important to remember that AI answers can be wrong. Even so, some people accept AI answers uncritically and end up saying nonsensical things to experts on social media. New research has shown that people who use AI tend to overestimate their own performance, and that this tendency is particularly pronounced among people with high AI literacy.

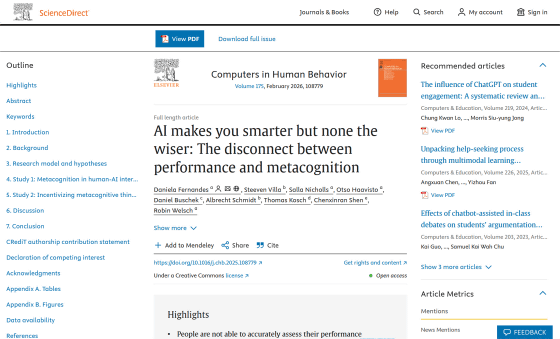

AI makes you smarter but none the wiser: The disconnect between performance and metacognition - ScienceDirect

AI use makes us overestimate our cognitive performance | EurekAlert!

https://www.eurekalert.org/news-releases/1103616

The more that people use AI, the more likely they are to overestimate their own abilities | Live Science

https://www.livescience.com/technology/artificial-intelligence/the-more-that-people-use-ai-the-more-likely-they-are-to-overestimate-their-own-abilities

While it is important to assess one's own abilities accurately, humans generally tend to rate themselves as 'above average.' The Dunning-Kruger effect is also well known: people with high ability in a particular field tend to underestimate themselves, while people with low ability tend to overestimate themselves.

As the use of AI tools becomes more commonplace, properly evaluating AI performance is crucial for optimizing human-AI interactions. To address this issue, a research team at Aalto University in Finland conducted an experiment in which approximately 500 subjects completed logical reasoning tasks from the US Law School Admission Test (LSAT).

Half of the participants used OpenAI's chat AI, ChatGPT, while the other half solved the problems without AI. Afterward, both groups answered questions about their AI literacy and their own performance. To encourage accurate self-assessment, participants were promised an additional reward if their evaluation of their own performance was accurate.

'These tasks require a lot of cognitive effort,' said Robin Welsch, assistant professor at Aalto University and co-author of the paper. 'Now that people use AI on a daily basis, it's common to ask AI to solve these kinds of problems precisely because they're so difficult.'

Analysis of the experimental data revealed that AI users rarely asked ChatGPT more than one question per problem and were satisfied with a single question or instruction. In many cases, they simply copied and pasted the question into ChatGPT's input field and accepted the answer without further scrutiny or follow-up.

'It turns out that people simply trusted the AI to solve their problems,' Welsch said. 'Typically, it took just one interaction to get a result, which meant users blindly trusted the system. This phenomenon is called cognitive offloading.'

The results also revealed that ChatGPT users were significantly less able to accurately assess their own performance and tended to overestimate their abilities. Notably, this tendency to overestimate their abilities was more pronounced among users with high AI literacy.

'We found that when it comes to AI, the Dunning-Kruger effect disappears. In fact, surprisingly, higher AI literacy was associated with greater overestimation. We would expect people with higher AI literacy not only to be better at interacting with AI systems, but also to be better at judging their own performance with those systems, but this was not the case,' Welsch said.

Offloading more and more cognitive work to AI impairs critical thinking, which involves examining results and answers from multiple angles. As a result, workers become less able to evaluate their own performance properly, reliable information becomes harder to obtain, and workers' skills risk eroding.

'Current AI tools are insufficient. They don't foster metacognition (awareness of one's own thought processes), and they don't allow people to learn from their mistakes,' said Daniela Fernandes, a graduate student at Aalto University and lead author of the paper. 'We need to build platforms that encourage this process of self-reflection.'


in Free Member, AI, Science, Posted by log1h_ik