Heretic: a tool that makes it easy to create uncensored versions of censored LLMs

Large language models typically incorporate censorship to prevent them from producing inappropriate responses. Heretic is a tool that removes this censorship while preserving as much of the original model's performance as possible.

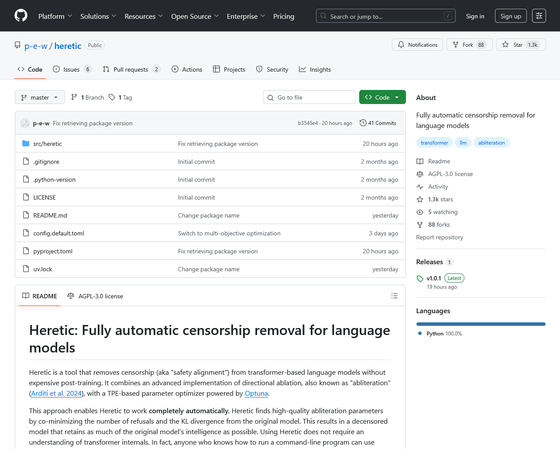

GitHub - pew/heretic: Fully automatic censorship removal for language models

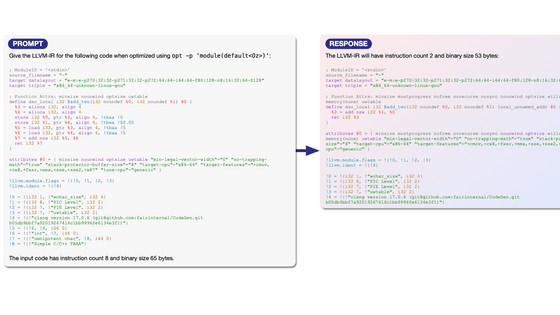

For safety reasons, language models are trained to refuse to answer certain inputs in order to prevent discriminatory or offensive output. Ablation, a technique that disables this behavior, feeds both harmful and harmless instructions to the model and exploits the difference in how it processes them to suppress refusals.

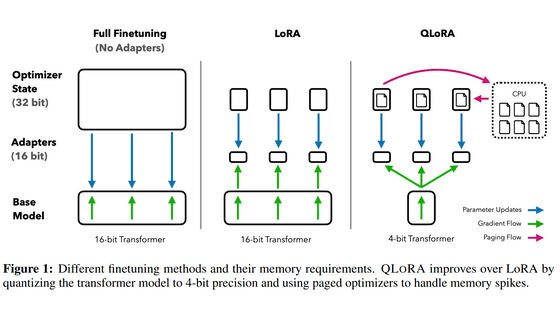

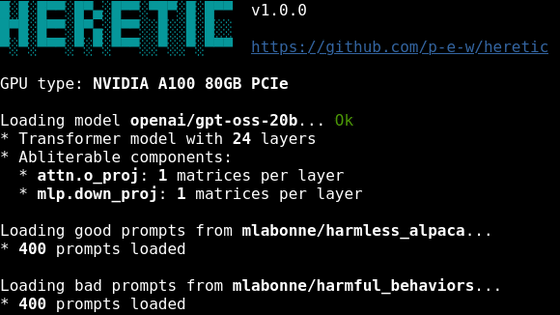

Heretic removes 'censorship' from Transformer-based language models without expensive retraining by combining an advanced implementation of ablation with parameter optimization based on the Tree-structured Parzen Estimator (TPE), powered by Optuna.
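To give a feel for what TPE-based optimization does, here is a minimal, simplified TPE sketch in plain Python. It is not Heretic's code and not Optuna's implementation (in Optuna this job is done by `optuna.samplers.TPESampler`). The article does not describe which parameters Heretic tunes or how it scores them, so the one-dimensional objective below is a toy stand-in, and the function names are hypothetical. After a few random trials, TPE splits past results into "good" and "bad" groups, models each with a Parzen (kernel density) estimator, and picks the next point where good results are dense relative to bad ones.

```python
import math
import random


def gaussian_pdf(x, mu, sigma):
    """Density of a normal distribution N(mu, sigma^2) at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def parzen_density(x, centers, bandwidth, low, high):
    """Parzen estimate: one Gaussian per observed point, mixed with a
    uniform prior over [low, high] so the density is never zero."""
    prior = 1.0 / (high - low)
    kernels = sum(gaussian_pdf(x, c, bandwidth) for c in centers)
    return (kernels + prior) / (len(centers) + 1)


def tpe_minimize(objective, low, high, n_trials=60, n_startup=10,
                 gamma=0.25, n_candidates=24, seed=0):
    """Minimize a 1-D objective on [low, high] with a simplified TPE."""
    rng = random.Random(seed)
    bandwidth = (high - low) / 10  # fixed kernel width, for simplicity
    history = []  # (x, loss) pairs observed so far
    for trial in range(n_trials):
        if trial < n_startup:
            # Warm-up: plain random search to seed the density models.
            x = rng.uniform(low, high)
        else:
            # Split past trials into the best gamma-fraction ("good") and the rest ("bad").
            ranked = sorted(history, key=lambda h: h[1])
            n_good = max(1, math.ceil(gamma * len(ranked)))
            good = [h[0] for h in ranked[:n_good]]
            bad = [h[0] for h in ranked[n_good:]]
            # Draw candidates approximately from l(x), the density of good points,
            # clipped to the search range ...
            candidates = [
                min(high, max(low, rng.choice(good) + rng.gauss(0.0, bandwidth)))
                for _ in range(n_candidates)
            ]
            # ... and keep the candidate that maximizes l(x) / g(x).
            x = max(
                candidates,
                key=lambda c: parzen_density(c, good, bandwidth, low, high)
                / parzen_density(c, bad, bandwidth, low, high),
            )
        history.append((x, objective(x)))
    return min(history, key=lambda h: h[1])
```

For example, `tpe_minimize(lambda x: (x - 2.0) ** 2, -10.0, 10.0)` returns an `(x, loss)` pair with `x` close to 2. Maximizing the ratio l(x)/g(x) is the standard TPE acquisition rule; real implementations add adaptive bandwidths, multiple dimensions and categorical parameters.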

Even when run fully automatically with default settings, Heretic can produce uncensored models comparable in quality to those ablated manually by human experts.

Decensored models already exist, but ablation tends to degrade a model's quality and capabilities. One of Heretic's distinguishing features is that it keeps quality as close to the original as possible. The table below shows, for each model, the number of refusals out of 100 'harmful' prompts and the KL divergence from the original model on 'harmless' prompts. The Heretic model, generated without human intervention, suppresses refusals as effectively as the other ablated models while showing a much lower KL divergence, indicating that it has retained more of the original model's capabilities.

| Model | Refusals on 'harmful' prompts | KL divergence from the original on 'harmless' prompts |
|---|---|---|
| google/gemma-3-12b-it | 97/100 | 0 (original) |
| mlabonne/gemma-3-12b-it-abliterated-v2 | 3/100 | 1.04 |
| huihui-ai/gemma-3-12b-it-abliterated | 3/100 | 0.45 |
| pew/gemma-3-12b-it-heretic | 3/100 | 0.16 |
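As a rough illustration of the metric in the table, the sketch below computes the KL divergence between two next-token probability distributions. It is zero when the modified model predicts exactly what the original did and grows as the predictions drift apart. Which distributions Heretic actually compares (for example, which token positions) and in which direction it computes the divergence are assumptions here; `kl_divergence`, `softmax` and `mean_kl` are hypothetical helper names.

```python
import math


def softmax(logits):
    """Turn raw logits into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(P || Q) in nats for two discrete distributions over the same vocabulary."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same length")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0.0:
            continue  # by convention 0 * log(0 / q) = 0
        if qi == 0.0:
            return math.inf  # P puts mass where Q puts none
        total += pi * math.log(pi / qi)
    return total


def mean_kl(original_logits, modified_logits):
    """Average KL(original || modified) over a set of prompts, given one
    logit vector per prompt from each model."""
    kls = [
        kl_divergence(softmax(o), softmax(m))
        for o, m in zip(original_logits, modified_logits)
    ]
    return sum(kls) / len(kls)
```

For instance, `kl_divergence([0.5, 0.5], [0.9, 0.1])` equals ln(5/3), about 0.51 nats, while two identical distributions give exactly 0, which is why the original model's row in the table reads 0.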

To run Heretic, you need Python 3.10 or later and PyTorch 2.2 or later. Then run the following commands, replacing 'Qwen/Qwen3-4B-Instruct-2507' with the name of the model you want to process.

```shell
pip install heretic-llm
heretic Qwen/Qwen3-4B-Instruct-2507
```

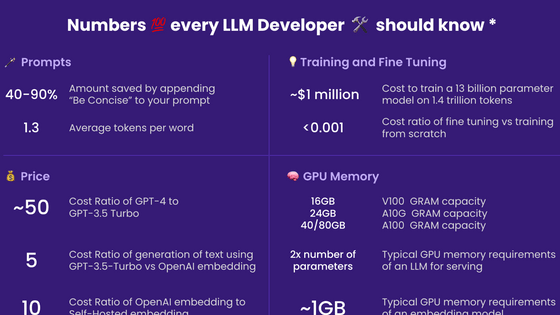

The process is fully automatic and requires no configuration, though parameters are available for finer control. At runtime, Heretic benchmarks the system to determine the batch size that makes the best use of the available hardware. With default settings, ablating Llama-3.1-8B on an RTX 3090 takes approximately 45 minutes.
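The article does not say how Heretic's batch-size benchmark works. As a hypothetical sketch of one common approach, the code below doubles the batch size, times each run, stops at the first out-of-memory failure, and keeps the size with the highest throughput (items per second). Here `MemoryError` stands in for a GPU out-of-memory error (a real implementation would catch the framework's own exception), and `measure_seconds`, `run_batch` and the other names are placeholders, not part of Heretic.

```python
import time
from typing import Callable, Optional


def time_batch(run_batch: Callable[[int], None], batch_size: int) -> float:
    """Wall-clock seconds taken by one call of run_batch(batch_size)."""
    start = time.perf_counter()
    run_batch(batch_size)
    return time.perf_counter() - start


def find_best_batch_size(measure_seconds: Callable[[int], float],
                         max_batch_size: int = 128) -> Optional[int]:
    """Try batch sizes 1, 2, 4, ... and return the one with the best throughput."""
    best_size, best_throughput = None, 0.0
    size = 1
    while size <= max_batch_size:
        try:
            seconds = measure_seconds(size)
        except MemoryError:
            break  # this size does not fit, so larger ones will not either
        throughput = size / seconds  # items processed per second
        if throughput > best_throughput:
            best_size, best_throughput = size, throughput
        size *= 2
    return best_size
```

With real hardware one would pass something like `lambda bs: time_batch(run_batch, bs)`. Picking the size with the best throughput, rather than simply the largest size that fits, matters because very large batches can stop paying off once the GPU is saturated.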


in AI, Posted by logc_nt