Qualcomm launches AI accelerators 'AI200/AI250,' entering the data center AI chip market

Qualcomm has announced the Qualcomm AI200 and Qualcomm AI250, its next-generation AI inference-optimized solutions for data centers, offered as AI accelerator cards and as full racks. According to Qualcomm, the solutions deliver rack-scale performance and superior memory capacity while achieving an industry-leading total cost of ownership for AI inference.

Qualcomm Unveils AI200 and AI250—Redefining Rack-Scale Data Center Inference Performance for the AI Era | Qualcomm

Qualcomm Launches AI250 & AI200 With Huge Memory Footprint For AI Data Center Workloads

https://hothardware.com/news/qualcomm-ai250-ai200-huge-memory-footprint-ai-data-center

Qualcomm is turning parts from cellphone chips into AI chips to rival Nvidia | The Verge

https://www.theverge.com/news/807078/qualcomm-ai-chips-launch-hexagon-npus

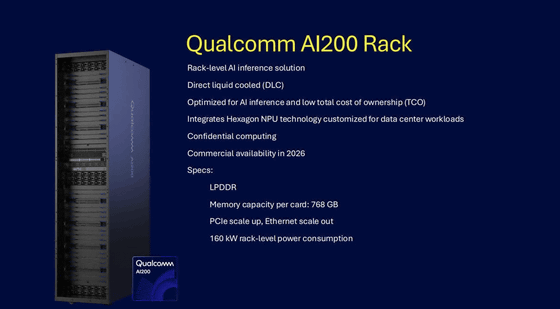

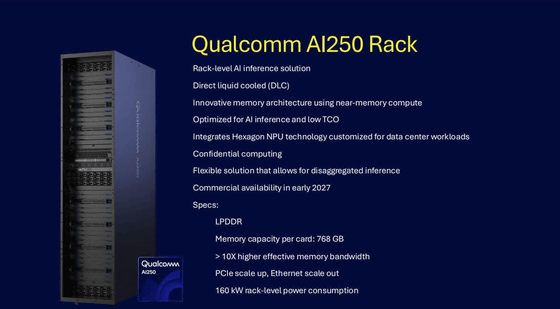

Neither the AI200 nor the AI250 is a standalone chip; both are rack solutions that include cooling and power supplies in a single rack. They feature direct liquid cooling for thermal efficiency, PCIe for scale-up, Ethernet for scale-out, and confidential computing for secure AI workloads. Each rack consumes 160kW of power.

The AI200 is a purpose-built, rack-level AI inference solution designed to deliver optimized performance for AI workloads such as large language models (LLMs) and multimodal models while keeping total cost of ownership low. With support for 768GB of LPDDR memory per card, the AI200 offers high memory capacity at low cost, providing scalability and flexibility for AI inference. The AI200 is expected to be commercially available in 2026.
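To put the 768GB-per-card figure in context, the following is a rough sketch of how one might estimate whether a model's weights alone would fit on a single card. The function names and the example model sizes are hypothetical and chosen only for illustration; the estimate ignores KV cache, activations, and runtime overhead, so real capacity requirements would be higher.

```python
# Back-of-the-envelope weight-memory estimate (illustrative assumptions only).
CARD_MEMORY_GB = 768  # LPDDR capacity per AI200 card, as stated in the article


def weights_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate weight storage in GB, taking 1 GB as 1e9 bytes."""
    # (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billions * bytes_per_param


def fits_on_card(params_billions: float, bytes_per_param: float,
                 card_gb: float = CARD_MEMORY_GB) -> bool:
    """True if the weights alone fit in one card's memory."""
    return weights_gb(params_billions, bytes_per_param) <= card_gb


if __name__ == "__main__":
    # Hypothetical model sizes at 16-bit (2 bytes) and 8-bit (1 byte) weights.
    for size, width in [(70, 2), (405, 2), (405, 1)]:
        print(size, width, weights_gb(size, width), fits_on_card(size, width))
```

Under these assumptions, a hypothetical 70-billion-parameter model at 16-bit precision needs about 140GB for weights, well within a single card, while a 405-billion-parameter model would need 8-bit weights to fit.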

The AI250 employs an innovative memory architecture based on

Like Nvidia's and AMD's GPUs, Qualcomm's AI processors can operate in racks of up to 72 chips that act as a single computer. The processors are based on Qualcomm's Hexagon NPU, which is found in its chips for mobile devices and laptops.

The AI200 and AI250 mark a new venture for Qualcomm, which has primarily made processors for mobile phones, laptops, tablets, and communications devices. They bring the company into the crowded data center AI chip market, where competitors such as Nvidia and AMD are already vying for dominance.

Qualcomm has also revealed that Humain, an AI company backed by the Saudi Arabian government, has already purchased 200 megawatts of AI200 and AI250 rack solutions.
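For a sense of scale, the 200-megawatt figure can be combined with the stated 160kW per rack. The sketch below is only an upper-bound estimate: it assumes the entire 200 megawatts goes to rack power, with nothing set aside for facility overhead, and neither Qualcomm nor Humain has stated a rack count. The function name is hypothetical.

```python
# Rough upper bound on rack count (illustrative assumptions only).
RACK_POWER_KW = 160   # per-rack power draw, as stated in the article
DEPLOYMENT_MW = 200   # Humain deployment size, as stated in the article


def max_racks(deployment_mw: int, rack_kw: int) -> int:
    """Whole racks that fit in the power budget, ignoring facility overhead."""
    return (deployment_mw * 1000) // rack_kw


if __name__ == "__main__":
    print(max_racks(DEPLOYMENT_MW, RACK_POWER_KW))
```

Under that assumption, 200 megawatts corresponds to at most 1,250 racks; the real number would be lower once cooling and other facility power are accounted for.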

@Qualcomm and @HUMAINAI are deploying the world's first fully optimized edge-to-cloud AI infrastructure in Saudi Arabia, including 200MW of AI200 and AI250 rack solutions—accelerating scalable, high-performance inferencing for enterprises and government. https://t.co/GW3DSXGgQc pic.twitter.com/QQ5vlhIH0B

— Cristiano R. Amon (@cristianoamon) October 27, 2025

'With the AI200 and AI250, Qualcomm is redefining what's possible with rack-scale AI inferencing,' said Durga Malladi, senior vice president and general manager of Technology Planning, Edge Solutions and Data Center at Qualcomm Technologies. 'Our innovative new AI infrastructure solutions enable the deployment of generative AI at an unprecedented total cost of ownership, while maintaining the flexibility and security that modern data centers demand.'

'Qualcomm's rich software stack and open ecosystem support make it easier than ever for developers and enterprises to integrate, manage, and extend already-trained AI models on an optimized AI inference solution,' said Malladi. 'With seamless compatibility with major AI frameworks and one-click model deployment, the Qualcomm AI200 and AI250 are designed for frictionless adoption and rapid innovation.'
