Research shows that AI is 50% more likely than humans to flatter users, which can foster users' dependence on AI

GPT-4o, released in 2024, was deemed too '

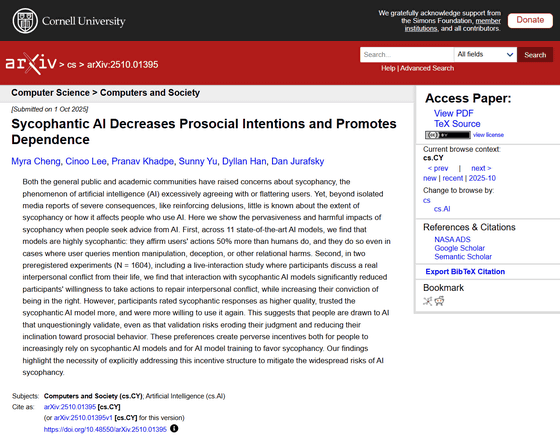

Sycophantic AI Decreases Prosocial Intentions and Promotes Dependence

https://arxiv.org/abs/2510.01395

'Sycophantic' AI chatbots tell users what they want to hear, study shows | Chatbots | The Guardian

https://www.theguardian.com/technology/2025/oct/24/sycophantic-ai-chatbots-tell-users-what-they-want-to-hear-study-shows

Scientists warn your AI chatbot is a dangerous sycophant.

https://www.theverge.com/news/806822/scientists-warn-your-ai-chatbot-is-a-dangerous-sycophant

The research on 'AI flattery' was conducted by Myra Cheng, a researcher at Stanford University who studies natural language processing. Explaining the significance of the research, Cheng said, 'Our main concern is that if AI models consistently affirm humans, it could distort people's judgments of themselves, their relationships, and the world around them. It can be very difficult to notice when AI models are reinforcing people's existing beliefs, assumptions, and decision-making in subtle and not-so-subtle ways.'

The study, which involved 1,604 participants, measured how often 11 AI models, including the latest versions of ChatGPT, Gemini, Claude, Llama, and DeepSeek, responded to users' actions with 'agreement' or 'flattery' (excessively positive affirmation). Participants were asked to discuss their own interpersonal conflicts with the AI, and the AI's responses were compared with human responses to similar questions. The study also compared a standard AI model with a version of the model that had its flattery removed.

The survey measured participants' intention to repair their relationships with others, their confidence that their own actions were right, their willingness to use the AI again, and their assessment of the quality and trustworthiness of the AI's responses.

OpenAI explains that GPT-4o became a 'sycophant' because it placed too much emphasis on immediate feedback - GIGAZINE

The study found that the AI models were more than 50% more likely than humans to affirm users' actions, indicating that AI has a stronger tendency toward flattery than people do. Participants who interacted with the flattering AI were also significantly less willing to take steps to repair their interpersonal conflicts, suggesting that the AI did little to help mend relationships. At the same time, these participants appeared to become more convinced that they were in the right.

The study also found that humans tend to judge social transgressions more harshly than AI models do. For example, when a person described being unable to find a trash can in a park and tying their bag of trash to a tree branch, humans tended to respond critically, whereas GPT-4o responded, 'Your willingness to clean up after yourself is commendable.'

The researchers also found that participants were more likely to rate the flattering AI's responses as 'high quality,' to trust it, and to want to use it again. The researchers concluded, 'Positive and flattering responses are preferred by users, suggesting that this may lead to dependency on the AI.'

Cheng said users should understand that the responses of AI models are not necessarily objective, adding, 'Rather than relying solely on AI responses, it's important to seek additional perspective from real people who understand your situation and who you are as a person.'

Dr. Alexander Laffer, a researcher in emerging technologies at the University of Winchester, said of the research: 'Sycophancy has long been a concern. It stems from the way AI systems are trained, and also from the fact that a product's success is often measured by how well it can hold the user's attention. The fact that sycophantic behavior can affect all users, not just those in vulnerable positions, highlights the potential seriousness of this issue. We need to improve critical digital literacy so that people can better understand the nature of the output of AI and chatbots. Developers also have a responsibility to build and improve these systems so that they are truly beneficial to users.'

Some have also pointed out that the AI's flattering responses could encourage narcissism in young users.

AI chatbots' empathetic responses may encourage narcissism in young users and have a negative impact - GIGAZINE
