It turns out that the cause of the large-scale AWS outage was a design flaw hidden in the 'DNS management system'

On October 20, 2025 (Japan time), a large-scale service outage occurred in the Amazon Web Services North Virginia (us-east-1) region, forcing web services and applications around the world to temporarily halt. Amazon's subsequent investigation revealed that the outage was caused by a design flaw in the automated DNS management system of DynamoDB, an AWS database service.

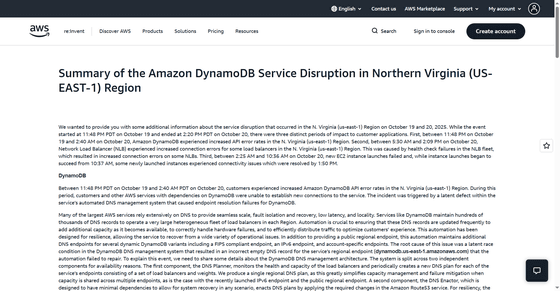

Summary of the Amazon DynamoDB Service Disruption in Northern Virginia (US-EAST-1) Region

https://aws.amazon.com/jp/message/101925/

According to AWS, the outage, which occurred between 3:48 PM on October 20, 2025 (Japan time) and 6:20 AM on October 21, progressed in three stages, first affecting DynamoDB, then EC2, and finally Network Load Balancer (NLB). The first stage occurred between 3:48 PM and 5:40 PM on October 20, and caused a sudden increase in DynamoDB API error rates. The root cause was a design bug in DynamoDB's DNS management system.

Large-scale services like AWS use DNS to distribute traffic across a huge number of servers. DynamoDB's DNS records are updated frequently by an automated system, including a component called 'DNS Enactor', to handle server additions and failures. Several DNS Enactors run independently, and in this incident their processing overlapped in a way the design did not account for.

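According to AWS, while one DNS Enactor was taking an unusually long time to apply an old set of DNS records, another DNS Enactor applied the latest records and then started a cleanup process that deletes outdated ones. The delayed DNS Enactor then finished and overwrote the new records with its old ones. The check meant to stop an older plan from replacing a newer one had been performed before the long delay, so it no longer reflected the current state. Almost immediately, the cleanup process deleted those old records as outdated. As a result, every IP address was removed from the DNS record for DynamoDB's regional endpoint, and the system was left in an inconsistent state that its automation could not recover from on its own.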
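The failure is a classic check-then-act race. The Python sketch below replays the interleaving AWS described using hypothetical names (`Endpoint`, `apply_plan`, `clean_up`, plan generations 1 and 5); it is a simplified model, not DynamoDB's actual code. The unsafe path checks a plan's generation, stalls, and writes later; the safe path performs the check and the write under one lock, which is one common fix for this class of bug and not necessarily the one AWS will adopt.

```python
import threading
from dataclasses import dataclass, field


@dataclass
class Endpoint:
    """Hypothetical stand-in for one DNS name and its currently applied plan."""
    active_generation: int = 0
    records: list = field(default_factory=list)


_lock = threading.Lock()


def is_newer(endpoint: Endpoint, generation: int) -> bool:
    # The "check" half of check-then-act: compare against what is applied now.
    return generation > endpoint.active_generation


def apply_plan(endpoint: Endpoint, generation: int, plans: dict) -> None:
    # The "act" half: overwrite the record without re-validating anything.
    endpoint.active_generation = generation
    endpoint.records = list(plans[generation])


def apply_plan_if_newer(endpoint: Endpoint, generation: int, plans: dict) -> bool:
    # Safer variant: check and write under one lock, so the check cannot go stale.
    with _lock:
        if not is_newer(endpoint, generation):
            return False
        apply_plan(endpoint, generation, plans)
        return True


def clean_up(endpoint: Endpoint, plans: dict, keep_from: int) -> None:
    # Delete plans older than keep_from. If one of them is the plan currently
    # applied, the live record is emptied along with it.
    for generation in [g for g in plans if g < keep_from]:
        del plans[generation]
        if endpoint.active_generation == generation:
            endpoint.records = []


def run_scenario(safe: bool) -> list:
    plans = {1: ["10.0.0.1", "10.0.0.2"], 5: ["10.0.0.3", "10.0.0.4"]}
    endpoint = Endpoint()
    # Slow enactor validates old plan 1 (passes: nothing is applied yet), then stalls.
    slow_check_passed = is_newer(endpoint, 1)
    # Fast enactor applies the newest plan, 5.
    apply_plan_if_newer(endpoint, 5, plans)
    # Slow enactor wakes up and writes plan 1.
    if safe:
        apply_plan_if_newer(endpoint, 1, plans)  # rejected: 1 is not newer than 5
    elif slow_check_passed:
        apply_plan(endpoint, 1, plans)  # stale check: old plan overwrites new one
    # Fast enactor's cleanup removes every plan older than 5, including plan 1.
    clean_up(endpoint, plans, keep_from=5)
    return endpoint.records


print(run_scenario(safe=False))  # []
print(run_scenario(safe=True))   # ['10.0.0.3', '10.0.0.4']
```

The calls run sequentially to replay one specific interleaving deterministically; in a real system the two enactors would be separate processes, and the lock would need to be something they share, such as a conditional write in the backing store.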

Amazon's technical team identified the issue at 4:38 PM, repaired the DNS at 5:25 PM, completed global table synchronization at 5:32 PM, and restored connectivity to all customers by 5:40 PM.

The second stage affected the virtual server service Amazon EC2 and lasted from 3:48 PM on October 20 to 5:50 AM the following day, October 21. During this time, attempts to launch new EC2 instances failed with errors such as 'RequestLimitExceeded' and 'InsufficientCapacity', while existing instances continued to run normally.

The cause was the first-stage DynamoDB failure. The Droplet Workflow Manager (DWFM), which manages the physical servers that host EC2 instances, depends on DynamoDB to maintain a 'lease' with each server, and while DynamoDB was unavailable those leases timed out. A server without a valid lease is not used for new instances, which is why launches failed. Even after DynamoDB recovered, DWFM was overloaded by trying to re-establish leases with the entire fleet at once. AWS began selectively restarting DWFM hosts at 12:14 AM on October 21 to restore normal operation, and by 1:28 AM leases had been re-established between DWFM and all physical servers.
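AWS's summary does not describe DWFM's internals, so the following is only a toy model with hypothetical names (`valid_leases_after`, `renewals_per_tick`, `ttl`). It illustrates one general way mass lease re-establishment can stall: if a manager can renew only so many leases per unit of time, and each lease expires after a fixed period, then renewal capacity multiplied by lease lifetime caps how many servers can hold a valid lease at the same moment.

```python
def valid_leases_after(num_servers: int, renewals_per_tick: int, ttl: int, ticks: int) -> int:
    """Toy model: renew leases round-robin, at most renewals_per_tick per tick.

    Each renewed lease stays valid for ttl ticks. Returns how many servers
    hold a valid lease at the end of the run.
    """
    expires_at = [0] * num_servers  # 0 means "no valid lease yet"
    cursor = 0
    for tick in range(1, ticks + 1):
        for _ in range(renewals_per_tick):
            expires_at[cursor] = tick + ttl
            cursor = (cursor + 1) % num_servers
    # A lease is still valid if it expires after the final tick.
    return sum(1 for t in expires_at if t > ticks)


print(valid_leases_after(1000, 50, 10, 100))   # 500
print(valid_leases_after(1000, 200, 10, 100))  # 1000
```

In the first call, 50 renewals per tick times a 10-tick lifetime covers only 500 servers, so half of this hypothetical fleet is always without a lease no matter how long the loop runs; in the second, capacity exceeds the fleet size and every server ends up covered.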

Once DWFM had recovered, however, the 'Network Manager' system, which propagates network configuration to EC2 instances, could not keep up with the backlog of accumulated updates. As a result, newly launched EC2 instances still could not connect to the network even after starting up. The network propagation delays were resolved at 2:36 AM on the 21st, and EC2 fully returned to normal at 5:50 AM on the 21st.
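Why a backlog can linger after its root cause is fixed comes down to simple queue arithmetic: a queue shrinks only by the amount that processing exceeds new arrivals. The sketch below uses made-up numbers and a hypothetical `ticks_to_drain` helper; it is not a model of Network Manager's actual design.

```python
def ticks_to_drain(backlog: int, arrivals_per_tick: int, processed_per_tick: int):
    """Return how many ticks a backlog takes to empty, or None if it never does."""
    if processed_per_tick <= arrivals_per_tick:
        return None  # the queue holds steady or grows forever
    ticks = 0
    while backlog > 0:
        # Each tick adds new work and removes what was processed.
        backlog = max(0, backlog + arrivals_per_tick - processed_per_tick)
        ticks += 1
    return ticks


print(ticks_to_drain(1000, 40, 50))  # 100
print(ticks_to_drain(1000, 50, 50))  # None
```

With 1,000 queued updates, even a processor that is 25% faster than the arrival rate needs 100 ticks to catch up, because only the 10-update surplus per tick goes toward the backlog.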

The third stage hit Network Load Balancer (NLB), which checks the health of the EC2 instances behind it, and caused connection errors between 9:30 PM on October 20 and 6:09 AM on October 21. NLB ran health checks against new EC2 instances whose network configuration had not yet finished propagating, so healthy nodes were wrongly marked as failed and taken out of service, triggering frequent DNS failovers. Engineers suspended automatic health-check failover at 1:36 AM on the 21st and re-enabled it at 6:09 AM on the 21st once EC2 had recovered.

AWS apologized for the impact of the outage on its customers. 'We have a strong track record of operating our services with high levels of availability and understand how important our services are to our customers, their applications, their end users, and their businesses. We understand that this event had a significant impact on many of our customers. We are doing everything we can to learn from this event and use it to further improve availability,' the company said in a statement.

As next steps, AWS said it has suspended the automated DynamoDB DNS update system worldwide and will not resume it until the bug is fixed and additional safeguards are in place. AWS also said it will add a mechanism that limits how many servers NLB can take out of service when health checks fail, strengthen recovery testing for EC2's DWFM, and improve the throttling that limits incoming work under high load.
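AWS has not published how the NLB limit will work. A minimal sketch of the general idea, assuming a simple percentage cap (`nodes_to_remove` and `max_percent` are hypothetical, not NLB settings), lets health checks remove failing nodes only up to a fixed share of the fleet per pass, so a fault in the checking path cannot empty the pool on its own:

```python
def nodes_to_remove(health: dict, max_percent: int) -> list:
    """Pick failing nodes to take out of service, capped at max_percent of the fleet.

    health maps a node name to the result of its latest check (True = passed).
    """
    failing = sorted(name for name, passed in health.items() if not passed)
    # Integer arithmetic avoids float rounding surprises in the budget.
    budget = len(health) * max_percent // 100
    return failing[:budget]


# 8 of 10 nodes fail at once, e.g. because the checker itself is wrong.
health = {f"node{i}": i >= 8 for i in range(10)}
print(nodes_to_remove(health, max_percent=30))  # ['node0', 'node1', 'node2']
```

When most of a fleet fails simultaneously, the checker is often a more likely culprit than the nodes, which is the situation NLB was in during this outage; a cap trades slower removal of genuinely bad nodes for protection against that case.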

in Security, Posted by log1i_yk